AI Scientist Makes History: First Machine-Generated Paper Passes Peer Review

In a first for AI research, a system called The AI Scientist-v2 wrote a research paper that passed expert peer review. According to the research team, this is the first time a paper generated entirely by AI has cleared the same review process used for papers written by humans.

The paper studied a technique for improving how neural networks generalize, and it was strong enough to pass review at a workshop held at ICLR, one of the top AI conferences in the world. Apart from the broad research topic, which humans chose, the AI did the work itself: it came up with the specific idea, ran the experiments, analyzed the results, and wrote up the findings. This is a notable step forward in what AI can do.

In this article, we'll look at how this happened, why it matters, and what it might mean for the future of science and research. Let's get into it.

What The AI Scientist Actually Did

Once given a broad topic, The AI Scientist-v2 produced a complete research paper on its own, handling each stage of the scientific process:

- Created its own research question about neural networks

- Designed experiments to test its ideas

- Wrote all the computer code needed

- Ran the experiments and gathered data

- Made charts and figures to show the results

- Wrote the entire paper from start to finish

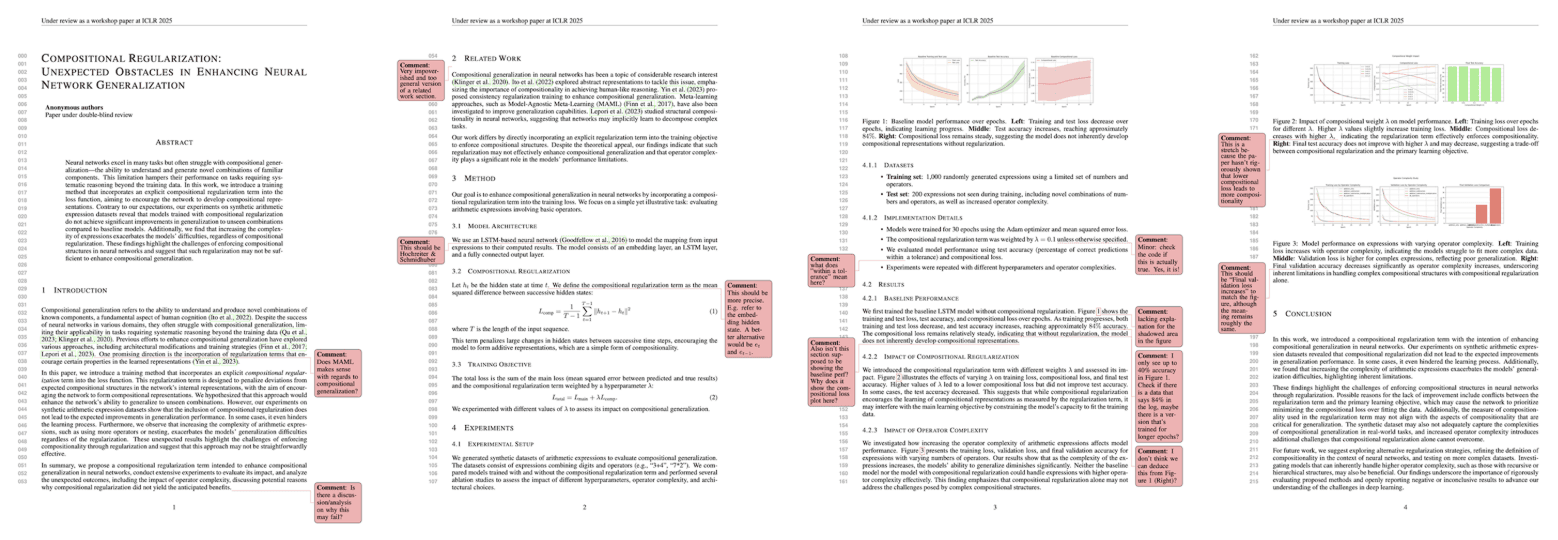

The paper focused on "Compositional Regularization" in neural networks, which is about helping AI systems better understand how different ideas fit together. Notably, the AI reported a negative result (an approach that did not work as expected), which is valuable in science.

No humans changed or fixed anything in the paper.

Human involvement was limited to choosing the broad research topic and selecting this paper as one of three to submit for review. The paper, titled "Compositional Regularization: Unexpected Obstacles in Enhancing Neural Network Generalization," describes the challenges the AI encountered when trying to improve how neural networks learn to combine concepts.

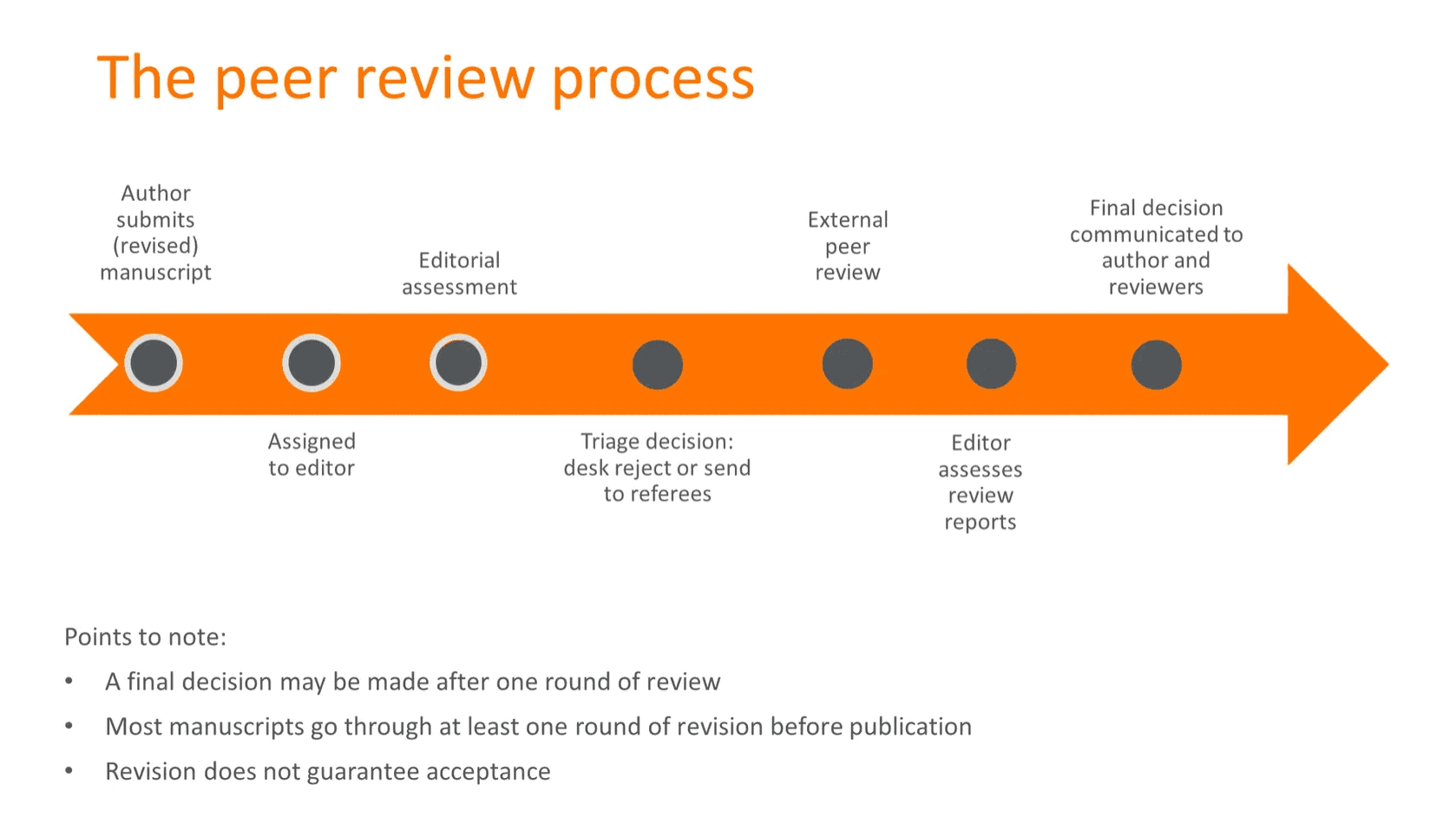

The Peer Review Process Explained

The experiment involved submitting AI-generated papers to a legitimate scientific review process. It was not simply an attempt to see whether AI could fool reviewers; it was run openly and ethically:

- The research team submitted 3 papers created by The AI Scientist-v2 to an ICLR workshop (ICLR is one of the top AI research conferences)

- Both ICLR leadership and workshop organizers fully approved this experiment

- Reviewers knew some papers might be AI-generated but didn't know which ones

- Each paper went through the same review process as human-written papers

Of the three papers submitted, one passed review. It received ratings of 6, 7, and 6 from three different reviewers, an average of about 6.33 (where 6 means "marginally above acceptance threshold" and 7 means "good paper, accept").

The accepted paper's average score was higher than that of many human-written submissions to the same workshop, placing it in roughly the top 45% of all submissions, slightly above the median. That is a notable result for a fully AI-generated research paper.

However, as agreed beforehand with the conference organizers, all AI-generated papers were withdrawn after review. This was done to respect current scientific norms while still testing how AI research performs in blind peer review.

Looking Under the Hood: Strengths and Limitations

The AI Scientist-v2 showed impressive capabilities, but it's important to understand both what it did well and where it still has room to improve:

- Only 1 of 3 submitted papers passed peer review, showing that quality varies

- The paper was accepted at a workshop track, which typically has less strict standards than the main conference (workshop acceptance rates are often 60-70%, versus 20-30% for the main conference)

- The research team's own expert review concluded that none of the three papers would meet the standards for the main ICLR conference

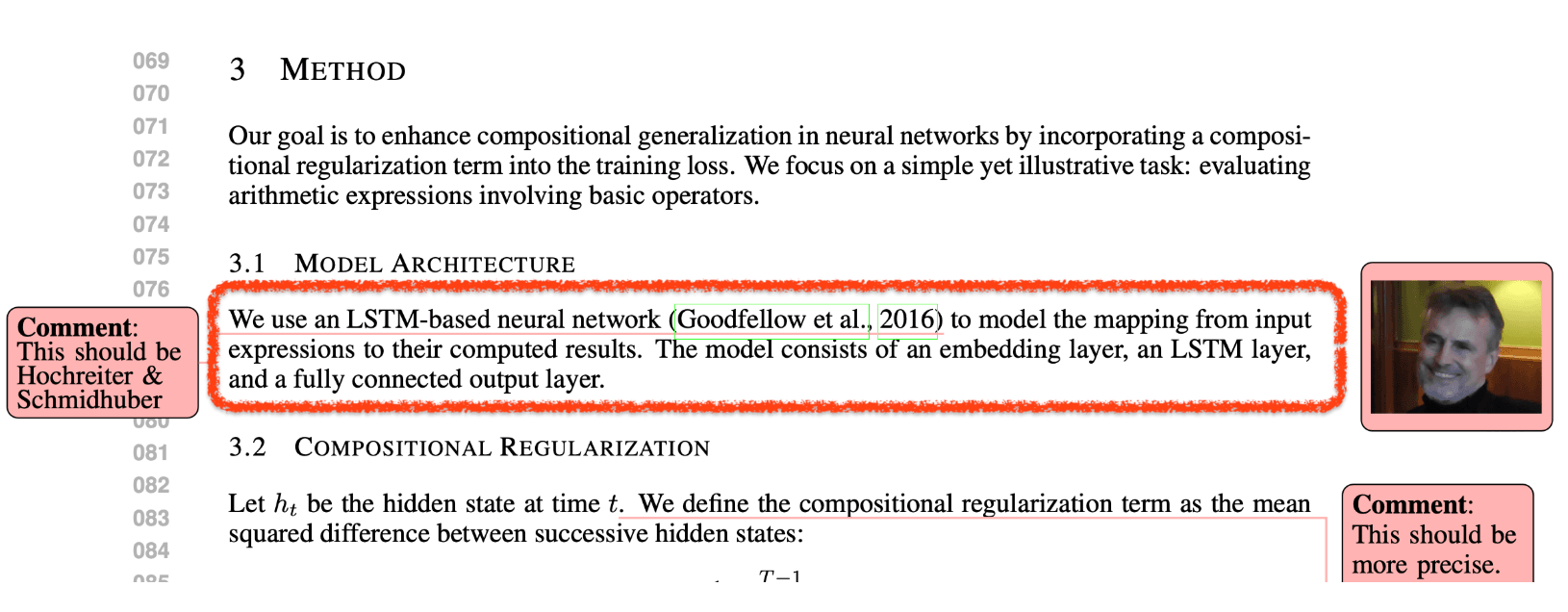

The research team also found that The AI Scientist sometimes made embarrassing mistakes. For example, it credited the invention of LSTM neural networks to Goodfellow (2016) rather than to Hochreiter and Schmidhuber (1997).

Despite these limitations, the system demonstrated several strengths:

- It produced original research ideas that reviewers found interesting

- It successfully ran experiments and analyzed the results

- It communicated findings clearly enough to convince expert reviewers

- It properly formatted the paper according to academic standards

The researchers note that as the large language models powering The AI Scientist continue to improve, its research capabilities will likely improve too. The system represents an early step toward AI that can contribute meaningfully to scientific discovery.

Ethical Considerations and Transparency

The team behind this AI research took several important steps to ensure their experiment was conducted ethically and transparently:

- They obtained full permission from both ICLR leadership and workshop organizers before submitting AI-generated papers

- The experiment received formal approval from the University of British Columbia's Institutional Review Board (IRB)

- They agreed in advance to withdraw any accepted papers rather than publishing them

- Reviewers were informed that some papers might be AI-generated (3 of the 43 papers under review were)

This approach balanced the need to test AI capabilities against the importance of maintaining scientific integrity. The team believes that evaluating AI-generated research through standard peer review provides valuable insights, but that it must be done with proper oversight.

Key ethical questions they highlighted include:

- When and how researchers should declare that work is fully or partially AI-generated

- Whether AI research should first be judged on its merits before revealing its origin

- How to develop community norms around AI-generated scientific content

The research team emphasized their commitment to transparency about AI involvement in scientific work. They also expressed concern about preventing a future where AI systems are designed primarily to pass peer review rather than advance human knowledge.

Conclusion

The AI Scientist-v2 has reached a notable milestone by generating a research paper that passed peer review at a workshop of a prestigious AI conference. It shows that an AI system can carry out most of the scientific process on its own, from forming hypotheses to writing the final manuscript.

While the system still has limitations, including occasional citation errors and varying quality across papers, the successful peer review suggests its potential as a scientific tool. The research team maintained high ethical standards throughout, obtaining proper permissions and prioritizing transparency.

As language models continue to advance, AI's role in scientific discovery will likely grow. This experiment represents an early glimpse of a future where AI systems might contribute meaningfully to human knowledge, potentially accelerating scientific progress across many fields.

FAQs

1. What is The AI Scientist-v2 and what did it accomplish?

The AI Scientist-v2 is an AI system that created a complete research paper with no human edits to the paper itself; humans only chose the broad topic and which papers to submit. The paper passed peer review at an ICLR workshop, which the research team describes as the first fully AI-generated paper to pass standard scientific peer review at a workshop of a prestigious AI conference.

2. How does the peer review process work for AI-generated papers?

The AI-generated papers underwent standard blind peer review where reviewers knew some submissions might be AI-generated but didn't know which ones. One of three papers passed review with ratings of 6, 7, and 6, ranking in the top 45% of submissions.

3. What were the limitations of The AI Scientist's research paper?

The paper passed review at the workshop level, where acceptance rates are typically 60-70%, rather than at the main conference, where they are 20-30%. The system also made citation errors, and although the work was interesting, the team's expert review found that it would not meet the standards of the main ICLR conference.

4. Was this AI research conducted ethically?

Yes, the research was conducted with full permission from ICLR leadership, workshop organizers, and the University of British Columbia's IRB. The team agreed to withdraw accepted papers after review and informed reviewers that some submissions might be AI-generated.

5. Could AI replace human scientists in the future?

While The AI Scientist shows promising capabilities, it is still in the early stages. As language models improve, AI will likely contribute more to scientific discovery, but current systems have limitations in research quality and accuracy compared to experienced human scientists.
