What Caused the Cloudflare Outage? Why 20% of the Internet Crashed on November 18

Your favorite websites suddenly stopped working on November 18, 2025. ChatGPT went silent. X crashed. Spotify stopped playing music. Claude AI disappeared. All at once, millions of people worldwide saw error messages instead of their usual screens. The culprit? A company called Cloudflare that most people have never heard of, even though it sits in front of roughly 20% of all websites.

What started as a simple database update turned into one of the biggest internet disruptions of 2025, taking down dozens of major platforms for hours. But here's what makes this story interesting: the crash wasn't caused by hackers or cyberattacks. It was triggered by something far simpler and more surprising.

In this article, we'll break down exactly what Cloudflare does, why one company's problem affected so many websites, what went wrong behind the scenes, and how engineers fixed it. We'll also explore whether these massive internet outages are becoming the new normal.

Let's get into it.

Executive Summary

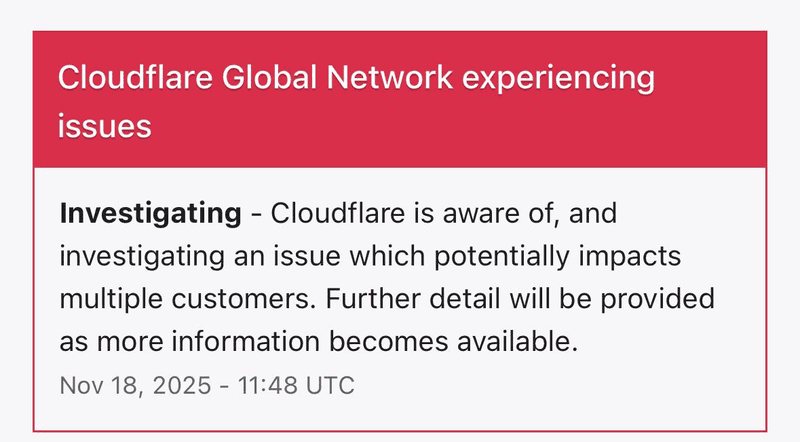

On November 18, 2025, Cloudflare experienced a major outage that disrupted popular services including ChatGPT, X (Twitter), Spotify, and Claude AI for several hours. The problem started around 6:30 AM Eastern Time, when a routine database permissions update accidentally caused a critical configuration file to balloon from about 60 features to more than 200. That exceeded the system's hardcoded limit of 200, triggering crashes across Cloudflare's global network.

Key Impact Points:

- Sites behind Cloudflare, which serves roughly 20% of all websites, were affected simultaneously

- Services crashed and recovered in five-minute cycles, initially suggesting a cyberattack

- Engineers needed about two hours to pinpoint the root cause and roughly three hours to get most traffic flowing again

- Full restoration completed by 5:06 PM UTC after manual file replacement and system-wide restart

Cloudflare's CTO Dane Knecht publicly apologized, and the company's official blog post called the incident "unacceptable" and promised system improvements. This outage follows recent disruptions at Amazon Web Services and Microsoft Azure, highlighting how modern internet infrastructure creates single points of failure.

While total outage numbers remain steady, the concentration of services under a few providers means each incident now affects millions of users simultaneously, making disruptions feel more frequent and severe.

What Happened During the Cloudflare Outage

Cloudflare experienced a major technical problem on November 18, 2025, that brought down dozens of popular websites and services. The disruption started around 6:30 AM Eastern Time and affected millions of users across the globe. People trying to access their usual platforms saw error messages instead of their normal screens.

The outage hit a wide range of services that many people use every day:

AI and Work Tools:

- ChatGPT stopped responding to users

- Claude AI went offline

- Perplexity search became unavailable

- Canva design platform crashed

Social Media and Entertainment:

- X (formerly Twitter) went down completely

- Spotify music streaming stopped working

- Letterboxd film review site showed errors

Gaming Platforms:

- League of Legends players couldn't connect

- Genshin Impact experienced server issues

- Honkai: Star Rail faced disruptions

The problems lasted for several hours. Most traffic was flowing normally again by 14:30 UTC, and Cloudflare reported that all systems were fully restored by 17:06 UTC (just after noon Eastern Time).

Users could access their favorite websites and apps without any further issues. Cloudflare's team continued monitoring their systems to make sure everything stayed stable after the fix.

What is Cloudflare and Why Does It Matter?

Now that we know what happened during the outage, you might be wondering what Cloudflare actually does and why its problems affected so many websites at once.

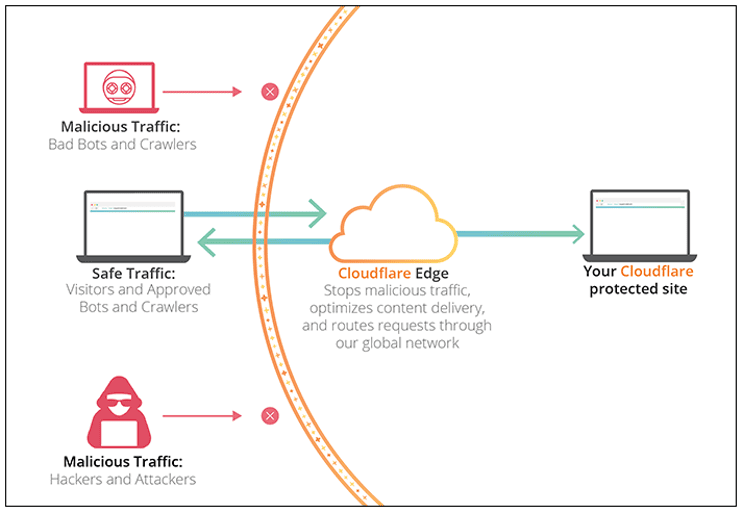

Think of Cloudflare as a security guard and traffic controller for websites. It sits between you and the websites you visit. When you try to open a website, your request goes through Cloudflare first before reaching the actual site. This setup helps websites run smoothly and stay protected.

Here's what Cloudflare does for websites:

- Blocks harmful traffic and cyberattacks before they reach the site

- Checks if visitors are real people or automated bots

- Stores copies of website content closer to users for faster loading

- Manages huge amounts of traffic without slowing down
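To make the "security guard and traffic controller" idea concrete, here is a deliberately simplified Python sketch of a reverse proxy handling one request. Everything in it is hypothetical: the function names, the bot-score threshold, and the in-memory cache are illustrations, not Cloudflare's code. It shows why a failure inside the bot-checking step turns into an error page even when the website's own server is perfectly healthy.

```python
from typing import Callable, Dict, Tuple

# Hypothetical cache of page content, keyed by URL path.
CACHE: Dict[str, str] = {"/home": "<cached home page>"}

BOT_THRESHOLD = 30  # made-up cutoff: scores below this are treated as bots


def handle_request(path: str,
                   bot_score: Callable[[str], int],
                   fetch_from_origin: Callable[[str], str]) -> Tuple[int, str]:
    """Simplified reverse proxy: bot check, then cache, then origin server."""
    try:
        # Step 1: score the request (lower = more bot-like in this sketch).
        if bot_score(path) < BOT_THRESHOLD:
            return 403, "blocked: suspected bot"
    except Exception:
        # If the bot-check module itself fails, the visitor gets a 5xx error,
        # even though the website's own server was never contacted.
        return 500, "internal error in proxy"
    # Step 2: serve a stored copy when one exists.
    if path in CACHE:
        return 200, CACHE[path]
    # Step 3: otherwise forward the request to the website's own server.
    return 200, fetch_from_origin(path)


def healthy_origin(path: str) -> str:
    return f"<origin page for {path}>"


def human_scorer(path: str) -> int:
    return 90


def broken_scorer(path: str) -> int:
    raise RuntimeError("bot module crashed")


print(handle_request("/about", human_scorer, healthy_origin))   # (200, '<origin page for /about>')
print(handle_request("/about", broken_scorer, healthy_origin))  # (500, 'internal error in proxy')
```

The second call is the outage in miniature: the origin is fine, but the request never gets past the broken check.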

Cloudflare handles about 81 million requests every single second. That's a massive amount of internet activity flowing through their systems at any moment.
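Assuming that figure holds as a round-the-clock average (real traffic rises and falls over the day), the daily total works out to roughly 7 trillion requests:

```latex
81 \times 10^{6}\,\frac{\text{requests}}{\text{s}} \times 86{,}400\,\frac{\text{s}}{\text{day}} \approx 7.0 \times 10^{12}\,\frac{\text{requests}}{\text{day}}
```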

The company provides services to roughly 20% of all websites on the internet. This includes major platforms, small businesses, and everything in between. Many big names rely on Cloudflare to keep their services running smoothly.

This is exactly why one technical problem at Cloudflare created such widespread chaos. When their systems went down, all the websites depending on them went down too. It's like a power outage at a main electrical station affecting an entire neighborhood. One failure point impacts everyone connected to it.

Why Did Cloudflare Go Down?

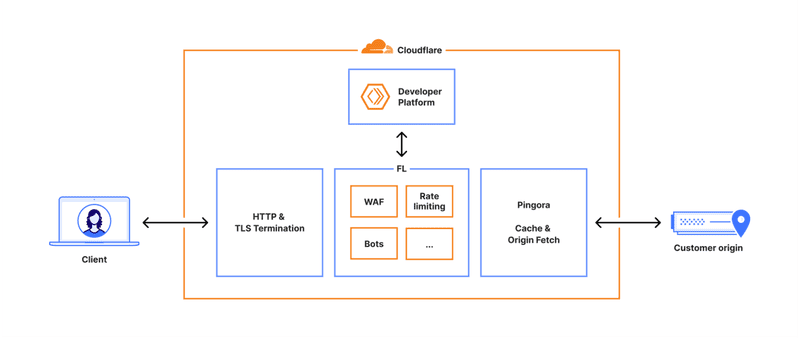

The outage started with a seemingly harmless database update at 11:05 AM UTC. Cloudflare made a permissions change to their ClickHouse database system to improve security and access control. This change had an unexpected consequence.

Cloudflare's Bot Management system uses a configuration file called a "feature file" to identify and block automated bot traffic. This file contains around 60 individual features that help machine learning models distinguish between real users and bots. The system generates this file every five minutes using data from their ClickHouse database.

What went wrong:

The database query pulling this information didn't filter which database it was reading from. After the permissions update, the query started seeing the same data from two database layers instead of one, so features came back as duplicates. The duplicates swelled the feature file from about 60 features to more than 200.
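The duplication is easiest to see with a toy model. In the Python sketch below, each metadata row records which database a feature column lives in. The database names, the table name `bot_features`, and the three feature names are hypothetical stand-ins, not Cloudflare's actual schema. A query that filters only by table, not by database, returns every feature once per database it can see.

```python
from typing import List, Optional, Tuple

Row = Tuple[str, str, str]  # (database, table, column)

# Hypothetical feature names; the real file has around 60 features.
FEATURES = ["ua_entropy", "req_rate", "tls_fingerprint"]


def visible_metadata(permissions_updated: bool) -> List[Row]:
    """Metadata rows the query's account can see (hypothetical schema)."""
    rows = [("default", "bot_features", f) for f in FEATURES]
    if permissions_updated:
        # After the change, the same columns also become visible in a
        # second, underlying database.
        rows += [("underlying", "bot_features", f) for f in FEATURES]
    return rows


def feature_query(rows: List[Row], database: Optional[str] = None) -> List[str]:
    """Return column names for the table; filter by database only if asked."""
    return [col for db, table, col in rows
            if table == "bot_features" and (database is None or db == database)]


updated = visible_metadata(permissions_updated=True)
print(len(feature_query(visible_metadata(permissions_updated=False))))  # 3
print(len(feature_query(updated)))                                      # 6
print(len(feature_query(updated, database="default")))                  # 3
```

Adding the database filter in the last call is enough to make the toy query return each feature exactly once again.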

The software that reads this file had a hardcoded limit of 200 features, with memory reserved up front for that many. When the file exceeded this limit, the software hit an unrecoverable error (a "panic") and crashed. The crash spread across the entire network because the oversized file was automatically distributed to all of Cloudflare's servers within minutes.
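Here is a minimal Python sketch of that failure mode, assuming a loader that reserves room for a fixed number of features up front. The names `MAX_FEATURES`, `FeatureTable`, and `load_or_keep_previous` are hypothetical, the 230-entry file is an illustrative stand-in for "more than 200", and Python raises an exception where the real software panicked.

```python
from typing import List, Optional

MAX_FEATURES = 200  # hardcoded capacity, matching the limit described above


class FeatureTable:
    """Hypothetical loader that reserves space for MAX_FEATURES up front."""

    def __init__(self) -> None:
        self.slots: List[Optional[str]] = [None] * MAX_FEATURES
        self.count = 0

    def load(self, features: List[str]) -> None:
        if len(features) > MAX_FEATURES:
            # Strict behavior: nothing handles this error, so the caller crashes.
            raise RuntimeError(
                f"{len(features)} features exceeds limit of {MAX_FEATURES}")
        for i, name in enumerate(features):
            self.slots[i] = name
        for i in range(len(features), self.count):
            self.slots[i] = None  # clear entries left over from a larger file
        self.count = len(features)


def load_or_keep_previous(table: FeatureTable, features: List[str]) -> bool:
    """Alternative design: reject an oversized file, keep the old features."""
    try:
        table.load(features)
        return True
    except RuntimeError:
        return False


good = [f"feature_{i}" for i in range(60)]
oversized = [f"feature_{i}" for i in range(230)]  # illustrative "more than 200"

table = FeatureTable()
table.load(good)
print(load_or_keep_previous(table, oversized), table.count)  # False 60
try:
    table.load(oversized)
except RuntimeError as err:
    print("crash:", err)  # crash: 230 features exceeds limit of 200
```

The limit check itself works in both paths; the difference is whether "too many features" is treated as fatal or as a bad file to reject. The second path is shown for contrast, not as a description of Cloudflare's fix.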

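The problem created a cycle: services would crash, briefly recover when a correct file happened to be generated, then crash again when the next oversized file appeared. The alternation happened because the permissions change was being rolled out gradually across the database cluster, so each five-minute run produced a good or bad file depending on whether it queried an updated part of the cluster.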
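A toy simulation of that cycle, assuming each five-minute run lands on either an updated or a not-yet-updated database node. The run sequence is invented, and 230 stands in for "more than 200" features.

```python
from typing import List

LIMIT = 200  # hardcoded feature limit from the article


def generate_file(node_updated: bool) -> int:
    """Feature count of a newly generated file (illustrative numbers)."""
    return 230 if node_updated else 60


def simulate(runs_on_updated_node: List[bool]) -> List[str]:
    """One line per five-minute cycle: is the network serving or crashing?"""
    timeline = []
    for i, updated in enumerate(runs_on_updated_node):
        features = generate_file(updated)
        status = "CRASH" if features > LIMIT else "ok"
        timeline.append(f"t+{5 * i:02d}min: {features} features -> {status}")
    return timeline


# Hypothetical sequence: which kind of node answered each five-minute query.
for line in simulate([True, False, True, True, False]):
    print(line)
```

Because good and bad files alternate unpredictably, the error rate rises and falls in waves, which is the pattern that made engineers first suspect an attack.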

In simpler terms, Cloudflare changed a setting that accidentally made their security software see everything twice, like looking at a mirror reflection. This inflated an important file from about 60 items to more than 200. Their system was built to handle at most 200 items, so when it saw more, it crashed.

This oversized file was automatically copied to every server across the network every five minutes, taking websites down until engineers stopped the copying process and manually replaced it with an older, working file.

How Cloudflare Fixed the Problem

This section gets technical, but understanding the recovery process shows how complex these systems are.

Cloudflare's engineering team faced a challenging diagnosis because the system showed unusual behavior. Services would crash, recover briefly, then crash again every five minutes. This pattern initially led them to suspect a DDoS attack rather than an internal configuration issue.

Initial Response and Investigation (11:31-13:05 UTC):

- Automated monitoring systems detected elevated HTTP 5xx error rates at 11:31

- The team first focused on Workers KV service degradation

- They attempted traffic manipulation and account limiting to restore normal operations

- An incident call was created at 11:35 to coordinate the response
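Alerts like the one at 11:31 are commonly based on the share of responses that come back as server errors. A minimal Python sketch of such a check, assuming a window of recent status codes and a made-up 5% threshold (neither reflects Cloudflare's actual monitoring configuration):

```python
from typing import Iterable


def five_xx_rate(status_codes: Iterable[int]) -> float:
    """Fraction of responses in the window that are HTTP 5xx server errors."""
    codes = list(status_codes)
    if not codes:
        return 0.0
    return sum(1 for c in codes if 500 <= c <= 599) / len(codes)


def should_alert(status_codes: Iterable[int], threshold: float = 0.05) -> bool:
    # threshold is a hypothetical example value, not a real production setting
    return five_xx_rate(status_codes) > threshold


normal_window = [200] * 995 + [502] * 5    # 0.5% errors
outage_window = [200] * 700 + [500] * 300  # 30% errors
print(should_alert(normal_window), should_alert(outage_window))  # False True
```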

Bypass Implementation (13:05 UTC):

- Engineers deployed system bypasses for Workers KV and Cloudflare Access

- These services fell back to an older version of the core proxy (FL instead of FL2)

- This reduced error rates but didn't solve the root problem

Root Cause Identification (13:37-14:24 UTC):

- The team confirmed the Bot Management configuration file was the trigger

- They stopped the automated generation and propagation system for new feature files

- Testing began with a previous known-good version of the configuration file

Deployment and Recovery (14:30-17:06 UTC):

The fix required multiple steps executed in sequence. First, they halted the ClickHouse database query that was generating the oversized feature files every five minutes. Next, they manually inserted a validated previous version of the Bot Management feature file into the distribution queue. This old file contained the correct 60 features instead of the duplicated 200+ features.

The team then forced a restart of the core proxy system across their entire global network. This ensured all servers loaded the corrected configuration file. By 14:30, most traffic began flowing normally again. However, some downstream services remained in a degraded state and required individual restarts. The final services were restored at 17:06 when all systems returned to normal operation.
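The recovery steps map onto a simple publish pipeline. In this hypothetical Python sketch (the class, its fields, and the server names are invented, not Cloudflare's tooling), engineers halt automatic propagation, pin a known-good file, and restart every server so each one reloads the pinned version.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ConfigPipeline:
    """Toy model of distributing a generated feature file to servers."""
    servers: List[str]
    auto_publish: bool = True
    pinned: Optional[List[str]] = None
    latest: List[str] = field(default_factory=list)
    loaded: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, generated: List[str]) -> None:
        # The five-minute job: ignored once automatic propagation is halted.
        if self.auto_publish:
            self.latest = generated

    def restart_all(self) -> None:
        # Every server reloads the pinned file if one is set, else the latest.
        active = self.pinned if self.pinned is not None else self.latest
        for server in self.servers:
            self.loaded[server] = list(active)


good_file = [f"feature_{i}" for i in range(60)]
bad_file = good_file * 4  # illustrative oversized file (240 entries)

pipe = ConfigPipeline(servers=["edge-1", "edge-2", "edge-3"])
pipe.publish(bad_file)
pipe.restart_all()              # servers pick up the oversized file
# Step 1: halt automatic propagation of newly generated files.
pipe.auto_publish = False
pipe.publish(bad_file)          # another bad file is generated but not shipped
# Step 2: manually insert the known-good version.
pipe.pinned = good_file
# Step 3: restart the proxy everywhere so all servers load it.
pipe.restart_all()
print({s: len(f) for s, f in pipe.loaded.items()})  # {'edge-1': 60, 'edge-2': 60, 'edge-3': 60}
```

Halting propagation before pinning matters in this model: otherwise the next five-minute run could overwrite the fix.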

What Cloudflare's CTO Said About the Outage

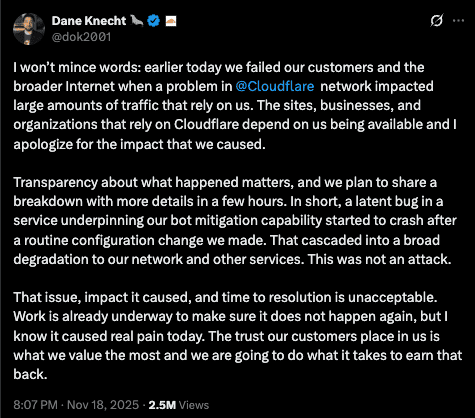

Dane Knecht, Cloudflare's Chief Technology Officer, posted a direct response on X addressing the outage. His statement showed accountability and transparency about what went wrong.

Knecht opened with a clear admission: "I won't mince words: earlier today we failed our customers and the broader Internet when a problem in Cloudflare network impacted large amounts of traffic that rely on us."

He explained the technical cause in simple terms: "A latent bug in a service underpinning our bot mitigation capability started to crash after a routine configuration change we made. That cascaded into a broad degradation to our network and other services."

Knecht emphasized this was not a security breach, stating "This was not an attack."

He concluded by acknowledging customer trust: "The trust our customers place in us is what we value the most and we are going to do what it takes to earn that back."

Cloudflare's official blog post about the incident struck a similar tone:

"An outage like today is unacceptable. We've architected our systems to be highly resilient to failure to ensure traffic will always continue to flow. When we've had outages in the past it's always led to us building new, more resilient systems.

On behalf of the entire team at Cloudflare, I would like to apologize for the pain we caused the Internet today."

Are Internet Outages Happening More Often?

After seeing three major outages in less than a month, you might wonder if the internet is becoming less reliable. The answer is more complicated than a simple yes or no.

Recent Pattern of Major Outages:

November saw the Cloudflare disruption, but it wasn't an isolated incident. Amazon Web Services experienced a major outage in October 2025 that prevented people from ordering coffee through apps and controlling their smart home devices. Days after that, Microsoft's Azure cloud service went down. In 2024, a CrowdStrike software update caused one of the biggest disruptions in recent history, affecting businesses, flights, and hospitals worldwide.

The Numbers Tell an Interesting Story:

Cisco's network monitoring service, ThousandEyes, tracked 12 major outages in 2025 so far, compared to 23 in 2024, 13 in 2023, and 10 in 2022. Apart from the 2024 spike, the yearly counts fall in a similar range, and according to experts the number of outages has remained broadly consistent over time.

Why It Feels Like More Outages:

The key difference is impact scale. Angelique Medina from Cisco ThousandEyes explained to CNN that while outage numbers stay steady, "the number of sites and applications dependent on these services has increased, making them more disruptive to users."

Here's what's changed:

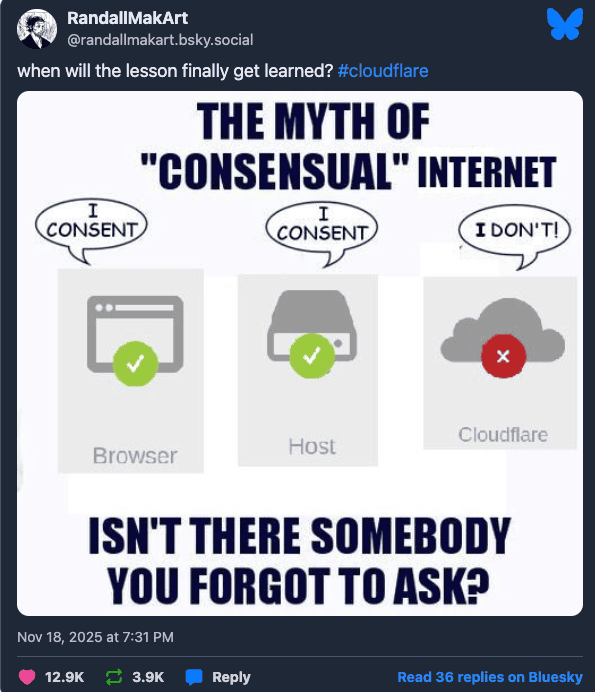

- Fewer companies now provide critical infrastructure for most websites

- When Amazon, Microsoft, or Cloudflare goes down, thousands of services fail simultaneously

- Millions of users get affected at once instead of isolated groups

- Social media amplifies complaints, making every outage highly visible

This concentration creates what experts call "single points of failure," where one company's problem cascades across the entire web.

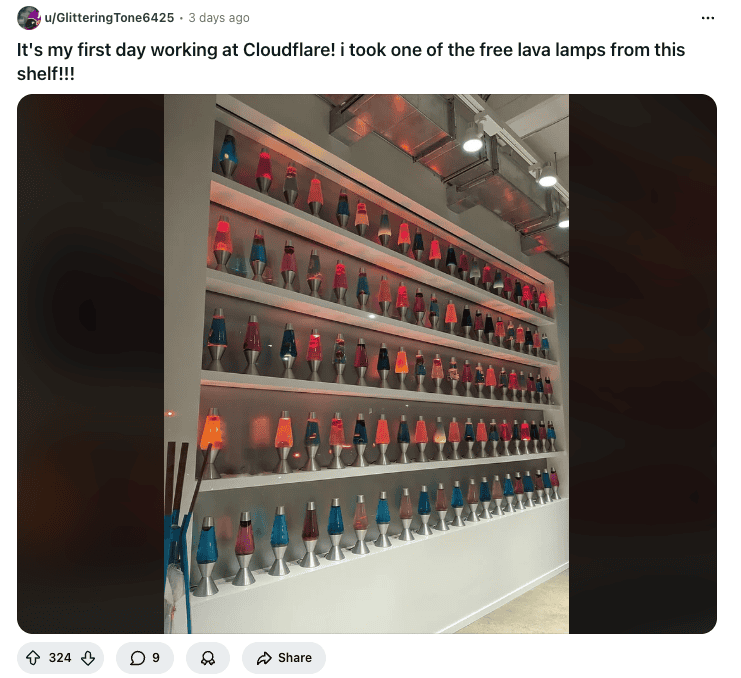

Reddit and Bluesky User Reactions to the Cloudflare Outage
