15 Best Open Source AI Models & LLMs in 2026 (Tested and Reviewed)

A report by Linux Foundation Research and Meta found that 89% of organizations using AI already use open source AI models in some form, and that companies using open source tools see 25% higher ROI than those relying solely on proprietary solutions. Understanding these models is now essential for getting the most business value out of AI.

In this article on the top 15 open source AI models in 2026, we'll explore everything you need to know about these powerful alternatives that are challenging expensive cloud-based AI services.

Here is what we are going to cover:

- Complete breakdown of all 15 leading open source AI models

- What each model does best and hardware requirements

- Who should use each model for maximum results

- How models like Llama, Gemma, Mistral, and DeepSeek compare

- Real user experiences and feedback from Reddit communities

- How Elephas maximizes the potential of these open source models

By the end of this article, you'll understand which open source AI models fit your specific needs and budget, plus discover how to unlock their full potential through smart tools and workflows.

Let's get into it.

Top 15 Open Source AI Models in 2026 at a Glance

| Model Name | Best For | Who It Is Best For | Hardware Requirements |
|---|---|---|---|
| DeepSeek R1 | Complex reasoning and step-by-step problem solving | Students, teachers, and professionals who need clear explanations | Mac hardware, multiple sizes from 1.5B to 671B parameters |
| Llama 3.3 70B | Professional text writing that matches paid services | Business professionals and content creators | Mac hardware, 70B parameters |
| Mixtral 8x7B | Fast multilingual tasks with GPT-3.5 performance | International businesses and multilingual teams | M3 Max Mac, uses 12.9B of 46.7B parameters per token |
| Gemma 2 | Fast and lightweight Google-backed models | Developers building customer-facing apps | Apple Silicon Macs, available in 2B, 9B, and 27B sizes |
| Mistral 7B | Reliable AI help on older Macs with less memory | Users with older Macs who need dependable AI | 16GB RAM minimum, 7.3B parameters |
| Phi-3 | GPT-3.5 performance on basic Macs | Users with limited hardware who want good results | Entry-level Macs, just 3.8B parameters |
| Solar 10.7B | Business document processing | Companies in finance, healthcare, and legal fields | Single GPU, 10.7B parameters |
| Qwen 2.5 32B | Multilingual apps and international business | Global businesses and international development teams | 32B parameters, Mac compatible |
| OpenHermes 2.5 | ChatGPT-like conversations | Developers who want conversational AI | 4GB RAM minimum |
| Vicuna 13B v1.5 | Natural conversations with good reasoning | Users who want ChatGPT-like conversations offline | Mid-range Mac configurations, 13B parameters |
| Zephyr 7B | Following instructions precisely | Professionals who need reliable AI assistance | Standard Mac configurations, 7B parameters |
| LLaVA | Understanding both images and text | Content creators and accessibility developers | Mac hardware, available in 7B, 13B, and 34B sizes |
| Stable Diffusion | Creating professional images on Apple devices | Creative professionals and app developers | Apple Silicon with Neural Engine |
| Whisper.cpp | Fast offline speech-to-text in 99 languages | Content creators, journalists, and accessibility users | Apple Silicon, models from about 75MB to 3GB |
| RWKV | Memory-efficient AI with unlimited context | Users with very limited hardware resources | Just 2GB RAM for 3B model |

1. DeepSeek R1

Best for complex reasoning tasks and step-by-step problem solving

DeepSeek R1 represents a breakthrough in reasoning AI, offering a transparent thought process and reasoning performance that rivals OpenAI's o1 model while running completely offline on Mac hardware. Released in January 2025 under an MIT license, it excels at mathematical problem-solving, code debugging, and logical reasoning tasks that challenge other local models. The model's unique ability to show its thinking process makes it invaluable for educational applications and professional scenarios requiring explainable AI decisions.

Users consistently praise R1's methodical approach to complex problems, with the model working through multi-step solutions visibly rather than providing unexplained answers. The MIT licensing ensures complete freedom for commercial use, making it particularly attractive for businesses seeking powerful local AI without ongoing subscription costs.
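DeepSeek R1 checkpoints typically emit their reasoning between `<think>` and `</think>` tags before the final answer. The Python sketch below separates the two parts of a raw completion, assuming that tag format; `split_reasoning` is a hypothetical helper, not part of any DeepSeek or runtime API, and some local runners already strip or restyle these tags for you.

```python
from __future__ import annotations


def split_reasoning(completion: str) -> tuple[str, str]:
    """Split a raw completion into (reasoning, answer).

    Assumption: the reasoning sits between <think> and </think> tags.
    """
    end = completion.find("</think>")
    if end == -1:
        # No reasoning block: treat the whole text as the answer.
        return "", completion.strip()
    start = completion.find("<think>")
    # Some chat templates pre-fill the opening tag, so it may be absent.
    body_start = start + len("<think>") if 0 <= start < end else 0
    reasoning = completion[body_start:end].strip()
    answer = completion[end + len("</think>"):].strip()
    return reasoning, answer


if __name__ == "__main__":
    raw = "<think>2 apples plus 3 apples makes 5 apples.</think>\nThe answer is 5."
    reasoning, answer = split_reasoning(raw)
    print("Reasoning:", reasoning)
    print("Answer:", answer)
```

Keeping the reasoning separate lets an app show it in a collapsible panel while passing only the final answer to downstream steps.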

Key Features:

- Step-by-step reasoning display showing complete thought process

- MIT license allowing unrestricted commercial use

- Multiple parameter sizes from 1.5B to 671B for different hardware configurations

- Advanced mathematical and coding problem-solving capabilities

- Distributed inference support across multiple Mac devices

- 4-bit quantization enabling efficient memory usage on modest hardware
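As a rough illustration of why 4-bit quantization matters, the sketch below estimates the memory needed for model weights alone at 16-bit and 4-bit precision for a few sizes within the 1.5B to 671B range listed above. It is back-of-the-envelope arithmetic under stated assumptions: it ignores the KV cache, activations, and runtime overhead, and real 4-bit formats also store scale values, so actual files come out somewhat larger.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Example sizes only; runtime overhead and quantization scales are ignored.
    sizes = [("7B distill", 7e9), ("70B distill", 70e9), ("671B full", 671e9)]
    for name, params in sizes:
        fp16 = weight_memory_gb(params, 16)
        q4 = weight_memory_gb(params, 4)
        print(f"{name:>12}: {fp16:7.1f} GB at 16-bit, {q4:6.1f} GB at 4-bit")
```

Cutting 16 bits per weight to 4 divides the weight footprint by four, which is what moves a 7B model from about 14 GB to about 3.5 GB.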

User Testimonials:

“This is easily the best release since GPT-4. It’s wild… thanks to this new DeepSeek-R1 model and how they trained it.” Reddit

“I’ve tested R1… underwhelmed after all the hype… on par with Sonnet/4o and more hit and miss.” Reddit

2. Llama 3.3 70B

Best for professional-grade text generation replacing expensive cloud AI services

Llama 3.3 70B delivers GPT-4 class performance on many tasks while running entirely on Mac hardware, and Meta reports that it performs similarly to its much larger Llama 3.1 405B on several benchmarks. Released in December 2024 under the Llama 3.3 Community License, this model provides enterprise-grade text generation, analysis, and reasoning capabilities without cloud dependencies. Its 128,000-token context length enables processing of entire documents, making it invaluable for professional writing, research, and business applications.

Users report performance that genuinely matches paid cloud services, with particular strength in technical documentation, creative writing, and complex analytical tasks. The model's consistency and reliability have made it a popular choice for professionals who need predictable, high-quality output without the ongoing costs and privacy concerns of cloud AI services.

Key Features:

- 70 billion parameters delivering GPT-4 level performance capabilities

- 128,000 token context length for processing lengthy documents

- Llama 3.3 Community License permitting commercial deployment (very large services need a separate license from Meta)

- Official support for eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai

- Superior instruction-following capabilities for complex multi-step tasks

- Optimized inference through Metal Performance Shaders and unified memory
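The 128,000-token context window carries its own memory cost on top of the weights, because every token in the context keeps a key and a value vector for every layer. The sketch below estimates that KV cache, assuming a Llama 3 70B-class configuration of 80 layers, 8 key/value heads (grouped-query attention), and a head dimension of 128, stored at 16-bit; these values are assumptions to check against the model's `config.json` before relying on the numbers.

```python
def kv_cache_bytes(n_tokens: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for n_tokens of context."""
    # Factor 2: one key vector and one value vector per KV head, per layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_tokens


if __name__ == "__main__":
    # Assumed Llama 3.x 70B-class config: 80 layers, 8 KV heads, head_dim 128, fp16.
    cfg = dict(n_layers=80, n_kv_heads=8, head_dim=128)
    per_token = kv_cache_bytes(1, **cfg)
    full = kv_cache_bytes(128_000, **cfg)
    print(f"{per_token / 1024:.0f} KiB per token")       # 320 KiB per token
    print(f"{full / 1e9:.1f} GB at 128,000 tokens")      # 41.9 GB at 128,000 tokens
```

In practice, most local sessions use far less than the full window, and runtimes only allocate the cache for the context length you configure.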

User Testimonials:

“It seems that Llama 3.3 is on-par with Qwen 2.5… especially very good at IFEval.” Reddit

“It has been confidently hallucinating… inventing URLs and facts.” Reddit

3. Mixtral 8x7B (Mistral AI)

Best for multilingual applications requiring GPT-3.5 performance with 6x faster inference

Mixtral 8x7B uses a sparse mixture-of-experts architecture, holding 46.7 billion parameters while actively using only 12.9 billion per token. This design from French startup Mistral AI gives each transformer layer eight distinct expert networks, with a router dynamically selecting two per token for specialized processing.
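The routing step can be sketched in a few lines of Python. This toy version uses a linear router and plain functions as experts (hypothetical stand-ins for Mixtral's feed-forward blocks): it keeps the two highest-scoring experts, softmax-normalizes their scores, and mixes only those two outputs, which is why each token touches far fewer parameters than the model holds in total.

```python
import math


def softmax(xs):
    m = max(xs)                        # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, experts, router_weights, top_k=2):
    """Send one token vector through the top_k highest-scoring experts.

    token: list of floats; experts: callables mapping a vector to a vector;
    router_weights: one weight vector per expert (a linear router, no bias).
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        # Only the chosen experts are evaluated for this token.
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Eight toy experts: expert i simply scales its input by (i + 1).
    experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
    # Toy router whose logit for expert i is i * token[0].
    router = [[float(i), 0.0] for i in range(8)]
    out, chosen = moe_forward([1.0, 0.0], experts, router)
    print(chosen)               # [7, 6]
    print(f"{out[0]:.3f}")      # 7.731
```

The six unchosen experts are never called for this token; in a real model they still occupy memory, which is why total parameter count, not active count, sets the RAM requirement.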

Founded by former Google DeepMind and Meta AI researchers, Mistral AI raised over $1 billion while maintaining strong open-source commitments. The company's Apache 2.0 licensed models challenge proprietary AI through transparent development, with Mixtral achieving superior multilingual performance across English, French, German, Spanish, and Italian.

Key Features:

- Sparse MoE architecture using only 12.9B of 46.7B parameters per token

- 32K token context window for long document processing

- Native fluency in 5+ languages with superior code generation

- Apache 2.0 license for unrestricted commercial use

- 30+ tokens/second on M3 Max through unified memory optimization

- Outperforms Llama 2 70B with 6x faster inference speed

User Testimonials:

“When Mistral 7B came out, people were claiming similar things. Then someone checked and it had Sally tests burned-in. Same thing is probably happening here.” Reddit

“Mixtral 8x7B has been continuously giving me the ‘Chat ram into a problem answering your request’ error, and won’t let me do new responses to my prompts. I’ve tried everything. Closing the app, restarting my device. Nothing works.” Reddit

4. Gemma 2

Best for developers wanting Google-backed lightweight models with blazing speed

Gemma 2 represents Google DeepMind's approach to efficient local AI, built on the same research foundation as the Gemini models but optimized for consumer hardware. Available in 2B, 9B, and 27B parameter variants, it delivers impressive performance while maintaining exceptional inference speed across different Mac configurations. The model's integration with Google's AI ecosystem and focus on responsible AI development give enterprise users confidence in deployment.

Users consistently highlight Gemma 2's exceptional speed, with the 27B model running efficiently on a single M-series Mac while delivering near-enterprise performance. Google's emphasis on safety and responsible AI ensures robust guardrails, making it suitable for customer-facing applications where content quality and appropriateness are paramount.

Key Features:

- Multiple parameter sizes (2B, 9B, 27B) optimized for different hardware capabilities

- Custom Gemma Terms of Use providing commercially friendly licensing

- Exceptional inference speed across all Apple Silicon configurations

- Built on Google's Gemini research foundation ensuring quality outputs

- Integration capabilities with Google's broader AI ecosystem

- Comprehensive responsible AI toolkit for safe deployment

User Testimonials:

“I mean it's clearly a very intelligent model but I don't have this issue with 70b models like llama3 or Miqu.” Reddit

“I 100% have experienced this and it threw me off because I really dislike its writing style because of it. It's on my list of "models that everyone else loves that I really dislike" solely because of this.” Reddit

5. Mistral 7B

Best for reliable, fast AI assistance on older Macs with limited RAM

Mistral 7B has earned community praise as the most efficient and reliable small language model, delivering exceptional performance with minimal hardware requirements.

Despite having only 7.3 billion parameters, it outperformed the larger Llama 2 13B on all the benchmarks Mistral AI reported at launch, while running smoothly on Macs with just 16GB of RAM.

The Apache 2.0 license and focus on reliability have made it the go-to choice for developers and professionals who need dependable AI assistance without expensive hardware upgrades.

Key Features:

- Highly efficient 7.3 billion parameter architecture optimizing speed over size

- Apache 2.0 license providing complete commercial use freedom

- Superior tokens-per-second performance per gigabyte of RAM consumed

- Sliding window attention mechanism improving inference efficiency

- Exceptional reliability with minimal hallucination compared to competitors

- Strong multilingual support particularly for European languages
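Sliding window attention limits how far back each token looks within a single layer. The sketch below builds the boolean attention mask for a toy sequence; the Mistral 7B paper describes a window of 4,096 tokens, and because layers are stacked, information can still flow further back than one window. The small sizes here are purely illustrative.

```python
from __future__ import annotations


def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and limited to the most recent `window` positions.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


if __name__ == "__main__":
    # Toy sizes for readability; the paper's window is 4,096 tokens.
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))
    # x.....
    # xx....
    # xxx...
    # .xxx..
    # ..xxx.
    # ...xxx
```

Because each position only needs the last `window` keys, a runtime can keep a fixed-size rolling KV cache instead of one that grows with the whole sequence.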

User Testimonials:

“There are simply no single LLM good at everything, but Mistral-7B is as close as you can get to this title.” Reddit

“It seems like a good 7b but it's not as good as 13b and considering everyone can run 13b what exactly is the point?.” Reddit

6. Phi-3 (Microsoft)

Best for achieving GPT-3.5 level performance on entry-level Macs with minimal resources

Phi-3 represents Microsoft's breakthrough in small language models, delivering GPT-3.5 class performance from just 3.8 billion parameters. The model's exceptional efficiency comes from training on carefully curated "textbook-quality" synthetic data rather than massive web crawls, proving that data quality trumps quantity in AI development.

Microsoft Research designed Phi-3 specifically for edge deployment, offering multiple variants (Mini, Small, Medium) with context lengths from 4K to 128K tokens. The model runs exceptionally well on Mac through native MLX framework support and Ollama integration, leveraging Metal Performance Shaders for optimal Apple Silicon performance.

Key Features:

- 3.8B parameters (Phi-3-mini) with benchmark scores comparable to GPT-3.5

- Multiple context variants including 128K token support

- Native MLX and Metal Performance Shaders optimization

- MIT license enabling unrestricted commercial deployment

- 2-bit to 4-bit quantization for memory-constrained devices

- Multimodal capabilities through Phi-3-Vision variant

User Testimonials:

“Microsoft just dropped an update on Phi-3, their series of small models (1.3B to 7B params) that are now performing on par with GPT-3.5 in a lot of benchmarks.” Reddit

“I need to experiment with phi 3 if it is really that good with rag. Having a low end laptop doesn't help that I only get 5-7 t/s on 7b models so hearing that phi-3 can do rag well is nice since I get extremely good t/s ( around 40/45 t/s). Did anyone experiment with how well it handles tool calling? I'm more interested in that.” Reddit

7. Solar 10.7B (Upstage)

Best for enterprise document processing with superior performance-to-size ratio

Solar 10.7B introduced Depth Up-Scaling, a scaling method that lets a 10.7 billion parameter model outperform alternatives of up to 30 billion parameters. Upstage, the South Korean startup behind it, built the model by integrating Mistral 7B weights into upscaled Llama 2 architecture layers, followed by continued pretraining on curated datasets.

Solar 10.7B topped HuggingFace's Open LLM Leaderboard at release, with its instruction-tuned version achieving a 74.2 average score that surpassed GPT-3.5, Llama 2, and Mixtral 8x7B. The company's enterprise focus shows in Solar Pro's exceptional HTML/Markdown parsing capabilities, crucial for document processing workflows in finance, healthcare, and legal sectors.
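Depth Up-Scaling is mostly layer bookkeeping, which the sketch below shows on layer names instead of real weights. Following our reading of the SOLAR paper, it takes a 32-layer base, drops the last 8 layers from one copy and the first 8 from a second copy, and stacks what remains into a 48-layer model; the continued pretraining that recovers quality afterwards is not shown, and `depth_up_scale` is a hypothetical helper name.

```python
def depth_up_scale(base_layers: list, m: int) -> list:
    """Layer bookkeeping for Depth Up-Scaling (weights omitted).

    Copy A keeps all but its last m layers, copy B keeps all but its first m,
    and the two are stacked, giving 2 * (n - m) layers.
    """
    n = len(base_layers)
    copy_a = base_layers[: n - m]
    copy_b = base_layers[m:]
    return copy_a + copy_b


if __name__ == "__main__":
    base = [f"L{i}" for i in range(32)]   # a 32-layer base, as with Mistral 7B
    scaled = depth_up_scale(base, m=8)
    print(len(scaled))        # 48
    print(scaled[22:26])      # ['L22', 'L23', 'L8', 'L9']
```

The seam in the middle (layer 23 followed by a copy of layer 8) is the discontinuity that continued pretraining has to smooth over.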

Key Features:

- Revolutionary Depth Up-Scaling architecture innovation

- 74.2 HuggingFace leaderboard score beating GPT-3.5

- Superior HTML/Markdown parsing for document AI

- Apache 2.0 license on the base model for enterprise deployment (the Instruct version uses CC BY-NC 4.0)

- Solar Pro 22B delivers 70B+ performance on single GPU

- 64% improvement in Korean/Japanese language tasks

User Testimonials:

“Solar has beaten Mixtral for me in every use case… faster, accurate to its prompting.” Reddit

“Deci LM and Solar 10.7B struggled to do both [format and content].” Reddit

8. Qwen 2.5 (32B)

Best for multilingual applications and international business use cases

Qwen 2.5 stands as the premier multilingual open source model, handling over 29 languages with remarkable fluency and cultural awareness. Developed by Alibaba Cloud and trained on 18 trillion tokens, the 32B parameter variant strikes an optimal balance between capability and hardware requirements.

Its exceptional non-English language performance and strong reasoning capabilities make it invaluable for global businesses, international development teams, and users working in multilingual environments.

Users consistently report that Qwen 2.5's multilingual capabilities go beyond simple translation, showing genuine understanding of cultural nuances and context-appropriate responses across different languages and regions.

Key Features:

- Outstanding multilingual support covering 29+ languages with cultural awareness

- 32 billion parameters providing strong performance while remaining hardware-accessible

- Apache 2.0 license enabling unrestricted commercial deployment

- Superior non-English language performance exceeding Western-focused models

- Strong mathematical reasoning and coding capabilities across languages

- Specialized model variants optimized for different use cases and languages

User Testimonials:

“Know your tools. Qwen works well at what it does well like every other llm..” Reddit

“Do NOT trust it to do ANY actual work. It will try to convince you that it can pack the information using protobuf schemas and efficient algorithms.... buuuuuuuut its next session can't decode the result.” Reddit

9. OpenHermes 2.5 (Teknium/Nous Research)

Best for ChatGPT-like conversational AI with superior instruction-following capabilities

OpenHermes 2.5 demonstrates how thoughtful fine-tuning turns a base model into exceptional conversational AI. Created by Teknium at Nous Research, this Mistral 7B fine-tune achieved remarkable performance through training on 1 million samples, primarily GPT-4 generated, using the structured ChatML format.

The model incorporates 7-14% code instruction data, boosting HumanEval scores from 43% to 50.7% while maintaining conversational excellence. Teknium's work at Nous Research, supported by a16z and GlaiveAI sponsorship, exemplifies open-source collaboration in advancing accessible AI technology.
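ChatML wraps each turn in `<|im_start|>` and `<|im_end|>` markers with the role name on the first line. The sketch below renders a message list in that layout; `to_chatml` is a hypothetical helper, and in practice runtimes such as Ollama or a tokenizer's built-in chat template usually apply the format for you.

```python
from __future__ import annotations


def to_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render [{'role': ..., 'content': ...}, ...] as a ChatML prompt string."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Open an assistant turn so the model writes the next reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = to_chatml([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Summarize ChatML in one sentence."},
    ])
    print(prompt)
```

Sending a model a prompt in a different template than it was trained on is a common cause of stray control tags appearing in replies.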

Key Features:

- Fine-tuned on 1M high-quality GPT-4 generated samples

- Native ChatML format for structured multi-turn conversations

- HumanEval score improved to 50.7% from OpenHermes 2's 43%

- Efficient Q4_K_M quantization requiring only 4GB RAM

- Apache 2.0 license enabling broad commercial deployment

- Superior performance on TruthfulQA and AGIEval benchmarks

User Testimonials:

“OpenHermes 2.5 is on another level conversationally.” Reddit

“Open-Hermes is only better in a strange edge case; Mixtral 8x7B is more rounded.” Reddit

10. Vicuna 13B v1.5

Best for ChatGPT-like conversational AI with enhanced reasoning capabilities

Vicuna 13B v1.5 emerged from UC Berkeley's research as one of the most capable open conversational AI models, fine-tuned from Llama 2 with conversations from ShareGPT. The model demonstrates impressive capabilities in multi-turn conversations, creative writing, and complex reasoning tasks while maintaining a natural, helpful personality.

Its 13B parameter size strikes a balance between capability and accessibility, running well on mid-range Mac configurations.

Users frequently compare Vicuna favorably to ChatGPT for conversational applications, noting its ability to maintain context over long discussions and provide nuanced responses to complex queries.

Key Features:

- Fine-tuned on high-quality conversation data from ShareGPT for natural interactions

- 13 billion parameters providing strong reasoning while remaining hardware-accessible

- Excellent multi-turn conversation capabilities maintaining context effectively

- Superior performance in creative writing and complex analytical tasks

- Llama 2 Community License enabling broad commercial and research use

- Optimized for Apple Silicon through multiple Mac-compatible deployment frameworks

User Testimonials:

“Vicuna 13B… 90% the quality of ChatGPT.” Reddit

“Randomly produces a lot of tags like ‘[INST]’, ‘<<SYS>>’ in the responses.” Reddit

11. Zephyr 7B

Best for instruction-following tasks requiring precise, helpful responses

Zephyr 7B represents Hugging Face's approach to creating a highly capable instruction-following model through innovative training techniques. Built on Mistral 7B and fine-tuned using Direct Preference Optimization (DPO), it excels at understanding and executing complex instructions while maintaining helpfulness and accuracy.

The model's DPO training, on UltraFeedback preference data, focused on helpful responses, making it well suited to professional applications requiring reliable AI assistance; Hugging Face notes that it was not specifically aligned for safety.
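Direct Preference Optimization trains directly on pairs of preferred and rejected answers, without a separate reward model. The sketch below computes the standard DPO loss for a single pair from summed log-probabilities under the model being trained and a frozen reference copy; the `beta=0.1` default and the example numbers are illustrative assumptions, not Zephyr's training configuration.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is a summed log-probability of a full answer, under the
    model being trained (policy) or the frozen reference model (ref).
    """
    # How much more the policy favors each answer than the reference does.
    chosen_shift = policy_chosen - ref_chosen
    rejected_shift = policy_rejected - ref_rejected
    z = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(z)), written to avoid overflow for large |z|.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


if __name__ == "__main__":
    # Illustrative numbers only.
    print(dpo_loss(-12.0, -15.0, -13.0, -14.0))
```

The loss starts at log 2 when the policy matches the reference and falls as the policy raises the chosen answer's probability, relative to the reference, more than the rejected one's.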

Users appreciate Zephyr's consistent performance across different task types, from creative writing to technical analysis. The model demonstrates strong reasoning capabilities while maintaining the efficiency benefits of the 7B parameter size, making it accessible to users with modest hardware requirements.

Key Features:

- Built on Mistral 7B foundation with advanced DPO fine-tuning techniques

- Exceptional instruction-following capabilities across diverse task categories

- Preference-tuned for helpful responses (not specifically safety-aligned, according to Hugging Face)

- 7 billion parameters ensuring efficient operation on standard Mac configurations

- MIT license providing complete freedom for commercial applications

- Strong performance in reasoning, analysis, and creative tasks

User Testimonials:

“I am enjoying Zephyr a lot . It working better than mistral-orca in document writing tasks. I haven't test it against mistral 7b-dolphain2.1 tho.” Reddit

“I prefer mistral-7b-openorca over zephyr-7b-alpha and dolphin-2.1-mistral-7b.” Reddit

12. LLaVA (Large Language and Vision Assistant)

Best for multimodal tasks combining vision and text understanding

LLaVA represents a breakthrough in multimodal AI, seamlessly combining large language model capabilities with sophisticated image understanding. Available in 7B, 13B, and 34B variants, it can analyze images, read text within pictures, answer visual questions, and generate detailed descriptions of complex scenes.

The model's ability to understand both visual and textual information makes it invaluable for document analysis, accessibility applications, and content creation workflows.

Users consistently praise LLaVA's ability to understand context within images and provide thoughtful, accurate descriptions. The model excels at tasks like OCR, visual question answering, and image-based reasoning, with performance that approaches proprietary multimodal systems while running entirely on local Mac hardware.

Key Features:

- Advanced multimodal architecture combining vision and language understanding

- Multiple parameter sizes (7B, 13B, 34B) for different performance requirements

- Higher-resolution image input in LLaVA-NeXT, with up to 4x more pixels than earlier versions

- Strong OCR capabilities for text extraction from images

- Apache 2.0 code license (model weights also follow the terms of the underlying base language model)

- Optimized for Apple Silicon through multiple Mac-compatible frameworks

User Testimonials:

“I have llava:13b locally and gotta tell you, i wouldn't use it at production. At best, is very unreliable. I was hoping what i lack is the number of parameters (maybe i'll get a card that can do llava:34b). I assume there are models i can pay to use for production, but would be nice to have an open-source model that can read/analyze images reliably.” Reddit

“If you can afford a 70B model, then Qwen2.5-VL-72B-Instruct might be the best choice, which should be significantly better than Molmo-72B or Llama3.2.” Reddit

13. Stable Diffusion (Core ML)

Best for professional image generation optimized specifically for Apple devices

Apple's official Core ML implementation of Stable Diffusion represents the best of local image generation on Mac hardware. Optimized specifically for Apple Silicon with Neural Engine integration, it delivers up to 4x faster performance than standard Python implementations while maintaining excellent image quality.

The native macOS integration and support for iPhone and iPad deployment make it ideal for creative professionals and developers building image generation applications.

The Core ML optimization leverages Apple's complete AI stack, from the Neural Engine to Metal Performance Shaders, providing energy-efficient image generation that is easier on laptop batteries.

Key Features:

- Official Apple implementation optimized for complete Apple ecosystem

- Up to 4x performance improvement over standard Python implementations

- Neural Engine utilization for efficient, low-power image generation

- Swift and Python packages supporting diverse development workflows

- 6-bit palettization reducing memory requirements significantly

- Cross-platform deployment supporting iPhone, iPad, and Mac
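Palettization stores each weight as a short index into a small lookup table of shared values; 6 bits allows 64 entries. The pure-Python sketch below shows the idea with a simple one-dimensional k-means. It is a toy illustration under that assumption, not Apple's implementation, which works on real tensors through its own tooling.

```python
from __future__ import annotations


def palettize(weights: list[float], n_bits: int, iters: int = 20):
    """Toy palettization: 1-D k-means into a lookup table of at most 2**n_bits values.

    Returns (lut, indices); each weight is approximated by lut[indices[i]].
    """
    k = min(2 ** n_bits, len(set(weights)))
    ordered = sorted(weights)
    # Start centroids at evenly spaced quantiles of the weight distribution.
    lut = [ordered[(2 * c + 1) * len(ordered) // (2 * k)] for c in range(k)]

    def nearest(w: float) -> int:
        return min(range(k), key=lambda c: abs(w - lut[c]))

    for _ in range(iters):
        indices = [nearest(w) for w in weights]
        # Move each centroid to the mean of the weights assigned to it.
        for c in range(k):
            members = [w for w, i in zip(weights, indices) if i == c]
            if members:
                lut[c] = sum(members) / len(members)
    return lut, [nearest(w) for w in weights]


if __name__ == "__main__":
    weights = [0.12, -0.40, 0.05, 0.33, -0.38, 0.10, 0.31, -0.02]
    lut, indices = palettize(weights, n_bits=2)   # 4-entry table for a tiny demo
    print("lookup table:", [round(v, 3) for v in lut])
    print("indices:", indices)
    print("reconstructed:", [round(lut[i], 3) for i in indices])
```

With 6-bit indices replacing 16-bit floats, weight storage shrinks by roughly 16/6 ≈ 2.7x, ignoring the small lookup table itself.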

User Testimonials:

“It does work, search Reddit for Zluda and you’ll find posts details or with links to how to install it. It’s fairly easy to install - install a set of libraries , add a set of optimised libraries for your particular gpu , install the ui - run it.” Reddit

“I've had it working before, ran it on manjaro. But heads up, SD is super slow on that card. I don't know how much there's been in the way of efficiency improvements since the A1111 days, so maybe it's not quite as terrible anymore.” Reddit

14. Whisper.cpp

Best for fast, offline speech recognition and transcription

Whisper.cpp brings OpenAI's powerful speech recognition model to Apple Silicon with remarkable performance optimizations. This community-driven C/C++ implementation delivers fast transcription while supporting 99 languages completely offline.

The model's ability to handle various audio qualities, accents, and background noise makes it essential for content creators, journalists, and accessibility applications requiring reliable speech-to-text conversion.

The multiple model sizes (from tiny to large) allow optimization for different use cases, from real-time transcription on modest hardware to high-accuracy processing on powerful Mac configurations.

Key Features:

- C/C++ implementation optimized specifically for Apple Silicon performance

- Support for 99 languages with high accuracy across diverse accents

- Multiple model sizes from tiny (about 75MB) to large (about 3GB) for different requirements

- Faster-than-real-time transcription, with processing taking roughly one-tenth of the audio's duration

- Complete offline operation ensuring privacy for sensitive audio content

- Metal acceleration leveraging Mac GPU capabilities for faster processing
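Speed figures such as the one-tenth ratio in the list above are usually expressed as a real-time factor (RTF): processing time divided by audio length, where anything below 1.0 is faster than real time. The sketch below applies it to the numbers in the first Reddit testimonial that follows (40 seconds for a 2-minute file); `real_time_factor` is a hypothetical helper name.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Processing time divided by audio length; below 1.0 is faster than real time."""
    return processing_seconds / audio_seconds


if __name__ == "__main__":
    # Figures from the Reddit testimonial below: 40 s to process 2 minutes of audio.
    rtf = real_time_factor(40, 120)
    print(f"RTF: {rtf:.2f}")            # RTF: 0.33
    # At a ratio of roughly one-tenth, the same file would take about:
    print(f"{0.1 * 120:.0f} s")         # 12 s
```

Real-time factors depend heavily on model size and hardware, so it is worth measuring on your own Mac with the model size you plan to use.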

User Testimonials:

“I’ve been using Whisper for transcription and love its accuracy, but speed is an issue for me. It takes around 40 seconds to process a 2-minute audio file on my setup. I’ve read about models (sometimes dubbed ‘tree-like models’) that can achieve this in just 5 seconds.” Reddit

“I'll give this a shot. I struggled with whisper already and then started to use Vosk, but it's not working great.” Reddit

15. RWKV (BlinkDL)

Best for memory-efficient inference with theoretically infinite context length

RWKV eliminates transformer attention mechanisms entirely while maintaining competitive language modeling performance. Created by BlinkDL (Bo Peng) and now a Linux Foundation AI project, this radical architecture combines RNN efficiency with transformer-quality results through innovative Receptance Weighted Key Value operations.

The breakthrough replaces quadratic attention complexity with linear time-decay channels, operating in dual modes: "GPT mode" for parallel training and "RNN mode" for sequential generation.

This enables transformer-quality performance with O(T) time complexity and O(1) space complexity, requiring no KV cache and dramatically reducing memory requirements.
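The recurrence can be illustrated with a simplified single-channel version of the RWKV-4 style WKV operator. The sketch below computes the same outputs two ways: as an RNN that carries only two running sums between tokens, and as an explicit sum over all earlier tokens. It omits the receptance gate, per-channel parameters, and the numerical-stability tricks real kernels use, and newer RWKV versions (such as RWKV-7, mentioned below) use a different, matrix-valued state.

```python
import math


def wkv_recurrent(ks, vs, w, u):
    """Single-channel WKV computed as an RNN: O(T) time, O(1) state."""
    a = 0.0                      # decayed sum of exp(k_i) * v_i over past tokens
    b = 0.0                      # decayed sum of exp(k_i) over past tokens
    decay = math.exp(-w)         # w > 0: older tokens fade geometrically
    out = []
    for k, v in zip(ks, vs):
        bonus = math.exp(u + k)  # separate weight for the current token
        out.append((a + bonus * v) / (b + bonus))
        a = decay * a + math.exp(k) * v
        b = decay * b + math.exp(k)
    return out


def wkv_direct(ks, vs, w, u):
    """The same outputs via an explicit sum over all earlier tokens (O(T^2))."""
    out = []
    for t in range(len(ks)):
        num = math.exp(u + ks[t]) * vs[t]
        den = math.exp(u + ks[t])
        for i in range(t):
            weight = math.exp(-(t - 1 - i) * w + ks[i])
            num += weight * vs[i]
            den += weight
        out.append(num / den)
    return out


if __name__ == "__main__":
    ks, vs = [0.1, -0.3, 0.5, 0.2], [1.0, 2.0, -1.0, 0.5]
    rnn = wkv_recurrent(ks, vs, w=0.5, u=0.3)
    full = wkv_direct(ks, vs, w=0.5, u=0.3)
    print(all(abs(x - y) < 1e-12 for x, y in zip(rnn, full)))   # True
```

The recurrent form only ever stores `a` and `b`, so memory stays constant no matter how many tokens have been processed, which is the property that removes the need for a KV cache.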

Key Features:

- Attention-free architecture with linear time/constant space complexity

- No KV cache required, so context length is not bounded by cache memory (theoretically infinite)

- Dual-mode operation for training (parallel) and inference (sequential)

- 3B model runs in just 2GB RAM with excellent performance

- Apache 2.0 license with Linux Foundation backing

- Hardware-friendly matrix-vector operations instead of matrix-matrix

User Testimonials:

“RWKV-7 ‘Goose’ 0.4B trained w/ ctx4k automatically extrapolates to ctx32k+, and perfectly solves NIAH ctx16k 🤯 100% RNN and attention-free. Only trained on the Pile. No finetuning. Replicable training runs. tested by our community.” Hugging Face

“This model does not have fully optimized kernels, hence the lack of speed comparison. Optimized kernels takes time!” Redlib

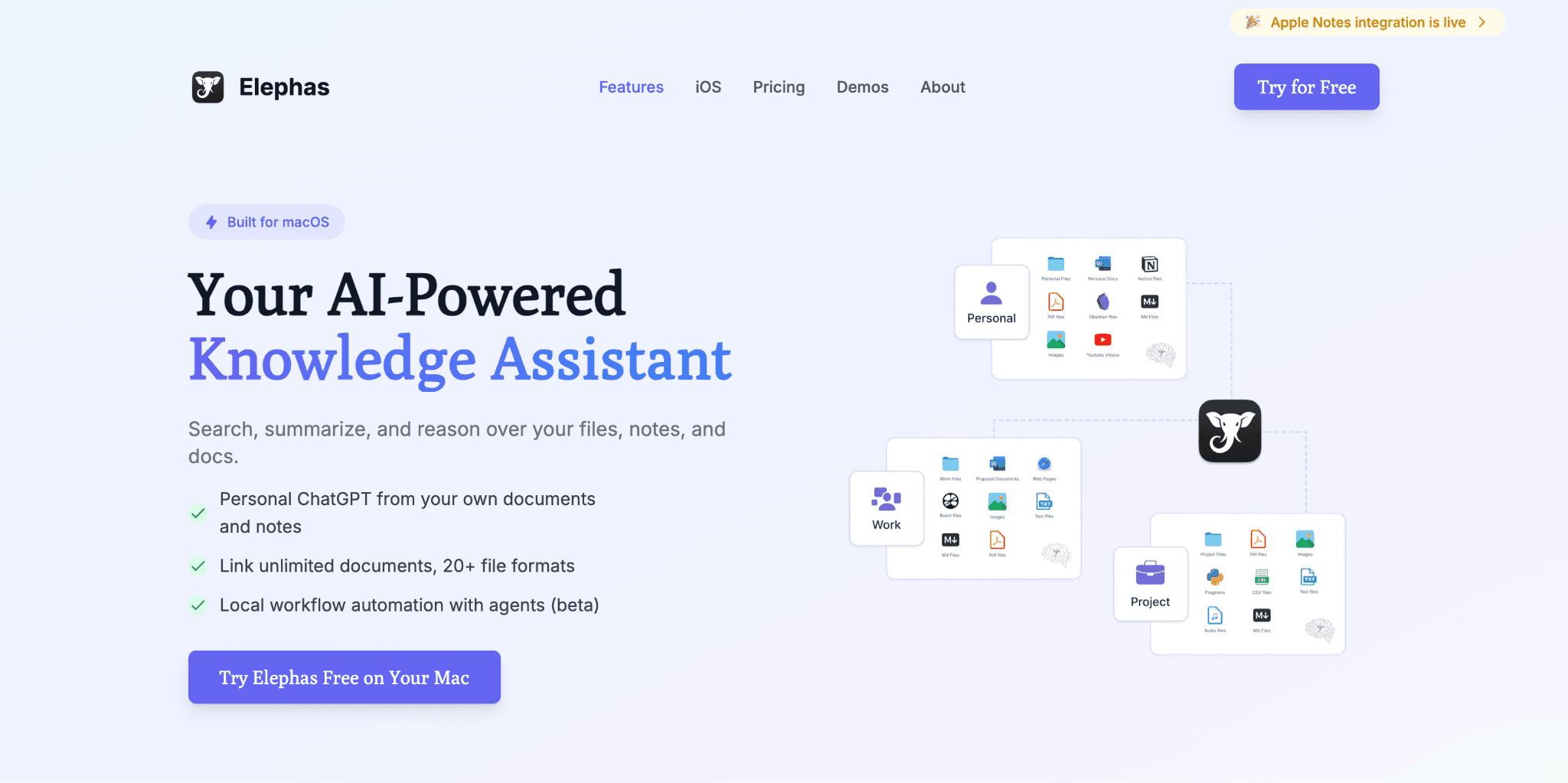

Getting the Most Out of Open Source AI Models

Elephas is a Mac knowledge assistant that helps you work with your documents and information in new ways. It lets you upload thousands of files, YouTube videos, and webpages to create your own knowledge base that you can chat with and ask questions about, and it also offers writing features and integrations with note-taking tools like Notion and Apple Notes.

Unlike paid AI services such as Claude and ChatGPT, which limit how much you can upload, Elephas lets you add as many documents as you want. You can create reports, get summaries, and even set up automated tasks that save you time.

Elephas can even work offline using local, open source AI models such as Gemma, Mistral, Llama, and DeepSeek, and it can also run on AI providers such as ChatGPT, Claude, and Gemini.

Key Features

- Super Brain: Upload all your documents to create a personal knowledge base that you can chat with, ask questions of, and use to generate reports about your files.

- Multiple AI Models: Choose from different AI providers from Gemini to Claude based on your preference.

- Automation Workflows: Set up automated tasks that can summarize documents, create diagrams, fill PDF forms, and export content in different formats.

- Note Integration: Works smoothly with Apple Notes, Notion, Obsidian, and other note-taking apps to sync your information.

- Writing Tools: Rewrite content in different tones, fix grammar, continue writing, and create smart replies for emails and messages.

- Offline Mode: Using local or open source AI models, you can run Elephas completely offline to keep your data private and secure on your Mac.

Conclusion

From small models like Phi-3 that work on basic computers to large models like Llama 3.3 70B that match expensive paid services, there is something for everyone.

These models let you work with AI without paying monthly fees or worrying about your data being sent to other companies. You can choose models for writing, different languages, understanding images, or converting speech to text. The best part is that all of these models can run completely offline on your Mac, as long as it has enough memory for the model size you choose.

Whether you need help with complex reasoning like DeepSeek R1, fast multilingual support like Mixtral, or reliable everyday assistance like Mistral 7B, open source AI gives you the freedom to pick what works best for your needs.

Also, pairing open source models with a tool like Elephas gives you extra features, such as uploading your documents and creating automated workflows that save you time every day.
