Deep Dive: OpenAI's New Rules for Teen Safety on ChatGPT

OpenAI just made a big decision that will change how millions of people use ChatGPT. The company announced new teen safety rules that create completely different experiences for teenagers and adults. This move comes after serious concerns about AI chatbot safety for kids and a lawsuit involving a teenager's tragic death.

The new ChatGPT teen safety measures tackle a tough problem that many tech companies face today: how do you protect young users while keeping AI helpful for adults? OpenAI's solution involves age prediction technology, strict content filtering for teens, and parental controls that let families manage how their teenager uses the chatbot.

But not everyone is happy with these changes. The announcement sparked heated debates about privacy, government surveillance, and who should decide what's safe for teenagers. Some parents love the new teen AI protection features, while others feel the company is overstepping its authority.

From emergency intervention systems to ID verification requirements, these changes will affect how people interact with AI chatbots.

Let's get into it.

Executive Summary

OpenAI announced major changes to ChatGPT that create separate experiences for teenagers and adults. The company will use age prediction technology and ID verification to identify users under 18, who will then receive a restricted version of the AI chatbot with enhanced safety measures.

Key Changes:

- Teen restrictions: No flirtatious conversations, complete blocks on self-harm discussions, graphic content filtering, and active mental health monitoring

- Adult freedom: Fewer content restrictions, private conversations, and full AI capabilities without parental oversight

- Parental controls: Account linking, usage blackout hours, feature management, response guidance, and crisis alerts

The Challenge: OpenAI struggled to balance three conflicting principles - privacy, freedom, and teen safety online. Their solution prioritizes safety over privacy for users under 18 while preserving adult autonomy.

Emergency Features: The system monitors teen conversations for signs of crisis and can contact parents or authorities when detecting suicidal thoughts or acute distress.

Public Response: Mixed reactions from users, with some supporting teen AI protection measures while others raised concerns about privacy, surveillance, and parental authority.

Impact: These ChatGPT teen safety rules may set a new industry standard and will likely influence how other tech companies approach AI chatbot safety for kids. The changes launch at the end of September 2025.

What Are OpenAI's New Safety Rules All About?

OpenAI recently announced new safety rules that will change how ChatGPT works for teenagers. The company is creating different experiences for young users compared to adults. This move comes after growing concerns about how safe AI chatbots are for kids and teens.

The new ChatGPT teen safety measures aim to create a safer space for people under 18. OpenAI wants to balance two important goals. First, they want to protect young users from potential risks. Second, they want to keep the AI helpful and useful for adult users who need fewer restrictions.

These changes will affect how the chatbot responds to teenagers in several ways:

- Age-specific responses: The AI will give different answers based on the user's age

- Enhanced safety filters: Stronger content controls for younger users

- Parental oversight options: New OpenAI parental controls for families

- Mental health safeguards: Better protection for teens who might be struggling

The company plans to use ChatGPT age verification to identify teenage users. This system will help the AI know when it's talking to someone under 18. Once identified, the chatbot will follow stricter safety guidelines.

However, OpenAI acknowledges that age prediction is not 100% accurate, so it said that in some cases or countries it may also ask users for an ID to verify their age.

The Big Problem OpenAI Had to Solve

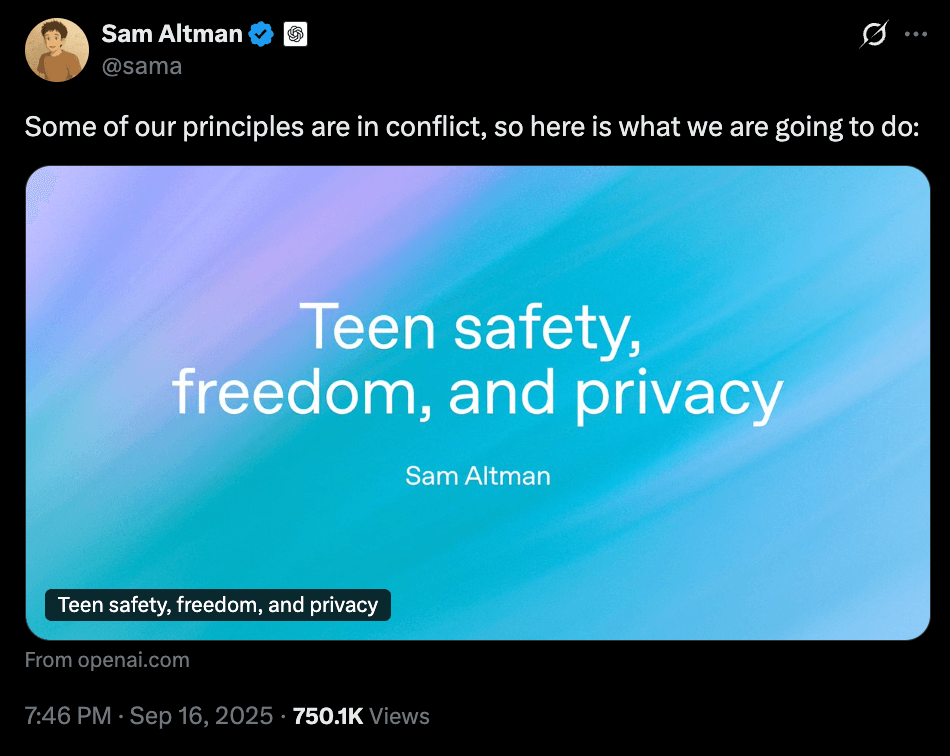

OpenAI faced a tough challenge that many tech companies struggle with today. They had to balance three important values that often work against each other. This problem became bigger as more teenagers started using ChatGPT and concerns about AI chatbot safety for kids grew.

The company discovered that its core principles were pulling in different directions. Privacy means keeping user conversations secret and not collecting personal information. Freedom means letting people ask anything and get helpful answers without too many limits. Teen safety online means protecting young users from harmful content and situations.

Here's why these three things create problems when put together:

- Privacy vs Safety: To protect teens, OpenAI needs to know who is underage, but this requires collecting age information

- Freedom vs Protection: Adult users want fewer restrictions, while teens need stronger AI safety protocols

- Monitoring vs Privacy: Watching conversations for dangerous content means looking at chats that would otherwise be private

- Detection vs Anonymity: Finding teens automatically means the system must analyze user behavior and language

The biggest challenge comes when trying to create AI protection measures for minors while keeping adult conversations private and unrestricted. If OpenAI makes the system too safe, adults lose freedom. If they make it too private, they cannot protect teenagers properly.

This conflict forced OpenAI to make hard choices about chatbot safety guidelines. They had to decide which principle matters most in different situations. The result is their new approach to teen AI protection that tries to balance all three needs.

How OpenAI Treats Adult Users

OpenAI believes that adults should have a different experience with ChatGPT compared to teenagers. The company wants to give grown-up users more freedom while still maintaining basic AI safety protocols. This approach recognizes that adults can make their own choices about AI usage.

Adult users get several advantages that younger users will not have. They can explore topics more freely without heavy content filtering. The AI chatbot responds with fewer restrictions and provides more detailed information on sensitive subjects. Adults also get better privacy protection for their conversations.

The key differences for adult users include:

- Fewer content restrictions: Adults can discuss complex topics without extra safety barriers

- Private conversations: Less monitoring and data collection compared to teen accounts

- Full AI capabilities: Access to all features without the age-based response restrictions applied to teen accounts

- Personal responsibility: Users make their own choices about what to ask and discuss

- Minimal oversight: No parental controls or external monitoring systems

OpenAI stated that treating adults like adults means giving them the respect to handle AI interactions responsibly. This philosophy separates their approach from other platforms that apply the same rules to everyone.

“For example, the default behavior of our model will not lead to much flirtatious talk, but if an adult user asks for it, they should get it. For a much more difficult example, the model by default should not provide instructions about how to commit suicide, but if an adult user is asking for help writing a fictional story that depicts a suicide, the model should help with that request.”

Special Rules Just for Teenagers

OpenAI announced specific safety measures that create a completely different ChatGPT experience for users under 18. These teen AI protection rules go far beyond simple content filtering and include active monitoring systems with real-world consequences.

OpenAI CEO Sam Altman stated:

"ChatGPT will be trained not to do the above-mentioned flirtatious talk if asked, or engage in discussions about suicide or self-harm even in a creative writing setting."

This means teenagers cannot access certain conversations that adults can freely have.

The company is building automated age-prediction technology to identify teen users. If there is doubt about a user's age, the system will "play it safe and default to the under-18 experience." In some cases or countries, OpenAI may also ask adults for ID verification to access the unrestricted version. OpenAI acknowledges the privacy cost but calls it a worthy tradeoff:

“In some cases or countries we may also ask for an ID; we know this is a privacy compromise for adults but believe it is a worthy tradeoff.”
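The "default to under-18 when in doubt" rule can be illustrated with a small sketch. This is not OpenAI's implementation, and the article does not describe how the prediction actually works. The `AgeSignal` fields, the `choose_experience` function, and the 0.9 confidence cutoff are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

ADULT_AGE = 18
# Hypothetical cutoff: how sure the predictor must be before treating a user as an adult.
MIN_ADULT_CONFIDENCE = 0.9


@dataclass
class AgeSignal:
    predicted_age: int                      # hypothetical output of an age-prediction model
    confidence: float                       # 0.0 to 1.0, how sure the model is
    id_verified_age: Optional[int] = None   # set only if the user verified with an ID


def choose_experience(signal: AgeSignal) -> str:
    """Return "adult" or "under_18" for a user (illustrative logic only)."""
    # Assumption: a verified ID, when present, overrides the prediction.
    if signal.id_verified_age is not None:
        return "adult" if signal.id_verified_age >= ADULT_AGE else "under_18"
    # Without an ID, require a confident adult prediction.
    if signal.predicted_age >= ADULT_AGE and signal.confidence >= MIN_ADULT_CONFIDENCE:
        return "adult"
    # Any doubt: play it safe and default to the under-18 experience.
    return "under_18"
```

In this sketch, an adult whose prediction is uncertain lands in the teen experience until they verify with an ID, which is the privacy tradeoff the quote above describes.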

Specific restrictions for teenage users include:

- No flirtatious conversations: The AI will refuse any romantic or sexual chat requests

- Complete self-harm blocks: No discussions about suicide or self-harm, even for creative writing projects

- Graphic content filtering: All sexual and violent content gets automatically blocked

- Emergency intervention system: The AI monitors for signs of crisis and can contact authorities

The most serious safety feature involves mental health monitoring. When the system detects suicidal thoughts in an under-18 user, OpenAI will "attempt to contact the users' parents and if unable, will contact the authorities in case of imminent harm."
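The escalation order in that statement (parents first, authorities only if parents cannot be reached and harm is imminent) can be written out as a simple decision function. This is a sketch of the stated policy, not OpenAI's system. The `Action` enum, the `escalate` function, and its boolean inputs are hypothetical, and how the real system detects a crisis is not described in the article.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    NOTIFY_PARENTS = "notify_parents"
    CONTACT_AUTHORITIES = "contact_authorities"


def escalate(is_minor: bool, crisis_detected: bool,
             parents_reachable: bool, imminent_harm: bool) -> Action:
    """Map the quoted policy onto one decision (hypothetical inputs)."""
    # The policy described applies only to under-18 users in crisis.
    if not (is_minor and crisis_detected):
        return Action.NONE
    # First step: attempt to contact the parents.
    if parents_reachable:
        return Action.NOTIFY_PARENTS
    # Parents could not be reached: authorities only in case of imminent harm.
    if imminent_harm:
        return Action.CONTACT_AUTHORITIES
    # The announcement does not say what happens otherwise; this sketch does nothing more.
    return Action.NONE
```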

These ChatGPT teen safety features launch at the end of September 2025, with parental controls that let families manage how their teenager uses the chatbot.

New Tools for Parents

OpenAI announced new parental controls that launch at the end of September. These tools give parents direct oversight of their teenager's ChatGPT usage through linked accounts, usage limits, and alerts.

The upcoming controls will allow parents to "link their ChatGPT account with their teen's via email, set blackout hours for when their teen can't use the chatbot, manage which features to disable, guide how the chatbot responds and receive notifications if the teen is in acute distress."

Parents get several powerful management options:

- Account linking: Direct connection between parent and teen ChatGPT accounts for full oversight

- Usage blackout hours: Parents can completely block access during specific times like bedtime or school hours

- Feature control: Disable memory, chat history storage, and other advanced functions

- Response guidance: Shape how the AI talks to their teenager with custom behavior rules

- Crisis alerts: Immediate notifications when the system detects signs of mental distress

These parental notification features include alerts when teens show signs of "acute distress," with OpenAI potentially involving law enforcement if parents cannot be reached during emergencies.
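To make the controls listed above concrete, here is a minimal sketch of how linked-account settings and blackout hours could be modeled. It is purely illustrative: the `ParentalControls` class, its field names, and the default 22:00 to 07:00 window are assumptions, not OpenAI's actual settings or API.

```python
from dataclasses import dataclass, field
from datetime import time


@dataclass
class ParentalControls:
    parent_email: str                       # accounts are linked via email
    teen_email: str
    blackout_start: time = time(22, 0)      # hypothetical default window
    blackout_end: time = time(7, 0)
    disabled_features: set = field(default_factory=set)  # e.g. {"memory"}

    def in_blackout(self, now: time) -> bool:
        """True if the teen may not use the chatbot at the given time of day."""
        if self.blackout_start <= self.blackout_end:
            # Window within a single day, e.g. 09:00 to 15:00.
            return self.blackout_start <= now < self.blackout_end
        # Window wraps past midnight, e.g. 22:00 to 07:00.
        return now >= self.blackout_start or now < self.blackout_end

    def feature_allowed(self, name: str) -> bool:
        """True unless the parent has disabled the named feature."""
        return name not in self.disabled_features
```

The wrap-around branch matters because a bedtime window usually crosses midnight, so a plain "start <= now < end" comparison would never match.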

User Reactions to OpenAI's New Teen Safety Rules

Sam Altman's announcement about ChatGPT teen safety measures received mixed reactions from users across social media. The 750,000 views on his initial X (Twitter) post showed high public interest in these changes.

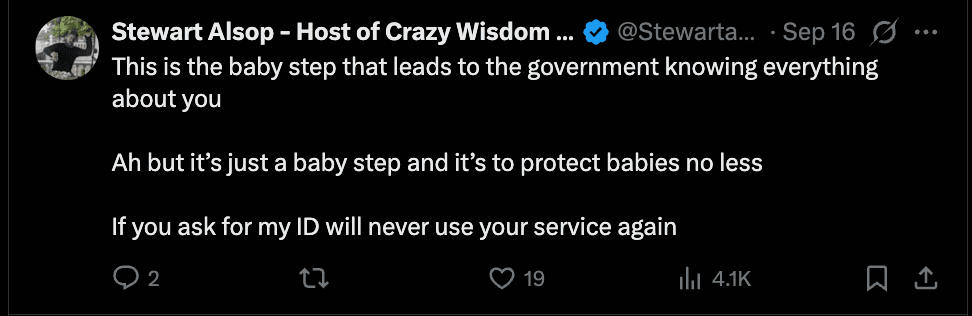

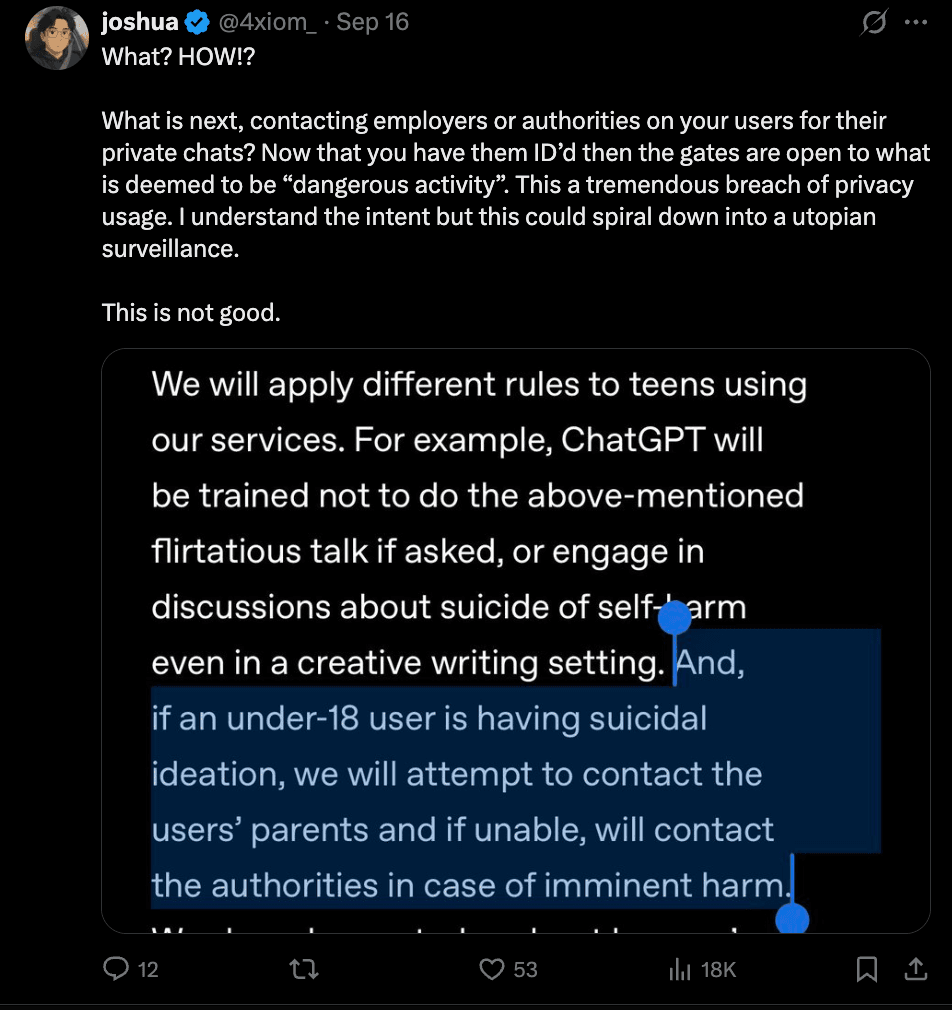

Many users expressed serious privacy concerns about the new system. One user worried that ID verification could lead to "utopian surveillance" and questioned what happens when OpenAI contacts authorities about private conversations.

Another user stated they would stop using the service entirely if asked for identification, calling it "the baby step that leads to the government knowing everything about you."

Parents had split opinions on the parental controls features. Some appreciated OpenAI's efforts to protect minors, with one user saying "protecting minors is crucial."

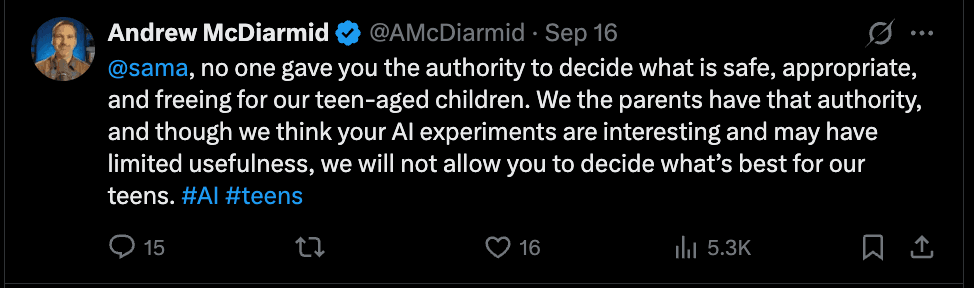

However, other parents pushed back against the company making decisions for their families. One parent wrote that "no one gave you the authority to decide what is safe, appropriate, and freeing for our teen-aged children."

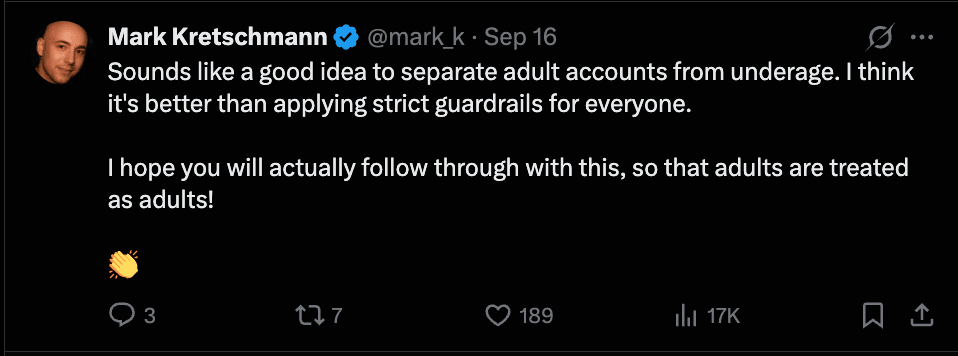

Several users supported separating adult and teen experiences. They felt this approach was better than applying strict rules to everyone.

However, others worried about false positives in the age prediction technology, with concerns about adults getting wrongly flagged and having authorities contacted over misunderstood conversations.
