OpenAI Drops GPT-4.1 Without a Safety Report — Why?

OpenAI has released GPT-4.1, a powerful new AI model with impressive capabilities, but something important is missing: a safety report. This unusual departure from industry practices comes amid revelations from the Financial Times that OpenAI has dramatically cut back on safety testing resources, reducing evaluation periods from months to mere days.

As AI models become more capable, this trend raises serious questions about the balance between innovation and responsibility. What does it mean when companies rush sophisticated AI systems to market with minimal safety evaluation? How might these decisions affect everyday users who increasingly rely on these technologies?

We'll explore OpenAI's explanation for skipping the safety report, examine the concerning shift in testing practices across the industry, and look at what users can do to stay informed about the AI tools they're using.

Let's get into it.

The New GPT-4.1 Release

OpenAI has just unveiled GPT-4.1, its latest AI model, which brings major improvements in several key areas. Released on Monday, April 14, 2025, the new model has quickly caught the attention of AI enthusiasts and developers alike.

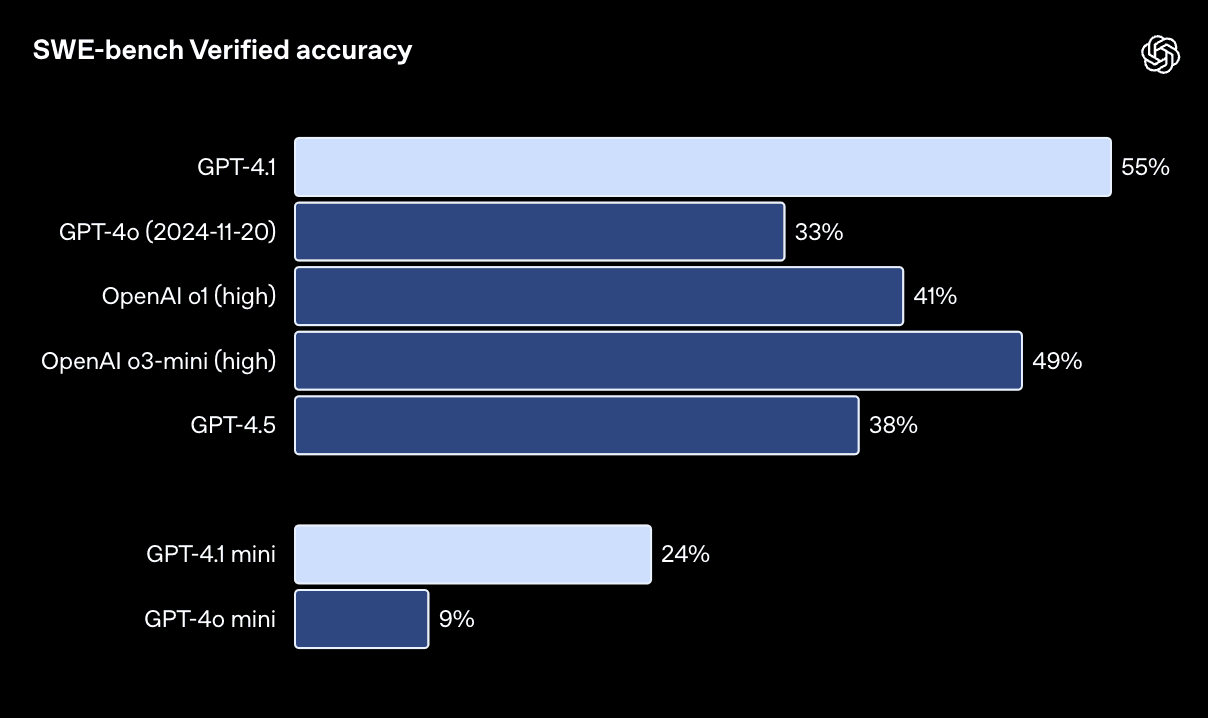

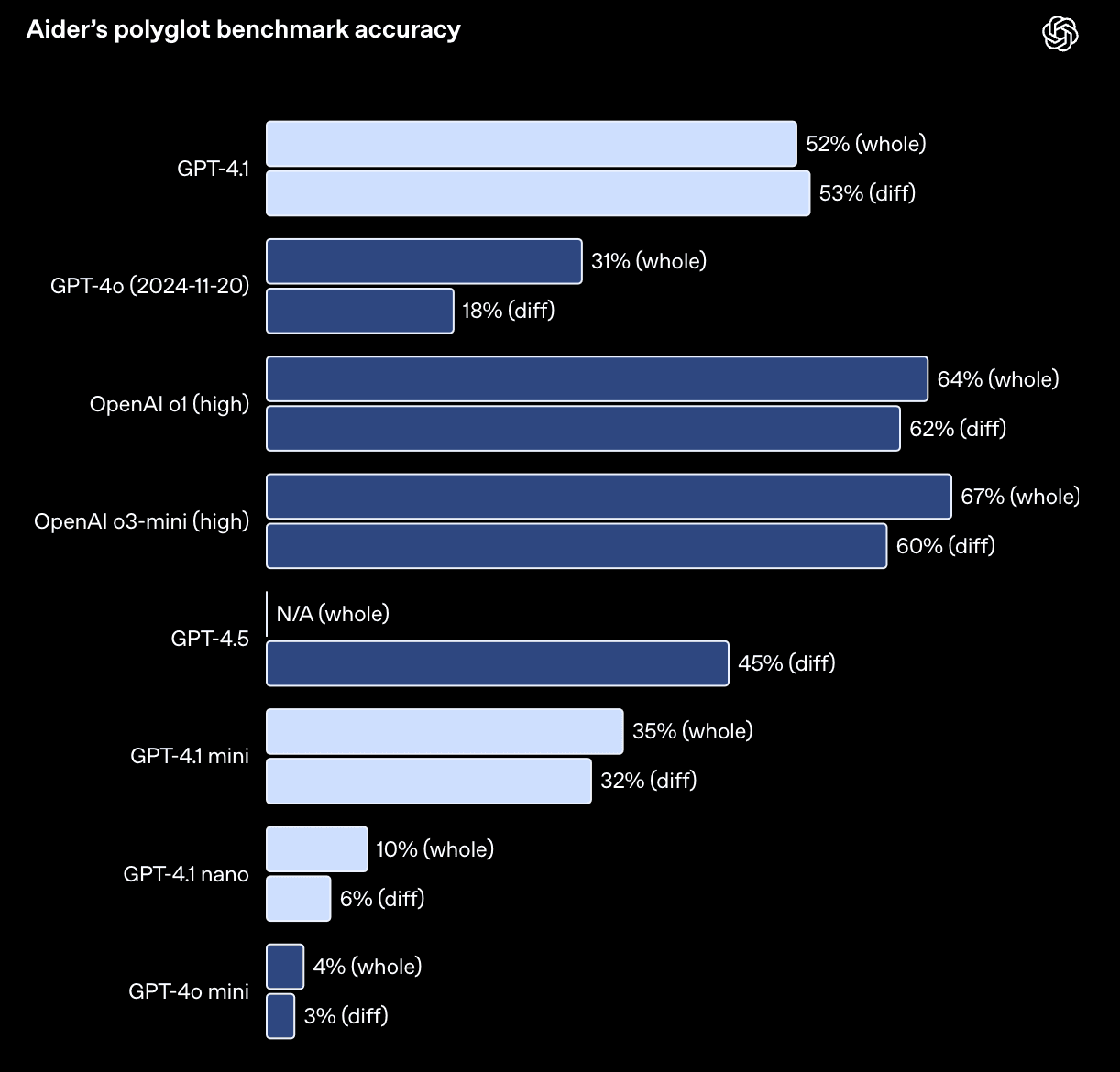

GPT-4.1 shows impressive advances over previous versions, particularly in programming tasks.

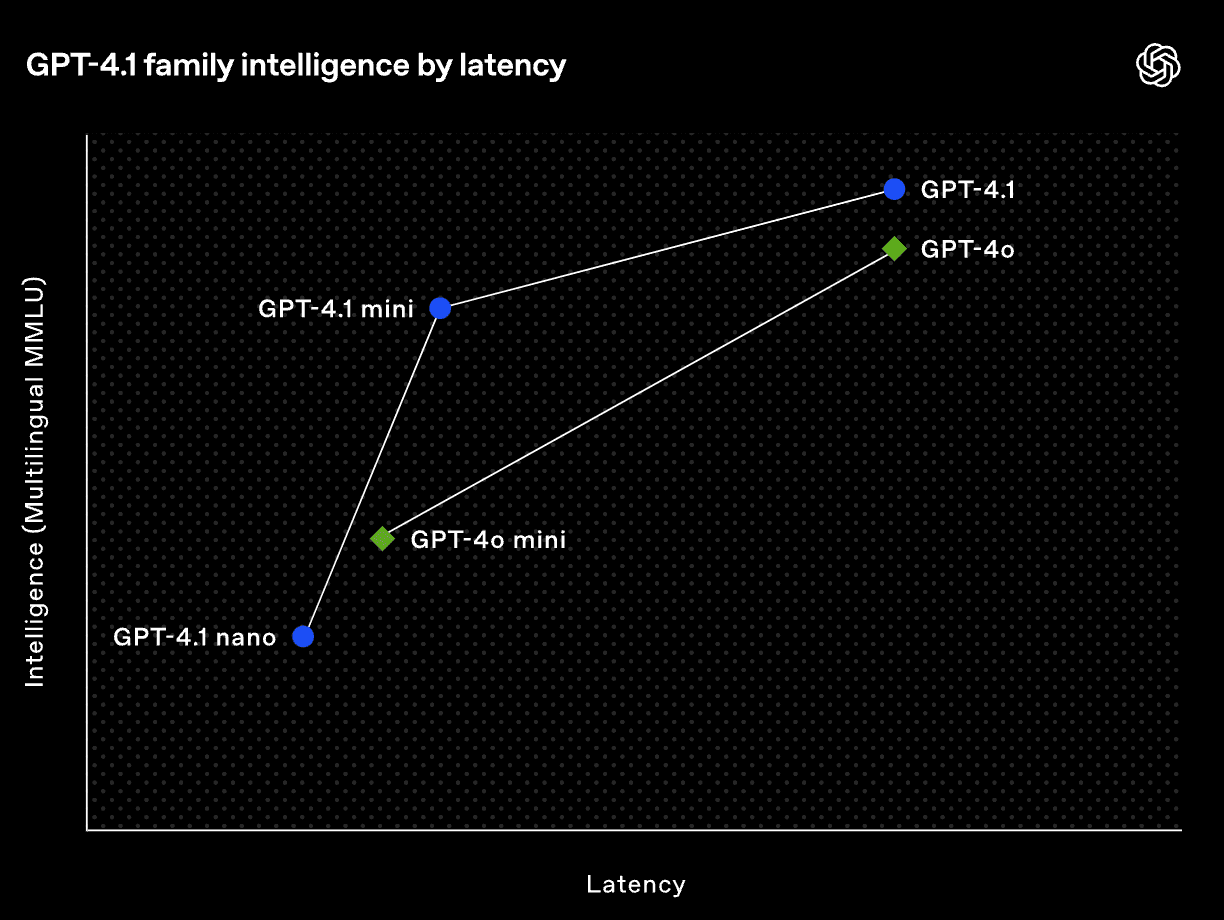

The model comes in three different options to fit various needs:

- Standard GPT-4.1: The full-powered version with all new capabilities

- GPT-4.1 Mini: A lighter version that needs less computing power

- GPT-4.1 Nano: The smallest, most affordable option for simple tasks

What makes this new model stand out is the size of its context window. GPT-4.1 can handle up to 1 million tokens at once (about 750,000 words), which means it can work with very long documents or conversations without losing track of earlier information.
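To make the 750,000-word figure concrete, here is a minimal sketch of the arithmetic. It assumes the article's rough ratio of 0.75 words per token; real tokenizers vary with language and content, and the function names are purely illustrative.

```python
# Rough context-window arithmetic.
# Assumption: 0.75 words per token, taken from the article's own estimate
# (1,000,000 tokens is about 750,000 words). This is not an exact figure.
CONTEXT_WINDOW_TOKENS = 1_000_000
WORDS_PER_TOKEN = 0.75


def estimated_tokens(word_count: int) -> int:
    """Estimate how many tokens a text of `word_count` words would use."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the estimated token count fits inside the window."""
    return estimated_tokens(word_count) <= window


if __name__ == "__main__":
    # A novel-length text, the article's stated limit, and a text that is too long.
    for words in (90_000, 750_000, 900_000):
        print(words, estimated_tokens(words), fits_in_context(words))
```

Under this estimate, a 90,000-word manuscript uses only a fraction of the window, while anything much beyond 750,000 words would need to be split up.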

The coding improvements are especially noteworthy, with GPT-4.1 scoring 21% better than GPT-4o and 27% better than GPT-4.5 on standard coding tests. It's also much better at following complex instructions over multiple turns of conversation, scoring 20% higher on instruction tests.

Other key features include:

- Support for both text and image inputs (though it can only output text)

- 26% lower cost compared to GPT-4o

- Available only through OpenAI's API, not in the regular ChatGPT interface
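As a rough illustration of what API-only access with text and image input looks like, the sketch below builds (but does not send) a request body in the shape of OpenAI's Chat Completions API. The helper name `build_request`, the prompt, and the example image URL are hypothetical. The model identifiers are the ones OpenAI lists for the three variants, so check them against your own account before relying on them.

```python
import json
from typing import Optional

# Assumed model identifiers for the three variants described above.
MODELS = {
    "standard": "gpt-4.1",
    "mini": "gpt-4.1-mini",
    "nano": "gpt-4.1-nano",
}


def build_request(prompt: str, image_url: Optional[str] = None,
                  variant: str = "standard") -> dict:
    """Build a Chat Completions-style request body with an optional image."""
    # Text always goes first; an image part is appended only when provided.
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {
        "model": MODELS[variant],
        "messages": [{"role": "user", "content": content}],
    }


if __name__ == "__main__":
    # Hypothetical prompt and image URL, used only for illustration.
    body = build_request("Describe this chart.",
                         "https://example.com/chart.png", variant="mini")
    print(json.dumps(body, indent=2))
```

Sending this body requires an API key and a POST to the Chat Completions endpoint. The response contains text only, which matches the text-only output noted in the list above.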

Despite these significant improvements and new capabilities, OpenAI has not published a safety report for GPT-4.1. When asked about this, an OpenAI spokesperson stated that they don't consider it a "frontier model," suggesting they don't believe a safety report is needed for this release.

What Are AI Model Safety Reports?

AI safety reports (or system cards) are detailed documents that AI companies publish when releasing new models. These reports show the testing done to check if models might cause harm. They include information about how the AI performs on various tests and any risks or limitations found during testing.

These reports matter because they help users understand what the AI can and cannot do safely. For the industry, they set standards for transparency and accountability.

Safety reports are especially important because they:

- Reveal potential problems like bias, harmful outputs, or security risks

- Help users make informed choices about which AI to use

- Allow independent researchers to verify company claims

- Build trust between AI developers and the public

By documenting both strengths and weaknesses, safety reports help everyone see how AI models might affect society before widespread use begins.

Why Didn’t OpenAI Publish a Safety Report?

OpenAI explained the missing safety report by stating that "GPT-4.1 is not a frontier model," suggesting it doesn't represent their most advanced technology. For average users, this classification implies the model isn't breaking new ground that would require special safety considerations.

This explanation has raised eyebrows among experts who note that GPT-4.1 does include significant improvements in key areas. Industry standards typically encourage publishing safety reports for any model with substantial upgrades or new capabilities.

Safety researchers have expressed concern about this decision, with some pointing out that even incremental improvements can introduce new risks. Former OpenAI safety researcher Steven Adler noted that while safety reports are voluntary, they represent "the AI industry's main tool for transparency."

Financial Times Reveals OpenAI Slashed Safety Resources for AI Models

A recent investigation by the Financial Times has uncovered alarming changes in how OpenAI tests the safety of its AI models. The report reveals that OpenAI has significantly cut the time and resources dedicated to safety testing, raising serious concerns among experts and former employees.

Previously, OpenAI allowed several months for safety tests. For GPT-4, which launched in 2023, testers had six months to conduct evaluations before its release. Now, OpenAI has been pushing to release new models with dramatically shortened testing periods, giving testers less than a week for safety checks in some cases.

According to the findings:

- Former testers note that some dangerous capabilities were only discovered after months of testing

- Safety tests are often not conducted on the final models released to the public, but on earlier versions

- Eight people familiar with OpenAI's testing processes reported that tests have become less thorough

- "It is bad practice to release a model which is different from the one you evaluated," warned a former OpenAI technical staff member

This trend appears to be part of a broader pattern across major AI companies, with transparency and safety practices weakening as competition increases.

Expert Concerns About Safety Practices

The changes have alarmed many in the field, including those who previously worked at OpenAI:

- "We had more thorough safety testing when [the technology] was less important," said one person currently testing OpenAI's upcoming o3 model

- As models become more capable, the "potential weaponization" risk increases, but testing is decreasing

- Some testers believe OpenAI is "just not prioritizing public safety at all" with their current approach

Daniel Kokotajlo, a former OpenAI researcher who now leads the non-profit AI Futures Project, explained: "There's no regulation saying [companies] have to keep the public informed about all the scary capabilities... and also they're under lots of pressure to race each other so they're not going to stop making them more capable."

The Competitive Pressure Factor

The rush to release models stems largely from "competitive pressures," according to people familiar with the matter. OpenAI is racing against Big Tech groups like Meta and Google, as well as startups including Elon Musk's xAI.

While OpenAI defends its practices, claiming efficiency improvements, the voluntary nature of safety reporting allows companies significant flexibility in how thoroughly they test their models before release.

This situation highlights a growing conflict between commercial interests and safety considerations in the rapidly evolving AI industry, with potential risks for users who rely on these increasingly powerful systems.

OpenAI's Previous Safety Commitments

OpenAI has previously made substantial commitments to safety and transparency in AI development. The company promised to test customized versions of its models to assess potential misuse, such as whether its technology could help make biological viruses more transmissible.

These commitments weren't casual; they were formal pledges to governments. Ahead of the UK AI Safety Summit in 2023, OpenAI called system cards "a key part" of its approach to accountability.

Similarly, before the Paris AI Action Summit in 2025, the company emphasized that system cards provide valuable insights into a model's risks.

However, recent practices tell a different story:

- OpenAI has only conducted limited testing on older, less capable models

- The company has never reported how newer models would score if fine-tuned

- Safety tests now receive days rather than months of attention

Former OpenAI safety researcher Steven Adler noted this disconnect, stating: "If it is not following through on this commitment, the public deserves to know."

AI Giants Are Dodging Safety Rules — And It’s a Problem

Major AI companies have actively opposed efforts to create mandatory safety regulations for advanced AI systems. Instead, they've pushed for self-regulation, arguing that government oversight would slow innovation and harm competitiveness.

This resistance is clearly seen in their opposition to California's Senate Bill 1047, which would have required AI developers to conduct and publish safety evaluations before releasing powerful models. OpenAI and other leading companies lobbied against this bill, effectively blocking standardized safety requirements.

The self-regulation approach shows significant limitations:

- Companies determine their own testing standards with no external verification

- Safety reports vary widely in detail and thoroughness

- Economic pressures consistently push companies to prioritize speed over safety

- There's no enforcement mechanism for voluntary commitments

This pattern of resistance to oversight creates a dangerous situation where AI capabilities continue advancing while safety practices decline. Without meaningful regulation, companies face few consequences for cutting corners on testing, potentially putting users and society at greater risk.

Conclusion

The rush to release AI models with less safety testing directly impacts everyday users. When companies like OpenAI slash testing time from months to days, models may contain undiscovered risks that affect your privacy, security, and the reliability of AI responses.

This reflects the ongoing tension between competitive pressure and safety considerations. Companies racing to outdo each other prioritize speed over thorough evaluation, creating a dangerous precedent as AI becomes increasingly powerful. As these models grow more capable of complex tasks, the potential risks of inadequate testing will only multiply.

Users have a crucial role in pushing for better standards. By questioning whether models have published safety reports, what specific testing was conducted, and what limitations exist, consumers can create market pressure for safer AI.

Following resources like Stanford's AI Index Report, the Center for AI Safety's blog, and the AI Vulnerability Database can help you stay informed about emerging concerns in this rapidly evolving field.

FAQs

1. Why did OpenAI release GPT-4.1 without a safety report?

OpenAI claims GPT-4.1 isn't a "frontier model" requiring a safety report, despite its significant improvements. This decision reflects a broader trend of reduced safety testing as competition increases, prioritizing faster releases over thorough evaluation of potential risks.

2. How has OpenAI's safety testing process changed over time?

OpenAI has drastically reduced safety testing timeframes from six months for GPT-4 to less than a week for newer models. Tests have become less thorough and are often conducted on preliminary versions rather than final models, raising concerns about undiscovered risks.

3. What are the dangers of rushing AI safety testing?

Rushed safety testing increases risks of harmful outputs, security vulnerabilities, and unforeseen consequences. Former OpenAI testers note that dangerous capabilities were only discovered after months of testing, suggesting current shortened timeframes may miss critical issues.

4. How can users protect themselves when using AI models with limited safety testing?

Users should research whether models have published safety reports, understand their limitations, use enterprise versions when available, enable safety settings, and stay informed through resources like Stanford's AI Index Report and the AI Vulnerability Database.

5. Are AI companies legally required to conduct safety testing?

Currently, AI safety testing remains largely voluntary in most jurisdictions. Companies have actively opposed regulations like California's SB 1047 that would mandate testing. The EU's AI Act will soon require safety testing for powerful models, but global standards remain inconsistent.
