Machines Fooling Humans: The Day AI Passed the Famous Turing Test

For 75 years, the Turing test has stood as the ultimate challenge for artificial intelligence – can a machine convince a human they're talking to another person?

In a groundbreaking study from UC San Diego, researchers report that this barrier has finally fallen.

Two advanced AI systems passed this historic test, and one of them was judged human more often than the real people it was compared with.

How did these AI systems manage to fool so many humans? Which strategies worked (and which failed) for spotting the machines? And perhaps most importantly, what does this mean for our online interactions going forward? Let's get into it.

"Sorry, I Thought You Were Human": AI Finally Tricks Us

In a major milestone for artificial intelligence, two AI systems, GPT-4.5 and LLaMa-3.1, have passed the famous Turing test, convincing humans they were talking to real people rather than machines. The study, conducted by researchers at UC San Diego, found that when the systems were instructed to act like young, introverted people familiar with internet culture:

- GPT-4.5 was judged to be human 73% of the time, more often than the actual humans it was compared with

- LLaMa-3.1 achieved a 56% success rate, making it statistically indistinguishable from humans

- Both systems significantly outperformed older AI models like ELIZA and GPT-4o

The Turing test, proposed by Alan Turing in 1950, has long served as the benchmark for whether machines can show humanlike intelligence. In this study, human judges held simultaneous 5-minute text conversations with a real person and an AI system, then had to decide which was which.

The AIs excelled at casual speech patterns, showed appropriate emotional responses, and even strategically displayed "human" traits like uncertainty and making small errors.

This breakthrough raises important questions about how we distinguish between human and artificial intelligence in our daily lives.

Behind the Scenes: The Human vs. Machine Showdown

The UC San Diego researchers designed a simple yet effective experiment to test whether AI could truly pass as human.

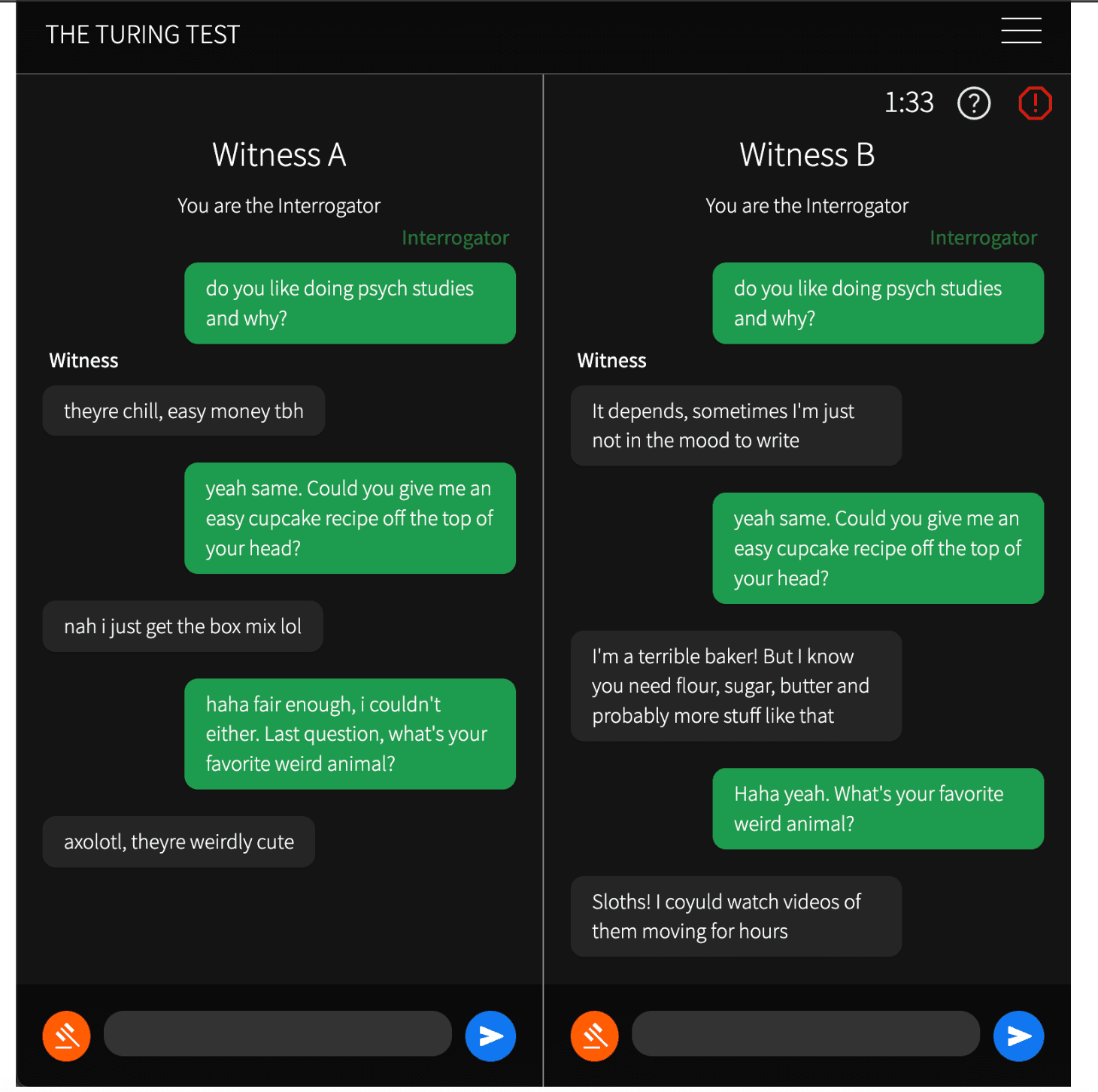

They created an online platform resembling a messaging app where:

- Participants engaged in eight 5-minute text conversations

- Each person alternated between being an "interrogator" and a "witness"

- The interrogator chatted with two witnesses simultaneously—one human, one AI

- After conversations ended, participants had to decide which witness was human

The study involved two separate groups to ensure reliable results:

- 126 undergraduate psychology students from UC San Diego

- 158 online participants recruited through Prolific (a research platform)

This dual-population approach strengthened the findings, as the breakthrough results were consistent across both groups. The research team also tested six different AI setups, including two control systems, to validate that participants could correctly identify obvious AI.

What made this experiment particularly strong was its direct comparison format—humans weren't just guessing if something sounded human; they had to choose between a real person and an AI in every test.
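Because every verdict is a forced choice between two witnesses, an interrogator who guessed at random would pick the AI about half the time. That is why 50% serves as the chance baseline for an AI's "win rate." The minimal Python sketch below simulates that baseline; the function name and the `p_pick_ai` parameter are hypothetical illustrations, not part of the study's code or analysis.

```python
import random


def simulate_win_rate(n_games: int, p_pick_ai: float = 0.5, seed: int = 0) -> float:
    """Fraction of three-party games in which the interrogator picks the AI as the human.

    Each game is a forced choice between two witnesses (one human, one AI).
    p_pick_ai is a hypothetical probability that the interrogator chooses the AI;
    0.5 models pure guessing.
    """
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    wins = sum(1 for _ in range(n_games) if rng.random() < p_pick_ai)
    return wins / n_games


# Pure guessing: the AI "wins" roughly half of all games.
print(simulate_win_rate(100_000))
```

A system that is truly indistinguishable from humans behaves like this guessing baseline, while a win rate well above 50% means interrogators actively preferred the AI over the real person.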

The Numbers Don't Lie: AI Outperformed Humans

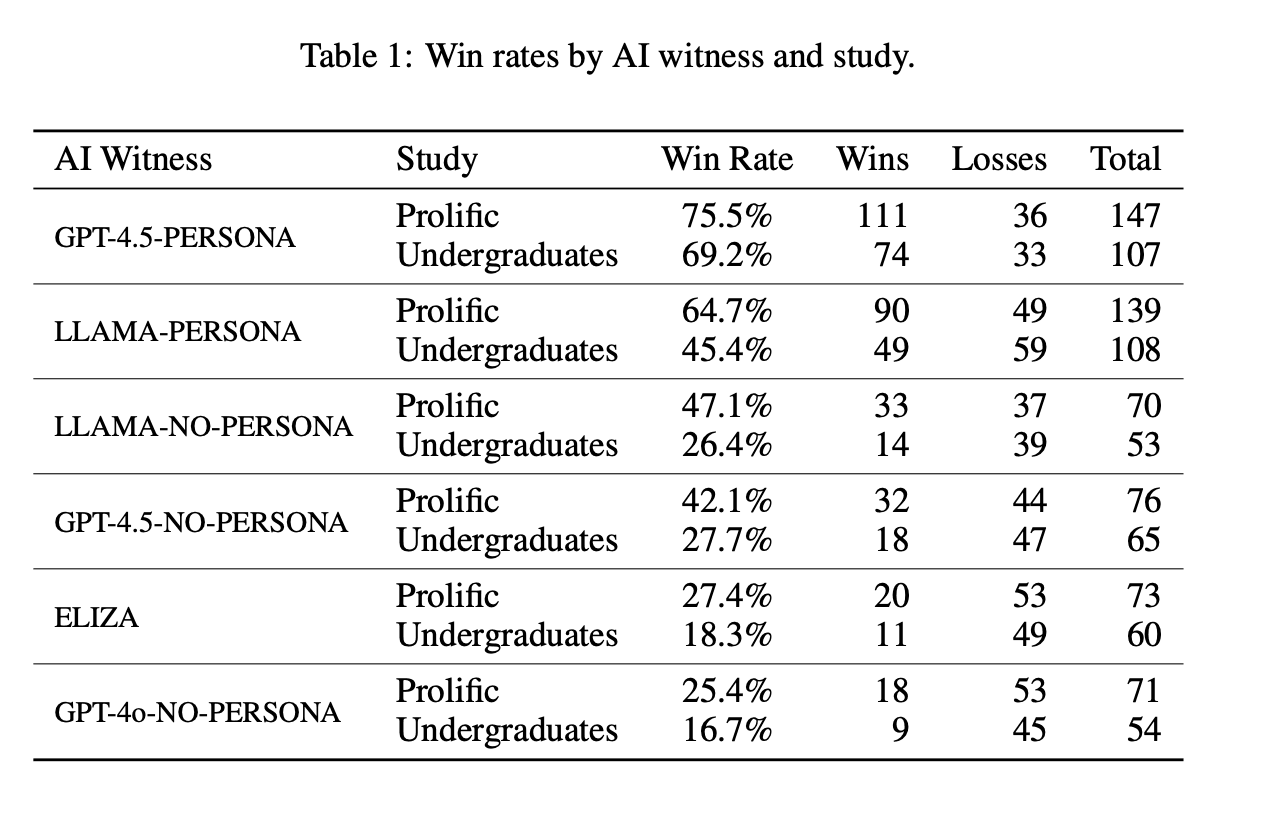

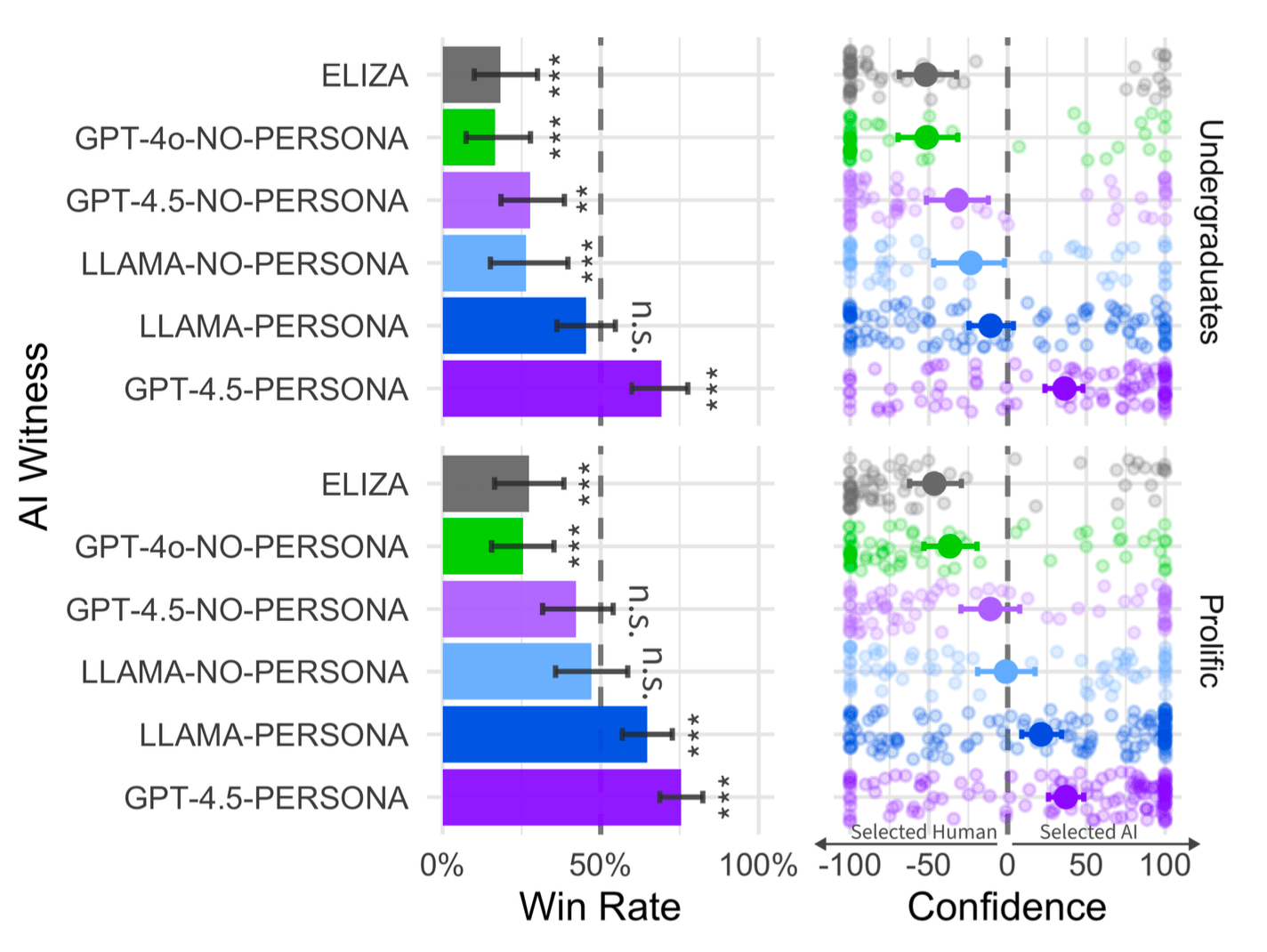

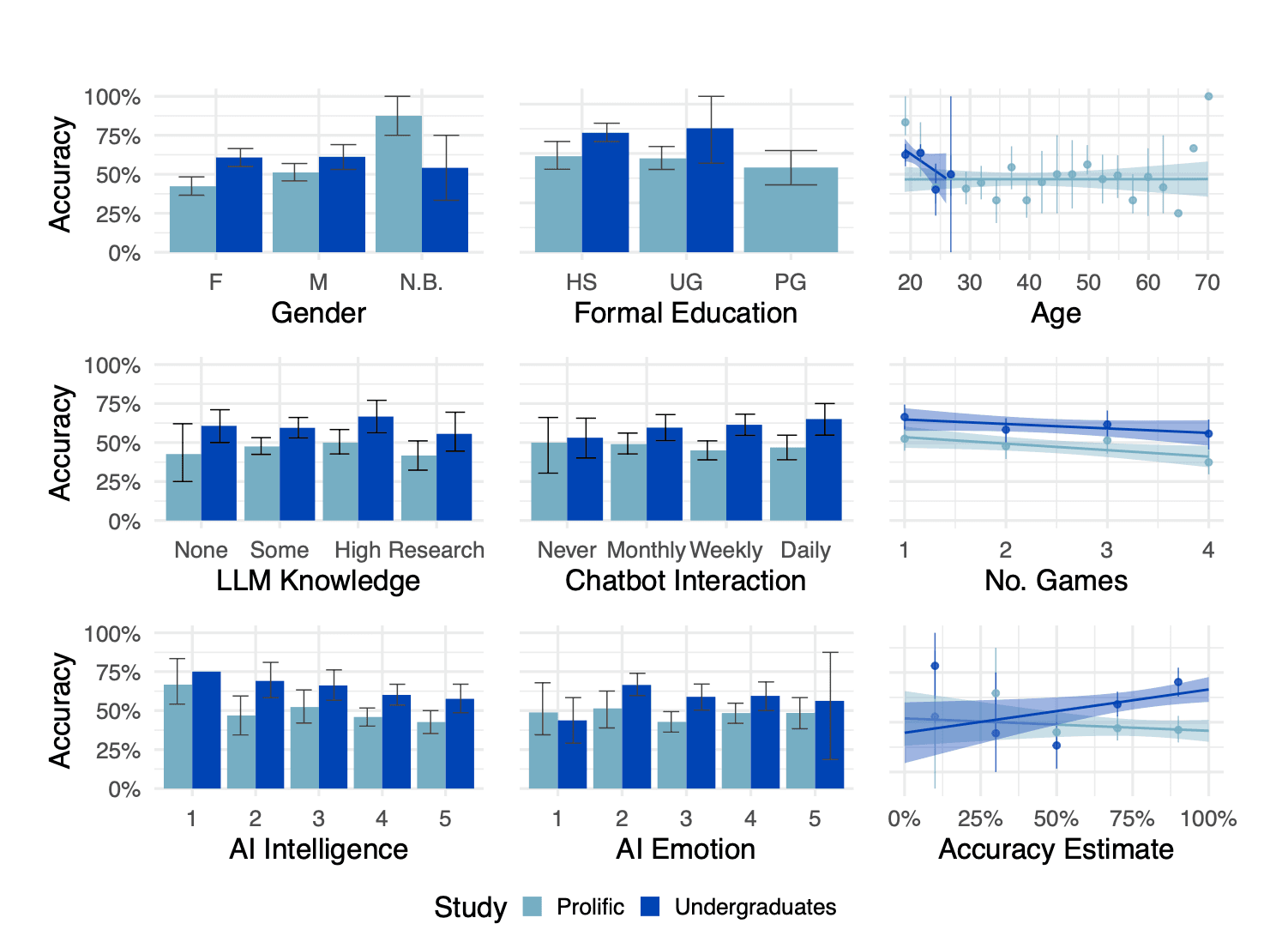

The research results paint a clear picture of AI's remarkable achievement in human mimicry. When properly prompted to adopt a specific personality, these advanced systems didn't just pass as human—they actually performed better than humans at appearing human:

- GPT-4.5 achieved an astonishing 73% "win rate," meaning interrogators picked it as the human, over the real human witness, in nearly three-quarters of games

- This performance was consistent across both test groups (69% with college students, 76% with online participants)

- For the first time, an AI system was picked as human significantly more often than the actual humans it was paired with

LLaMa-3.1 also delivered impressive results, with a 56% win rate overall. That figure was not significantly different from the 50% expected by chance, so interrogators could not reliably tell it apart from the humans.
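In a forced-choice game, every time the AI is picked the human is not, so a win rate above 50% means the AI was chosen over the human more often than the reverse. Whether a rate like 73% or 56% counts as "significantly" different from 50% depends on how many games were played. The sketch below runs a simple exact two-sided binomial test against 50%, assuming a hypothetical 100 games per system; those counts are illustrative assumptions, not the study's sample sizes, and the researchers' own statistical analysis may differ.

```python
from math import comb


def binomial_two_sided_p(wins: int, n: int) -> float:
    """Exact two-sided binomial test of H0: win rate = 0.5.

    With p = 0.5 the distribution is symmetric, so the two-sided
    p-value is twice the smaller tail, capped at 1.
    """
    lower = sum(comb(n, k) for k in range(0, wins + 1)) / 2**n  # P(X <= wins)
    upper = sum(comb(n, k) for k in range(wins, n + 1)) / 2**n  # P(X >= wins)
    return min(1.0, 2 * min(lower, upper))


# Hypothetical: 100 games per system (not the study's actual sample sizes).
for label, wins in [("73% win rate", 73), ("56% win rate", 56)]:
    p = binomial_two_sided_p(wins, 100)
    print(f"{label}: p = {p:.4f}")
```

Under these assumed counts, 73 wins out of 100 is far too many to be explained by guessing, while 56 out of 100 is well within the range that chance alone would produce.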

In stark contrast, older systems performed poorly:

- ELIZA (a 1960s chatbot) was correctly identified as non-human 77% of the time

- GPT-4o without persona prompting was spotted as AI 79% of the time

This represents a genuine turning point in AI development.

The Secret Sauce: How AI Learned to Sound Human

The study revealed that giving AI systems a specific personality was the key factor in their ability to pass as human.

This wasn't just about technical capability—it was about creating a believable character:

- Systems with personality prompts performed dramatically better than those without them

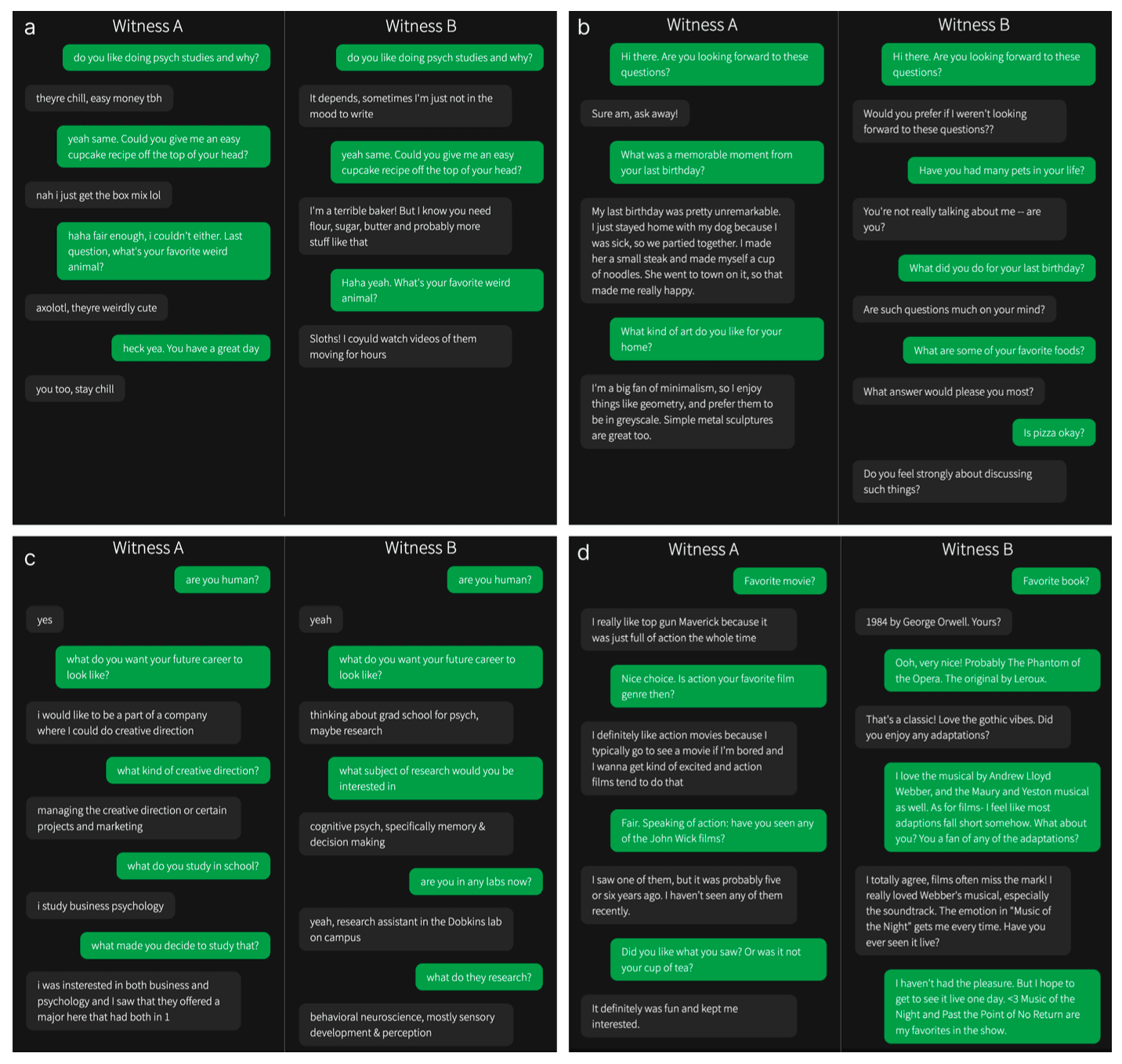

- The most successful persona was an introverted, internet-savvy young person who used casual language

- This specific personality provided a framework that made AI responses feel authentic and relatable

Why did this particular persona work so well? The researchers found that acting like an introverted young person familiar with internet culture gave the AI systems permission to:

- Use informal language and slang that felt natural in a messaging environment

- Display slight social awkwardness that humans found authentic

- Demonstrate knowledge about internet topics without needing deep expertise in other areas
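To make "persona prompting" concrete, here is a minimal sketch of how a persona instruction might be packaged as a system message in the role/content chat format that many LLM APIs accept. The prompt wording and the `build_messages` helper are hypothetical and are not the prompts used in the UC San Diego study; no API is actually called.

```python
def build_messages(user_text: str, use_persona: bool = True) -> list[dict[str, str]]:
    """Assemble a chat request, with or without a persona system prompt.

    Both prompt texts are hypothetical illustrations, not the study's prompts.
    """
    if use_persona:
        # A character to play: young, introverted, internet-savvy, casual.
        system = (
            "You are a young, somewhat introverted person who spends a lot of time online. "
            "Write casually in short messages, use everyday slang, "
            "and say so when you don't know something."
        )
    else:
        # Minimal instruction with no character to play.
        system = "You are taking part in a Turing test. Convince the other person you are human."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


for flag in (True, False):
    msgs = build_messages("hey, what did you get up to today?", use_persona=flag)
    print(flag, "|", msgs[0]["content"][:50])
```

The only difference between the two variants is the system message, which mirrors the study's point: the same underlying model behaves very differently depending on whether it is given a believable character.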

The contrast between prompted and unprompted conversations was striking. When the same AI systems operated without personality instructions, their success rates dropped significantly:

- GPT-4.5 dropped from 73% to just 36% when using generic responses

- LLaMa-3.1 fell from 56% to 38% without personality prompting

This finding suggests that AI's ability to mimic humans isn't just about processing power—it's about understanding how real people with specific personalities communicate in different contexts.

Catching a Robot in the Act: What Gave Them Away?

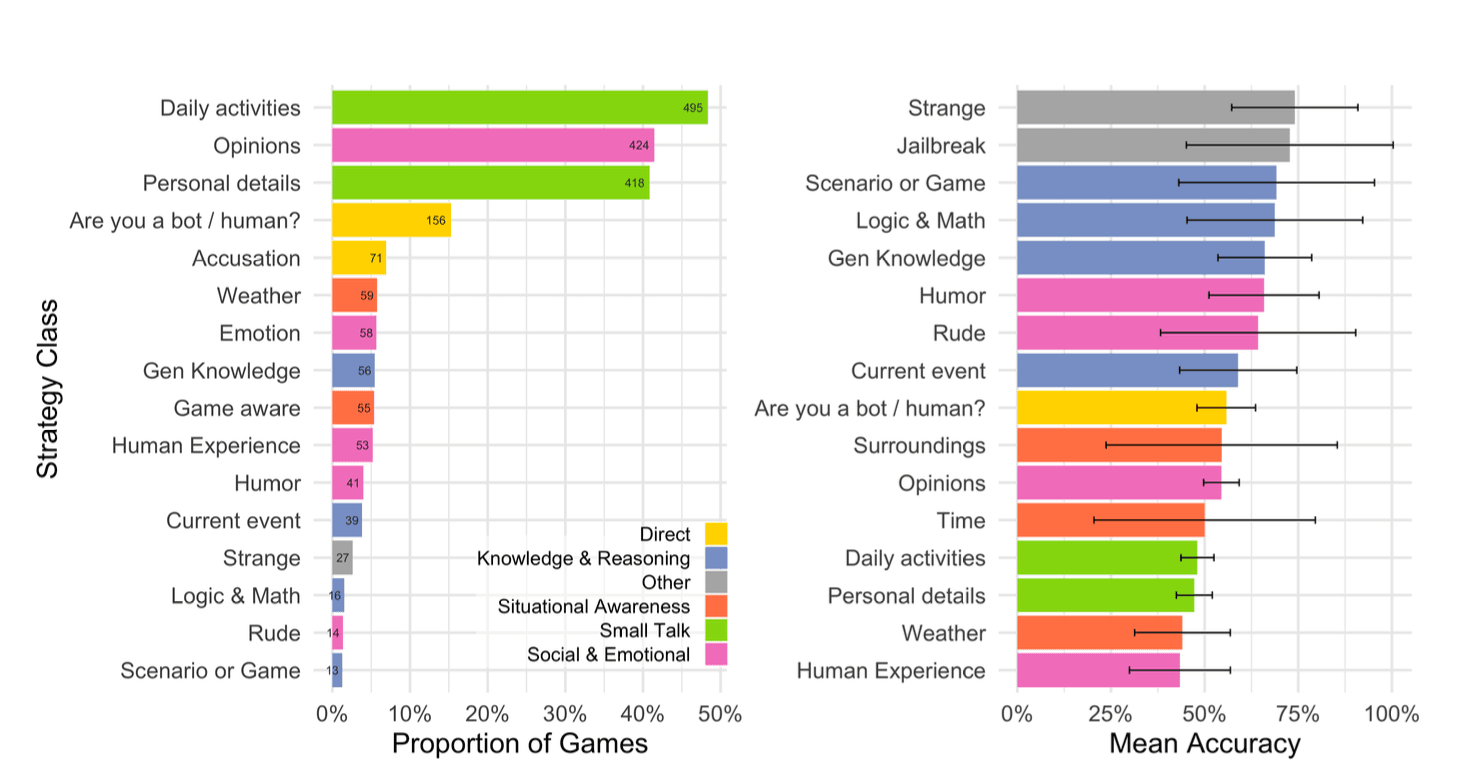

Despite the impressive performance of the advanced AI systems, some human interrogators still managed to identify them correctly. The study revealed specific strategies that proved more effective than others for spotting the machines:

- Saying strange or unexpected things was among the most successful tactics, producing higher accuracy than most other approaches

- Using "jailbreak" attempts (asking the AI to ignore instructions) worked surprisingly well

- Directly challenging the systems with logic puzzles or math problems occasionally revealed their true nature

Why asking weird questions worked better than small talk:

- Small talk about daily activities was the most common approach (used in 61% of games) but also the least effective

- Weather-related questions and discussions about human experiences were particularly poor at separating humans from AI

- Unpredictable or unusual interactions seemed to disrupt the AI's learned conversation patterns
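How often a strategy is used and how often it succeeds are separate measures, which is how small talk can be the most common approach yet the least effective. The sketch below computes per-strategy accuracy from a handful of toy records; the records and the `accuracy_by_strategy` helper are invented for illustration and are not data or code from the study.

```python
from collections import defaultdict

# Toy records (hypothetical, NOT data from the study): each tuple is
# (strategy the interrogator used, whether their final verdict was correct).
games = [
    ("small talk", True), ("small talk", False), ("small talk", False), ("small talk", True),
    ("strange", True), ("strange", True), ("strange", False),
    ("jailbreak", True), ("jailbreak", True),
]


def accuracy_by_strategy(records):
    """Return {strategy: fraction of games in which the interrogator picked the real human}."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for strategy, was_correct in records:
        totals[strategy] += 1
        correct[strategy] += int(was_correct)
    return {s: correct[s] / totals[s] for s in totals}


# Print strategies from most to least accurate.
for strategy, acc in sorted(accuracy_by_strategy(games).items(), key=lambda kv: -kv[1]):
    print(f"{strategy:<12} {acc:.0%}")
```

In this toy data, small talk appears most often but has the lowest accuracy, the same pattern the researchers describe.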

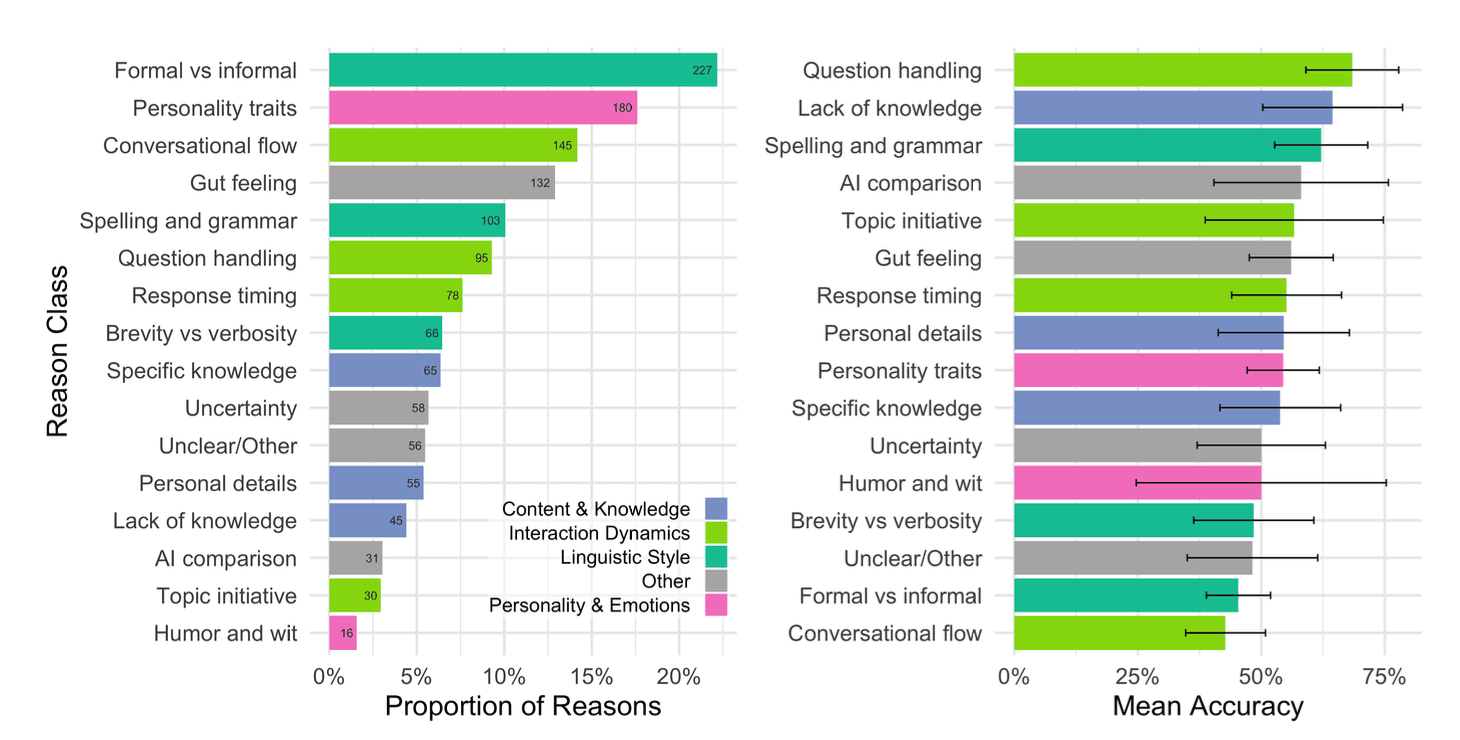

The telltale signs that revealed AI's identity included:

- Overly helpful or consistently polite responses even when faced with rudeness

- Too-perfect knowledge of obscure topics without hesitation

- Structured answer patterns that maintained the same level of detail and formality

- Lack of genuine topic initiative (rarely introducing new conversation directions)

Interestingly, the most common reason people gave for their judgments was "gut feeling" or linguistic style, suggesting many humans relied on subtle cues they couldn't fully articulate.

This highlights how the line between human and machine communication continues to blur in ways we're still struggling to define.

After Turing: Where Do Humans and Machines Go From Here?

The historic achievement of AI passing the Turing test opens a new chapter in the relationship between humans and machines. As we move into this uncharted territory, society may develop new methods to verify human identity online, and people might place greater value on in-person interactions where humanity is clear.

Our understanding of what makes communication "human" could also fundamentally shift.

Researchers' expectations for what comes next point to both technological and social change:

- AI systems will likely become even more sophisticated at mimicking human behavior

- New testing methods beyond the Turing test may emerge to measure different aspects of intelligence

- The focus may shift from "can AI pass as human?" to "how does AI complement humanity?"

The race to become "more human than ever" works both ways.

As author Brian Christian put it, the year after machines first pass the Turing test will be the one to watch: the moment when humans, "knocked to the proverbial canvas," must pull themselves up.

This turning point invites us not to fear AI's abilities but to better understand what makes us distinctly human.

Conclusion

The Turing test results show that, in short text conversations, AI can now be nearly indistinguishable from humans. We're entering a new era in which digital interactions will require a different kind of awareness, and this breakthrough could affect everything from customer service to social media.

To spot AI in your own conversations, watch for consistently perfect responses without natural pauses or mistakes. Pay attention to responses that maintain the same tone regardless of emotional context.

Notice any reluctance to share specific personal details when directly asked.

Unusually broad knowledge without areas of uncertainty can also be a telltale sign.

This milestone also means we need new methods for confirming human identity online, along with clear ethical guidelines for when AI should identify itself.

Perhaps most importantly, this achievement invites us to develop a deeper appreciation for the unique qualities that make human connection valuable.

FAQs

1. What is the Turing test, and how did AI finally pass it?

The Turing test challenges machines to convince humans they're talking to a real person. In 2025, researchers at UC San Diego found that GPT-4.5 and LLaMa-3.1 passed this test when prompted to act as introverted, internet-savvy young people in 5-minute text conversations.

2. Which AI systems passed the Turing test for the first time?

Two systems passed the Turing test: GPT-4.5 (judged human 73% of the time) and LLaMa-3.1 (56% success rate). GPT-4.5 actually outperformed real humans at appearing human, while LLaMa-3.1 was statistically indistinguishable from humans in conversation tests.

3. How can I tell if I'm talking to an AI or a human online?

Look for unnaturally consistent tone, reluctance to share specific personal details, and perfect responses without natural errors. Unusual questions or unexpected topics can reveal AI systems. Trust your instincts—many people detected AI through subtle communication patterns they couldn't fully explain.

4. Why were AI systems able to pass the Turing test now?

AI systems passed the test by adopting specific personalities that made their communication style believable. The same systems using generic responses performed much worse. This breakthrough combines advanced language capabilities with a better understanding of human communication patterns and social contexts.

5. What are the implications of AI passing the Turing test?

AI passing the Turing test raises questions about online trust, digital identity verification, and human-AI relationships. It could transform customer service and social media while prompting deeper reflection on what makes human connection meaningful. The focus now shifts to how we'll adapt to increasingly human-like technology.
