Ollama Review 2026: Pros, Cons, Pricing & Alternatives

According to a Deloitte 2024 Connected Consumer Survey, 48% of consumers experienced at least one security failure in the past year, up from 34% in 2023. With AI privacy incidents surging and trust in cloud AI providers declining, it's high time we explore local AI solutions that keep your data completely private and under your control.

In this Ollama review, we'll explore everything you need to know about this open-source tool that lets you run powerful AI models directly on your Mac without cloud dependency.

Here's what we're going to cover:

- What is Ollama and its capabilities

- Key features and how Ollama works

- Drawbacks and limitations you should know

- Pricing and hardware requirements

- Five powerful alternatives including Elephas

- Customer reviews from real users

- Which tool is the best choice for different needs

By the end of this article, you'll understand whether Ollama fits your requirements or if one of its alternatives better suits your workflow, privacy needs, and budget constraints.

Let's get into it.

What is Ollama?

Ollama is an open-source platform that enables users to run large language models locally on their Mac computers without requiring cloud connectivity or recurring API costs. Often described as "Docker for LLMs," Ollama dramatically simplifies the process of downloading, managing, and running powerful AI models like Llama, Mistral, and DeepSeek directly on personal hardware through a command-line interface.

The tool is designed for developers, researchers, and privacy-conscious individuals who need complete data control and want to avoid cloud-based AI limitations. Ollama stands out because of its completely free, MIT-licensed approach to local AI deployment. Users can download pre-trained models and, once a model is on disk, interact with it through the terminal or integrated applications without internet connectivity.

The platform offers native support for macOS, Windows, and Linux and supports over 100 open-source models. Most people choose Ollama when they want data sovereignty and cost-free AI access instead of subscription-based cloud services. The tool handles all LLM inference tasks locally while keeping things simple for users who need privacy-first AI solutions.

Key Points:

- Primary function: Run large language models locally without cloud dependency

- Target users: Developers, researchers, privacy-conscious professionals, healthcare/legal sectors

- Main differentiator: Completely free, open-source, command-line focused

- How it works: Download models via CLI, run inference locally on your hardware

- Platform availability: macOS 11+, Windows 10 22H2+, Linux (Ubuntu, Fedora, CentOS)
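Beyond the terminal, a running Ollama instance also exposes a local HTTP API that integrated applications talk to. The following is a minimal sketch, assuming Ollama is running on its default local port (11434) and that a model has already been pulled; the model name `llama3.2` used in the usage note is only a placeholder, and the helper names are our own.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the reply is a single JSON object
    # whose "response" field holds the full completion.
    return body["response"]
```

With the server running, `generate("llama3.2", "Explain what a context window is in one sentence.")` should return the model's answer as a string. Nothing in this exchange leaves your machine, which is the point of the local-first design described above.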

Drawbacks of Ollama:

- Performance Limitations: Local models often run significantly slower than cloud alternatives. On modest hardware, basic outputs can take 10-30 seconds, which makes them unsuitable for high-throughput applications

- Significant Hardware Investment: Requires powerful GPUs/CPUs with upfront costs of $500-$3,000+ and runs slowly on weaker machines, creating a high barrier to entry for average users

- Accuracy Concerns: Users report that Ollama and local models can be "slow, inaccurate, and unpredictable," with historically lower accuracy compared to commercial cloud models like GPT-4 or Claude

- Limited Customization Features: Lacks cutting-edge features and advanced customization options that enterprise cloud platforms offer, making it feel limiting for users wanting granular control

- No Advanced Productivity Features: Ollama is just a model runner with no system-wide integration, automation capabilities, or document processing features that modern productivity tools provide
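One way to put a number on the speed concern above is to compute tokens per second from the timing fields Ollama returns with a non-streaming `/api/generate` response (`eval_count`, the number of generated tokens, and `eval_duration`, reported in nanoseconds). The sketch below assumes you already have such a response as a dictionary; the sample values are made up for illustration and are not a benchmark.

```python
def tokens_per_second(response: dict) -> float:
    """Compute generation speed from an Ollama /api/generate response.

    eval_count is the number of generated tokens;
    eval_duration is the generation time in nanoseconds.
    """
    eval_count = response["eval_count"]
    eval_duration_ns = response["eval_duration"]
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return eval_count / (eval_duration_ns / 1e9)


# Hypothetical numbers for illustration only, not a measured result.
sample = {"eval_count": 240, "eval_duration": 12_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")
```

Running the same prompt on your own hardware and comparing this figure across models is a quick way to judge whether a given machine is fast enough for your workload.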

Ollama Customer Reviews

"I use it to create Ollama LLM Throughput Benchmark Tool" - Jason TC Chuang on Product Hunt

"No one should use ollama. A cursory search of r/localllama gives plenty of occasions where people have been frustrated by Ollama's limitations" - User on Hacker News

Quick Overview Table

| Feature | Details |
|---|---|
| Best For | Privacy-conscious developers, researchers, regulated industries (healthcare, legal, finance) |
| Pricing Starts At | Free (open-source) |
| Free Plan | Yes (100% free, MIT License) |
| Platform | macOS 11+, Windows 10 22H2+, Linux |
| Company | Ollama Inc., founded 2021 by Jeffrey Morgan & Michael Chiang |
| Our Rating | 3.5/5.0 |

Top 5 Best Alternatives to Ollama

1. Elephas

Elephas is an AI-powered personal knowledge assistant designed exclusively for Mac, iPhone, and iPad users that combines local AI capabilities with deep macOS integration, knowledge management, and workflow automation. Unlike basic LLM runners like Ollama that simply provide command-line access to run models locally, Elephas is a complete productivity ecosystem that can actually use Ollama as its AI backend while adding system-wide integration, automation capabilities, and a user-friendly interface accessible anywhere on your Mac with a simple keyboard shortcut.

The platform handles local model execution through Ollama, LM Studio, or its own built-in offline models while providing enterprise-grade productivity features including Super Brain knowledge bases that process thousands of pages, multi-step AI workflow automation, context-aware writing assistance, and intelligent email replies. Elephas works with both cloud providers like OpenAI, Claude, and Gemini, as well as local models for 100% privacy, giving users flexibility to choose based on task requirements.

Teams use it for document processing, knowledge management, and automating repetitive tasks while individuals focus on writing assistance, research, and productivity enhancement. The technology supports 20+ file formats and can handle up to 1 million indexing tokens while maintaining complete data privacy with end-to-end encryption and local storage.

Key Features:

- System-Wide Integration Across Mac Apps: Super Command provides context-aware AI assistance across your entire Mac ecosystem with Ctrl + Space universal access from any application. It automatically understands the context of your current screen and provides relevant assistance, allowing you to perform summarization, grammar fixes, rewrites, and translation directly within Mail, Google Docs, Obsidian, Slack, and more without context switching.

- Super Brain - Large Document Processing: Acts as your personal second brain with the ability to process thousands of pages efficiently, supporting 20+ file formats including PDFs, Word, Excel, Notion pages, YouTube videos, and audio recordings. Handle up to 1 million indexing tokens (equivalent to 50 books with 300 pages each) across multiple knowledge bases with 100% private indexing using offline models, ensuring your data never leaves your Mac.

- Advanced Workflow Automation with AI Agents: Multi-step AI-powered task automation with pre-built templates for research, document summarization, web searches, mind maps, and PDF exports. Create custom workflow chains using AI agents, trigger workflows with "@" commands, and automate repetitive tasks including audio-to-text conversion, Super Brain searches, and presentation creation without coding knowledge.

- Multi-LLM Support & Flexible AI Engine: Choose from multiple cloud providers (OpenAI GPT-4, Claude Sonnet, Gemini) and local model support through Ollama, LM Studio, Jan.ai, and DeepSeek for offline reasoning. Includes built-in offline AI models for 100% privacy with no internet required, plus API key flexibility to remove monthly credit limitations and switch between providers based on task requirements.

- Context-Aware Writing Assistance: Intelligent writing tools including Smart Write for composing clear messages, one-click email responses that read incoming context and generate coherent replies, tone customization for professional or casual communication, real-time grammar and style fixes, AI-powered content continuation, multi-language translation, and document summarization without leaving your application.

Pricing: $9.99/month

Why is it better than Ollama?

- Complete Productivity Ecosystem: While Ollama only runs models via command line, Elephas provides system-wide Mac integration, native app interface, and works across any application with one keyboard shortcut

- Advanced Automation & Workflows: Elephas offers multi-step AI workflow automation with pre-built templates and custom agents, while Ollama requires manual prompting for every single task

- Massive Document Processing: Super Brain handles thousands of pages (1M+ tokens) with persistent knowledge bases you can query repeatedly, whereas Ollama is limited by context windows of 4K-128K tokens per session

- Zero Technical Expertise Required: Beautiful native Mac/iOS app with no command-line setup needed versus Ollama's terminal-based workflow requiring technical proficiency

- Can Use Ollama as Backend: Elephas can actually integrate with Ollama to run local models while adding all productivity features on top, giving you local privacy PLUS enterprise capabilities

Customer Reviews

"Perfect overall Cold clone on a repo with many LFS assets is fast, but the first “git lfs pull” for big branches still takes time." - User on Capterra

"I purchased a lifetime subscription around 2 years ago. Elephas has significantly enhanced my customer relationships by refining my tone and professionalism in written communication. The ability to swiftly outline key points and tap the Elephas button has greatly improved my writing. Elephas transforms my rants and rambles into well-crafted, friendly or professional responses, saving me a lot of time and making me seem like a nice person. (Seriously) [sensitive content hidden] always offers prompt, kind, and supportive assistance whenever I encounter issues that I've created. He might be using Elephas too ;-) The program updates have kept pace with advancements in AI." - User on Capterra

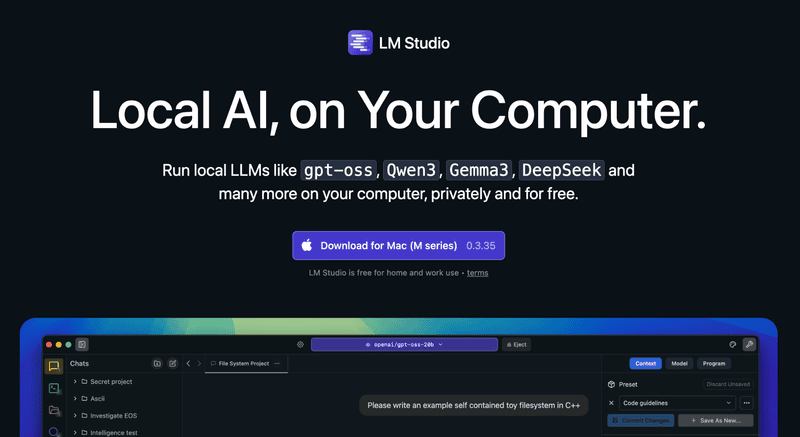

2. LM Studio

LM Studio is a desktop application that allows users to discover, download, and run local LLMs on their computer with a user-friendly graphical interface, eliminating the need for command-line operations or cloud dependencies. The platform provides a polished GUI for local AI that simplifies LLM interaction with a clean desktop interface requiring no CLI expertise. All features work completely offline without internet or recurring costs, preserving data privacy and ensuring regulatory compliance.

The platform supports access to over 1,000 open-source models including popular options for coding and multimodal tasks. Users can chat with local documents through retrieval-augmented generation capabilities while maintaining complete privacy.

The platform enables spinning up a local API server for connecting LM Studio-powered models to external applications. It includes SDKs in Python and TypeScript for seamless integration into custom workflows or desktop software, making it accessible for both beginners and developers seeking local AI solutions.

Key Features:

- 1,000+ Pre-configured Models: Access to an extensive library of open-source models including gpt-oss, Qwen3, Gemma3, DeepSeek, and many more with one-click downloads. The model library is constantly updated with the latest releases, making it easy to experiment with different AI capabilities without manual configuration or complex setup procedures.

- Local API Server: Built-in capability to spin up local API endpoints for integration with external applications and development workflows. This allows developers to create custom applications that leverage locally-running models while maintaining complete privacy and avoiding cloud API costs, and it is compatible with the OpenAI API format for easy migration.

- RAG Document Chat: Privacy-respecting retrieval-augmented generation allowing users to load and chat with local files without data leaving their machine. Support for multiple document formats enables intelligent question-answering over your personal documents, research papers, codebases, and knowledge bases while maintaining complete confidentiality.

- MLX Acceleration for Apple Silicon: Optimized support for M1/M2/M3 chips with the MLX framework providing faster inference and lower memory usage on Mac. This makes LM Studio generally use less memory and run faster on Apple Silicon compared to other local LLM runners, delivering near-cloud performance on modern Mac hardware.

- Developer SDKs: Python and TypeScript SDKs enabling seamless integration into custom applications and desktop software projects. Developers can programmatically control model loading, inference, and chat interactions, making it easy to build AI-powered features into existing applications without dealing with low-level model management.

Pricing: Free

Why is it better than Ollama?

- GUI-First Design: Polished graphical interface versus Ollama's CLI-first approach, making it significantly more accessible for non-technical users who don't want to work in the terminal

- MLX Optimization: Generally uses less memory and runs faster on Apple Silicon compared to Ollama due to dedicated MLX model support optimized specifically for Mac hardware

- Zero Configuration: Out-of-the-box experience with no command-line setup required versus Ollama's terminal-based workflow that requires learning CLI commands

- Visual Model Management: Intuitive model discovery and download interface with visual previews versus command-based model management that's harder for beginners

- Built-in RAG Features: Native document chat capabilities included versus requiring separate setup and configuration with Ollama

Customer Reviews

"LM Studio is absolutely beautiful User Interface and super easy to setup and start using" - User on Product Hunt

"LM Studio is now confusing and almost useless. System prompts are per chat, not system wide, and there are no prompt templates." - User quoted in Toksta reviews

3. GPT4All

GPT4All is a free, open-source desktop chatbot developed by Nomic AI that runs large language models completely offline on consumer hardware without requiring GPU acceleration or API calls. The platform provides a user-friendly desktop application for running LLMs entirely on your local machine with complete privacy and offline functionality.

It keeps all chat information and prompts exclusively on your device, operating without any API calls or cloud dependencies. GPT4All features LocalDocs, a built-in capability allowing users to chat privately with their documents including PDFs and text files without data leaving their device or requiring network connectivity.

The platform provides access to approximately 1,000 open-source language models from popular families like Llama and Mistral. GPT4All requires no GPU hardware and runs efficiently on major consumer hardware including Mac M-Series chips, AMD and NVIDIA GPUs. Users can adjust various chatbot parameters including temperature, batch size, and context length for customization to suit different use cases and performance requirements.

Key Features:

- LocalDocs RAG Integration: Built-in document chat feature allowing private querying of PDFs, text files, and other documents without data leaving your device. This native RAG system enables you to build searchable knowledge bases from your local files and get instant answers from your document collections while maintaining complete privacy and offline capability.

- 1,000+ Model Library: Extensive collection of downloadable open-source LLMs from popular model families including Llama, Mistral, and others. Easy model discovery and installation through the application interface makes experimenting with different AI capabilities straightforward, with detailed model information including parameter counts and recommended use cases.

- No GPU Required: Optimized to run on CPU-only systems, making it accessible on any Mac without dedicated graphics requirements. This democratizes access to local AI by removing the hardware barrier that other platforms require, though GPU acceleration is supported when available for faster performance on capable systems.

- Complete Offline Operation: Functions entirely without internet connectivity once models are downloaded, ensuring full privacy for sensitive work. Perfect for air-gapped systems, remote locations with poor connectivity, or anyone prioritizing data sovereignty, with no phone-home behavior or telemetry collection after initial setup.

- Adjustable Parameters: Granular control over chatbot settings including temperature, batch size, context length, and other inference parameters. Advanced users can fine-tune model behavior for specific tasks, optimizing the balance between speed, creativity, and accuracy based on their particular requirements and hardware capabilities.
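To make the temperature setting less abstract: in LLM sampling, temperature is conventionally applied by dividing the model's raw token scores (logits) by the temperature before converting them to probabilities. The sketch below shows that general technique in plain Python. It is not GPT4All's code, and the logits are made-up numbers for three candidate tokens.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token (more predictable output), while higher temperatures flatten the distribution (more varied output). That is the speed-versus-creativity-versus-accuracy trade-off the setting exposes.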

Pricing: Free

Why is it better than Ollama?

- GUI Desktop Application: User-friendly graphical interface versus Ollama's command-line focused approach, making it much more accessible to non-technical users who prefer visual interfaces

- Built-in Document Chat: LocalDocs RAG feature is native and integrated versus requiring separate setup with Ollama through additional tools and configuration

- No GPU Requirement: Explicitly optimized for CPU-only operation versus Ollama's GPU preference when available, making it work better on basic Mac hardware

- Lower Technical Barrier: Designed specifically for privacy-focused non-technical users versus Ollama's developer audience that expects command-line comfort

- Flexible Toolbox Approach: Tries to be accessible to everyone with desktop app and SDK versus Ollama's opinionated runtime focused primarily on developers

Customer Reviews

"GPT4ALL has simplicity, stability (on Linux) and a certain je ne sais quoi that make it difficult to replace" - User on GitHub Discussions

"Very buggy. LocalDocs doesn't work on Mac" - Gary Pettigrew on Product Hunt

4. Jan.ai

Jan is an open-source ChatGPT alternative that runs completely offline, allowing users to run open-source AI models locally on their machine or connect to cloud models like GPT and Claude through a unified interface. The platform enables running large language models directly on your local machine with complete privacy through a ChatGPT-like cross-platform desktop interface.

Jan emphasizes a local-first approach while offering flexibility to integrate with various online AI providers when needed. It supports a diverse range of open-source models and provides real-time web search capabilities within conversations. Jan includes an OpenAI-compatible API for easy integration into existing applications and workflows.

For Mac users, Metal acceleration is enabled by default on M1, M2, and M3 chips, providing significantly faster performance than Intel-based Macs which operate on CPU only. The platform requires no licensing fees and operates with minimal setup, storing everything locally for complete data control and privacy while offering the option to leverage cloud models when additional capabilities are needed.

Key Features:

- Hybrid Local/Cloud Support: Flexibility to run open-source models locally or connect to cloud providers (OpenAI, Claude) through one unified interface. This hybrid approach lets you maintain privacy for sensitive tasks with local models while accessing cutting-edge capabilities from cloud providers when needed, all within the same application without switching tools.

- Metal GPU Acceleration: Default GPU acceleration on Apple Silicon (M1/M2/M3) providing faster inference than CPU-only operation. Jan takes full advantage of Mac's Metal graphics framework to deliver performance approaching cloud speeds, making complex reasoning tasks and long-form content generation significantly more responsive on modern Mac hardware.

- OpenAI-Compatible API: Local API server with OpenAI-compatible endpoints for seamless integration into existing applications and scripts. Developers can migrate existing code from OpenAI to local inference by simply changing the API endpoint, maintaining the same code structure and function calls while gaining privacy and cost benefits.

- Real-time Web Search: Built-in web search capabilities during conversations for accessing current information while maintaining local processing. This bridges the knowledge cutoff limitation of local models by allowing them to access up-to-date information from the internet when needed, combining the privacy of local AI with the freshness of web data.

- Cross-Platform Native Apps: Native desktop applications for Windows, macOS, and Linux with consistent experience across platforms. The application feels truly native on each operating system with proper system integration, not just a web wrapper, ensuring responsive performance and proper integration with OS features like notifications and file handling.
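The "just change the endpoint" migration mentioned in the feature list can be sketched as follows. This is a minimal illustration using the OpenAI-style `/chat/completions` request shape; the local base URL and port are assumptions (check the address shown in your app's local server settings), and the helper names and any model names you pass in are our own placeholders.

```python
import json
import urllib.request

OPENAI_BASE = "https://api.openai.com/v1"
# Assumption: address of the local OpenAI-compatible server; verify in your app.
LOCAL_BASE = "http://localhost:1337/v1"


def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: str = "not-needed") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request against any base URL."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(base_url: str, model: str, user_message: str,
         api_key: str = "not-needed", timeout: float = 120.0) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(base_url, model, user_message, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

Switching from cloud to local inference is then a matter of passing `LOCAL_BASE` instead of `OPENAI_BASE` and naming a model that is loaded locally; the request body and response parsing stay the same.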

Pricing: Free

Why is it better than Ollama?

- All-in-One Desktop Experience: Includes UI, backend, and model hub in one application versus Ollama's engine-only approach requiring separate interfaces like Open WebUI or custom frontends

- Hybrid Architecture: Seamlessly switches between local and cloud models versus Ollama's local-only focus, giving flexibility to use GPT-4 or Claude when needed

- Built-in Web Search: Native real-time search integration versus requiring external tools with Ollama to access current information during conversations

- ChatGPT-like Interface: Familiar conversational UI out-of-the-box versus Ollama's CLI requiring separate frontends for comfortable chat experiences

- Balanced Resource Usage: Lighter than some GUI alternatives while offering far more interface than Ollama's minimal runtime, striking a balance between features and efficiency

Customer Reviews

"Just install it, point it to a model, and go. You can easily get a local OpenAI compatible API by clicking the 'start server' button" - User on Hacker News

"One deal breaker for some users was the inability to talk to multiple models at once, with the app blocking until the first query is done, and Tauri apps feeling pretty clunky on Linux" - User on Hacker News

5. AnythingLLM

AnythingLLM is an all-in-one desktop and Docker AI application with built-in RAG (Retrieval-Augmented Generation), AI agents, no-code agent builder, and extensive document processing capabilities for running local LLMs with enterprise-grade features. The platform provides a comprehensive single-player application for Mac, Windows, and Linux with local LLMs, RAG, and AI agents requiring minimal configuration while ensuring complete privacy.

Everything from models to documents and chats is stored locally on your desktop by default. The platform works with both text-only and multi-modal LLMs in a single interface, handling images and audio seamlessly. It supports PDFs, Word documents, CSV files, codebases, and can import documents from online locations.

AnythingLLM comes with sensible, locally-running defaults for LLM, embedder, vector database, and storage. The 2025 version includes Model Context Protocol support for Docker deployments, enabling interoperability with external toolchains. No signup required as it operates entirely as local software without SaaS dependencies, perfect for teams and enterprises needing sophisticated document-based AI capabilities.

Key Features:

- Built-in RAG System: Native retrieval-augmented generation with support for PDFs, Word docs, CSV, codebases, and online document imports with a local vector database. Advanced document indexing enables semantic search across massive knowledge bases, automatically chunking and embedding documents for optimal retrieval while maintaining complete privacy with everything stored locally.

- AI Agents with No-Code Builder: Agent skills including file generation, chart creation, web search (DuckDuckGo/Google/Bing), and SQL connectors (MySQL/Postgres/SQL Server). Build complex AI workflows without coding by chaining together pre-built agent capabilities, automating research, data analysis, and report generation tasks that would normally require custom development.

- Multi-Modal Support: Handles text, images, and audio in a single interface with support for both text-only and multi-modal LLMs. Process screenshots, diagrams, photos, and voice recordings alongside traditional text documents, enabling richer AI interactions and more comprehensive document understanding without switching between specialized tools.

- Model Context Protocol (MCP): 2025 compatibility with MCP for interoperability with external toolchains and development ecosystems. This emerging standard enables AnythingLLM to work seamlessly with other AI tools and services, future-proofing your investment and allowing integration with the broader AI tooling landscape as it evolves.

- Flexible Backend Support: Works with Ollama, OpenAI, LM Studio, and other backends with multi-user permissions and team collaboration features. Switch between AI providers without changing your workflows, leverage different models for different tasks, and manage team access with granular permissions for different workspaces and knowledge bases.
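The chunk, embed, and retrieve steps behind a RAG system can be illustrated with a toy sketch. This is not AnythingLLM's implementation: real systems use a neural embedding model and a vector database, whereas the version below substitutes simple word counts and cosine similarity so it runs with the standard library alone. The sample documents and function names are made up.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_text(text: str, max_words: int = 40) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a neural embedder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Combine retrieved context and the question into one prompt for an LLM."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical documents for illustration.
docs = [
    "Invoices are due within 30 days of issue.",
    "The office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
]
question = "When are invoices due?"
print(build_prompt(question, retrieve(question, docs)))
```

The resulting prompt would then be sent to whichever backend is configured. Because only the retrieved chunks are passed along, the model can answer questions over a document set far larger than its context window.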

Pricing: Free (Desktop) / $25/month (Cloud)

Why is it better than Ollama?

- Enterprise RAG Features: Sophisticated document processing, indexing, and retrieval versus Ollama's basic model-only focus without built-in knowledge management

- Built-in AI Agents: No-code agent builder with multiple skills (web search, SQL, charts) versus Ollama requiring external agent frameworks like LangChain

- Multi-User Collaboration: Team features, permissions, and workspace management versus Ollama's single-user design without collaboration capabilities

- All-in-One Platform: Complete solution with UI, RAG, agents, and backends versus Ollama's engine-only architecture requiring multiple tools

- Business-Ready Features: Cloud hosting options, enterprise support, and managed instances versus Ollama's self-hosted only approach

Customer Reviews

"Switched to GPT4All to avoid monthly AI subscription costs that were eating into project profits. The writing assistance is solid for drafts and editing... Saved over $300 this year while maintaining decent output quality." - Freelance writer quoted in Alphr reviews

"while a template is working on a prompt, selecting another chat made with another template only to read that chat, processing stops" - robang74 on GitHub Discussions

Quick Comparison Table:

| Tool | Starting Price | Best For | Key Strength | Main Weakness |
|---|---|---|---|---|
| Ollama | Free | Privacy-focused developers | Open-source, model variety | No productivity features, CLI-only |
| Elephas | $9.99/month | Mac users wanting complete AI productivity | System-wide integration + automation | Mac/iOS only, subscription cost |
| LM Studio | Free | Beginners wanting GUI experience | Beautiful interface, MLX optimization | Less scriptable than Ollama |
| GPT4All | Free | CPU-only systems, offline use | No GPU required, LocalDocs | Buggy on Mac, limited features |
| Jan.ai | Free | Users wanting local + cloud flexibility | Hybrid approach, web search | Can't query multiple models simultaneously |
| AnythingLLM | Free / $25+ | Businesses needing RAG + agents | Enterprise features, multi-modal | More complex than simpler alternatives |

Conclusion

Ollama delivers free, open-source local AI for privacy-conscious developers and researchers at zero ongoing cost. The tool shines when running offline models with complete data sovereignty but falls short on user-friendly interfaces and productivity features.

Compared to alternatives, it offers maximum model flexibility and community support, though it lacks the graphical interfaces and workflow automation that tools like Elephas and LM Studio provide. Users looking for command-line control and technical experimentation will find excellent model variety and zero licensing costs, while those needing integrated productivity should consider alternatives with native Mac apps.

The best value comes from using Ollama as a backend for tools like Elephas, which add the productivity layer that knowledge workers need for daily work. By our estimate, Ollama on its own meets about 60% of typical Mac user requirements. For a complete AI productivity solution, consider Elephas, which can use Ollama's local models while providing system-wide integration, workflow automation, and enterprise-grade knowledge management features that turn AI from a command-line experiment into a true productivity multiplier.
