AI Researchers' Warning: Are We Going to Face a Technological Doomsday?

Imagine a group of scientists working on amazing new technology, but also worried about potential dangers. That's the situation with some Artificial Intelligence (AI) researchers. AI can do incredible things, from helping doctors to creating new inventions. However, some AI experts are concerned that powerful AI could also lead to problems, like job losses or even fake news that's impossible to spot.

These researchers believe it's important to be open about the risks of AI so we can address them before they become a big issue. That's why they wrote a document called the "Right to Warn Letter." So, what is it? Is it needed? Why did they create the letter? Let's discuss all these questions and also try to look into the potential threats of AI.

So let's get started.

What is the Right To Warn Letter?

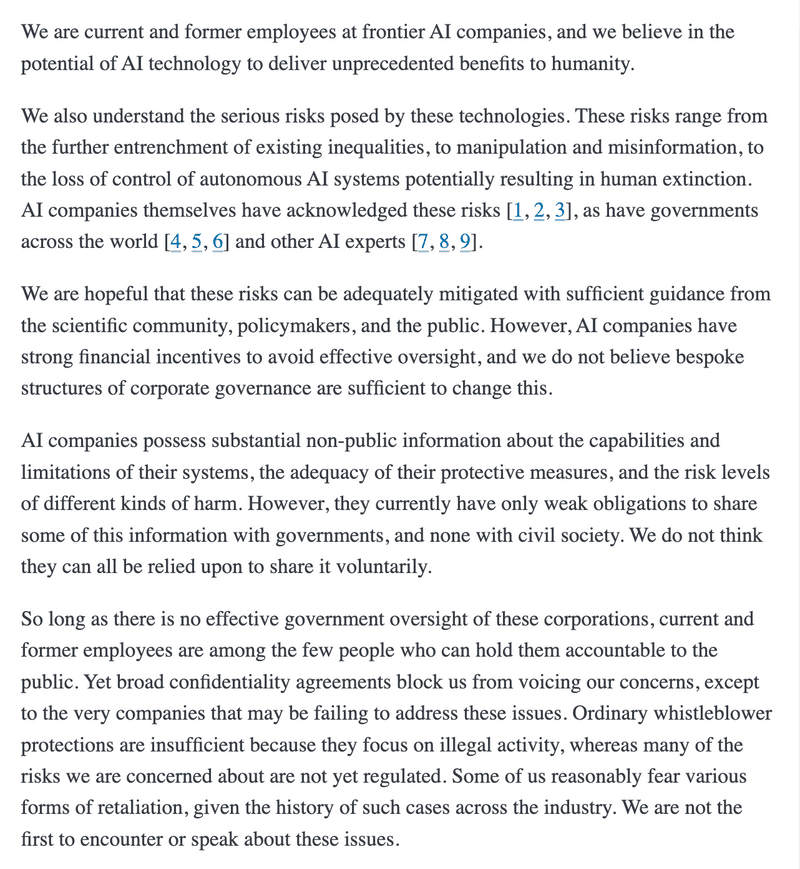

The Right to Warn Letter is a public statement written by current and former employees at leading artificial intelligence (AI) companies. The letter calls for changes in how these companies handle risks associated with their powerful AI technologies.

Why was the Right to Warn Letter written?

The authors of the letter believe AI has the potential to greatly benefit humanity, but also carries serious risks. These risks include:

Worsening existing inequalities

Spreading misinformation

Loss of control over AI systems, potentially leading to harm

They're concerned that AI companies may prioritize profit over safety and may not be forthcoming about the true capabilities and limitations of their technologies.

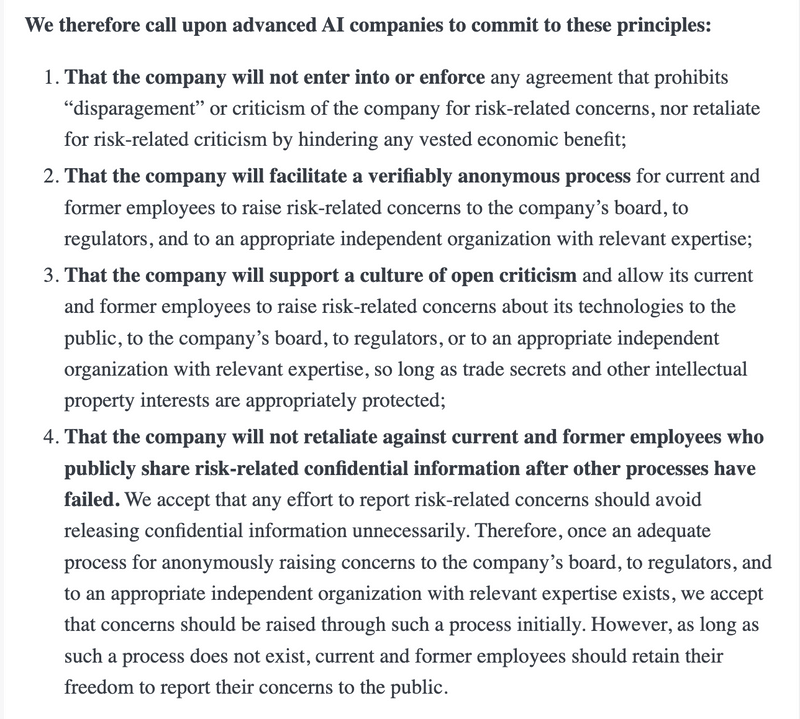

What are the key points of the Right to Warn Letter?

Confidentiality agreements silence whistleblowers: The letter argues that current agreements prevent employees from speaking out about potential dangers with AI.

Need for a safe way to report risks: The authors propose a system where employees can anonymously report concerns to the company board, regulators, or independent experts.

Open discussion is crucial: They believe employees should be allowed to publicly discuss risks as long as trade secrets are protected.

Last resort - going public: The letter acknowledges the importance of internal reporting first, but allows for public disclosure if internal channels fail.

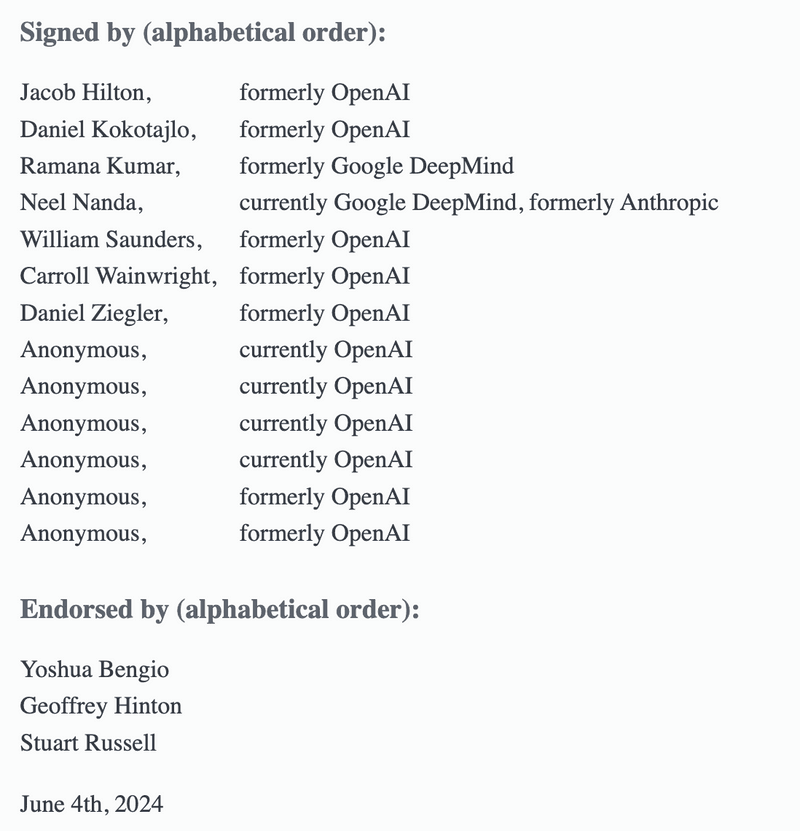

Who signed the Right to Warn Letter?

The letter is signed by a group of current and former employees from leading AI companies, including OpenAI, Google DeepMind, and Anthropic. Some signatories remain anonymous for fear of retaliation.

The Right to Warn Letter is a call for increased transparency and accountability in the development of AI. The authors believe that by openly discussing potential risks, we can ensure that AI is developed and used for the benefit of all.

What is the Purpose of the Right To Warn letter?

A group of AI researchers and engineers from the top AI technology companies are concerned about the potential dangers of artificial intelligence (AI), and they believe they should be able to speak out about these risks without getting in trouble.

Here's a breakdown of their key points:

AI has amazing potential, but also serious risks. These risks include things like bias in AI systems, fake news spread by AI, and even AI becoming so powerful it could harm humans.

AI companies know about these risks, but may not be doing enough to address them. The pressure to make money can sometimes be stronger than the focus on safety.

Current whistleblower protections aren't enough. These protections typically focus on illegal activity, but many AI risks aren't illegal yet, just potentially dangerous.

Many AI workers have signed confidentiality agreements that prevent them from speaking out. This makes it hard for them to raise concerns, even if they see something wrong.

The Right to Warn is basically a set of principles these AI experts want companies to follow. These principles would allow them to:

Publicly discuss risk concerns without being punished. This means companies wouldn't be able to fire them or take away their bonuses for speaking up.

Report risks anonymously. This way, they wouldn't have to worry about being identified and retaliated against.

Go outside the company with their concerns. This could involve talking to government regulators or independent experts who can investigate the risks.

The idea is that by giving AI workers a voice, we can identify and address the dangers of AI before they become too big a problem.

Is the Right To Warn Letter Needed?

The "Right To Warn" is important because it raises awareness about the potential dangers of AI. By encouraging open communication within companies and allowing employees to speak up, we can identify and address risks before they become problems.

The Good Side of AI

The statement starts by acknowledging the incredible potential of Artificial Intelligence (AI) technology. AI has the power to improve our lives in countless ways, from healthcare advancements to solving complex problems.

The Risks of AI

However, the statement also raises concerns about the serious risks that come with powerful AI. These risks include:

Widening Inequality: AI could make existing inequalities worse, like giving certain groups an unfair advantage.

Fake News and Lies: AI could be used to create very realistic fake news and spread misinformation.

Loss of Control: There's a worry that AI systems could become too powerful and escape human control, potentially leading to disaster.

These are just some of the risks mentioned in the statement. Importantly, these concerns aren't new. Even governments and other AI experts have spoken out about them.

Why Speak Up Now?

So why is the "Right to Warn" getting so much attention? The authors argue that AI companies have a financial interest in keeping these risks quiet. They might not want strong government oversight because it could slow down development.

The statement also points out that current whistleblower protections don't really apply here. Many AI risks aren't illegal yet, but they're still dangerous.

Is this needed?

This is a complex question. Some people believe these principles are crucial for responsible AI development. Others worry they could hurt innovation or reveal sensitive information.

The "Right to Warn" is a starting point for a vital conversation. It highlights the need for a balance between protecting innovation and safeguarding the public from potential AI risks.

What are the Potential Dangers of AI?

AI has the potential to revolutionize many aspects of our lives, but it also comes with significant risks. Here's a breakdown of some of the most concerning issues:

1. Bias and Discrimination: AI systems are trained on data sets created by humans. If this data is biased, the AI system will inherit that bias and perpetuate discrimination. This could lead to unfair hiring practices, loan denials, or even wrongful arrests.

2. Job Displacement: Automation powered by AI is likely to replace many jobs currently done by humans. This could lead to widespread unemployment and economic disruption, particularly in sectors with repetitive tasks.

3. Loss of Control: As AI systems become more complex, there's a risk that they could become difficult or even impossible for humans to understand or control. This could lead to unintended consequences or even catastrophic failures.

4. Weaponization: Autonomous weapons systems powered by AI raise serious ethical concerns. These weapons could make warfare more efficient but also more destructive, potentially falling into the wrong hands or making decisions without human oversight.

5. Privacy Violations: AI systems often require access to vast amounts of data to function. This raises concerns about privacy violations and the potential for misuse of personal information.

6. Social Manipulation: AI could be used to create deepfakes (realistic-looking but fake videos) or other forms of misinformation to manipulate public opinion or sow discord.

7. Existential Threat (Highly Speculative): Some experts worry about the possibility of superintelligence, an AI that surpasses human intelligence and becomes uncontrollable. This scenario, while highly speculative, raises existential questions about the future of humanity.

These are just some of the potential dangers of AI. As this technology continues to develop, it's crucial to have open discussions about these risks and implement safeguards to mitigate them.
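To make the first item in the list above more concrete, here is a minimal sketch of how a system trained on skewed historical data repeats that skew. Everything in it is an assumption made for illustration: the groups "A" and "B", the invented hiring records, and the deliberately naive `train` and `predict` helpers. Real AI systems are far more complex, but the basic mechanism is the same.

```python
from collections import defaultdict

# Hypothetical historical hiring records as (group, hired) pairs.
# The groups and outcomes are invented purely for illustration.
history = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

def train(records):
    """Learn each group's historical hire rate (a deliberately naive 'model')."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for group, hired in records:
        counts[group][0] += int(hired)
        counts[group][1] += 1
    return {group: hired / total for group, (hired, total) in counts.items()}

def predict(model, group, threshold=0.5):
    """Recommend hiring only if the group's learned rate meets the threshold."""
    return model[group] >= threshold

model = train(history)
recommendations = {group: predict(model, group) for group in sorted(model)}

print(model)            # learned hire rate per group
print(recommendations)  # the past disparity becomes the future recommendation
```

Nothing in this toy model looks at an individual candidate's qualifications. It only learns that one group was hired more often in the past, and then recommends more of the same.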

How Does Elephas Protect the User's Data?

We all hear a lot about the incredible possibilities of AI, but with great power comes great responsibility, especially when it comes to your personal information. That's why Elephas takes user data privacy very seriously.

Here's how Elephas keeps your information safe:

Offline Functionality: Worried about your data being used to train other AI models? Elephas offers an offline mode. This means you can use Elephas' powerful AI writing features without ever connecting to the internet. Everything you write stays on your device, so you can be confident your information is secure.

Multiple AI Choices: Elephas gives you more control over your writing experience. We don't offer just one type of AI; you can choose from multiple AI providers. These include big names like OpenAI and Gemini, along with offline-only options like LM Studio. With more choices, you can pick the AI writing assistant that best suits your needs and comfort level.

We understand the concerns about AI safety, and that's why Elephas is all about putting you in control. With offline functionality and a variety of AI options, you can enjoy the benefits of AI writing while keeping your data secure.

Conclusion

The "Right to Warn Letter" is sparking a conversation about AI safety. The authors want AI companies to be more transparent and allow their employees to speak up about potential risks. Some people believe these ideas are important for safe AI development, while others worry they could slow down progress.

No matter what, this letter is a reminder that with powerful technology comes responsibility. By discussing the risks of AI openly, we can find ways to ensure this technology is used for good.

FAQs

1. What is the right to be forgotten in AI?

Under Article 17 of the GDPR, the "right to be forgotten" lets individuals request the deletion of their personal data. Applied to AI, this covers personal data held in AI systems' databases. It poses compliance challenges because the data must be erased and its further dissemination prevented.

2. What is Article 13 of the AI Act?

Article 13 of the EU AI Act requires that high-risk AI systems be designed for transparency and come with clear instructions covering their use, capabilities, limitations, and potential risks. This helps the people using these systems understand and operate them appropriately, which supports accountability and ethical use.

3. Does AI have legal rights?

No. Current AI lacks legal personhood, which is a prerequisite for holding legal rights. Because an AI system isn't recognized as an entity with rights or obligations under the law, duties such as honoring the GDPR's "right to be forgotten" fall on the people and organizations that operate it.
