How to Install and Run DeepSeek R1 Locally on Mac for Free (2026) - The Complete Guide

DeepSeek has made some big moves in the AI world, and one of its standout features is that its models can be downloaded and run directly on your own computer. Today, we're going to dive deep into this option and everything you need to know about it.

In this article, we’ll cover:

What is DeepSeek?

Advantages and disadvantages of open-source models like DeepSeek

Step-by-step guide to installing and running DeepSeek R1 offline on Mac

How to use DeepSeek on the web

Setting up Open WebUI for a better experience

Enhancing DeepSeek with Elephas for more advanced features

A complete breakdown to help you choose the best way to use DeepSeek

By the end of this guide, you'll have everything you need to install, run, and maximize DeepSeek’s potential. Let’s get started!

What is DeepSeek?

DeepSeek is a powerful new LLM that brings the advanced capabilities of paid models like ChatGPT to users at no cost. The full DeepSeek R1 model has 671 billion parameters, making it one of the largest freely available language models today. What sets DeepSeek apart is that it was reportedly trained for about $6 million, far less than similar AI models.

The platform offers several versions of its model to suit different needs. These include smaller versions based on Qwen and Llama architectures, ranging from 1.5B to 70B parameters. Each model specialises in specific tasks, with some focusing on mathematics while others excel at general knowledge and reasoning.

DeepSeek shows impressive performance across various tasks, particularly in mathematics, coding, and language processing. Its step-by-step reasoning approach helps it tackle complex problems effectively while using less computer memory than similar AI models.

Advantages of Open-Source Models like DeepSeek:

Free access for everyone

It can be run locally without an internet connection

Lower operating costs compared to paid alternatives

The community can improve and customize the model

Transparent technology that builds trust

Disadvantages:

May require technical knowledge to set up locally

Resource-intensive for personal computers

How to download DeepSeek?

This guide will walk you through the complete process of installing DeepSeek on your computer. The process works on Windows, Mac, or Linux systems; the steps below focus on Mac. You'll just need to be comfortable using a terminal window (command prompt).

Install Ollama

Ollama is the foundation tool that helps you run large language models locally.

Here's how to install it:

Visit the Ollama website: ollama.com

Click the download button for your operating system (Windows, Mac, or Linux)

Mac Users:

Open Finder and go to Downloads

Double-click the downloaded Ollama.zip file

Drag the Ollama application to your Applications folder

Double-click Ollama in Applications to start

Next, give Ollama permission to install its command-line tool. The final screen then suggests running your first model with the command ollama run llama3.2. Just click Finish; don't run this command, as it downloads the Llama model rather than DeepSeek.

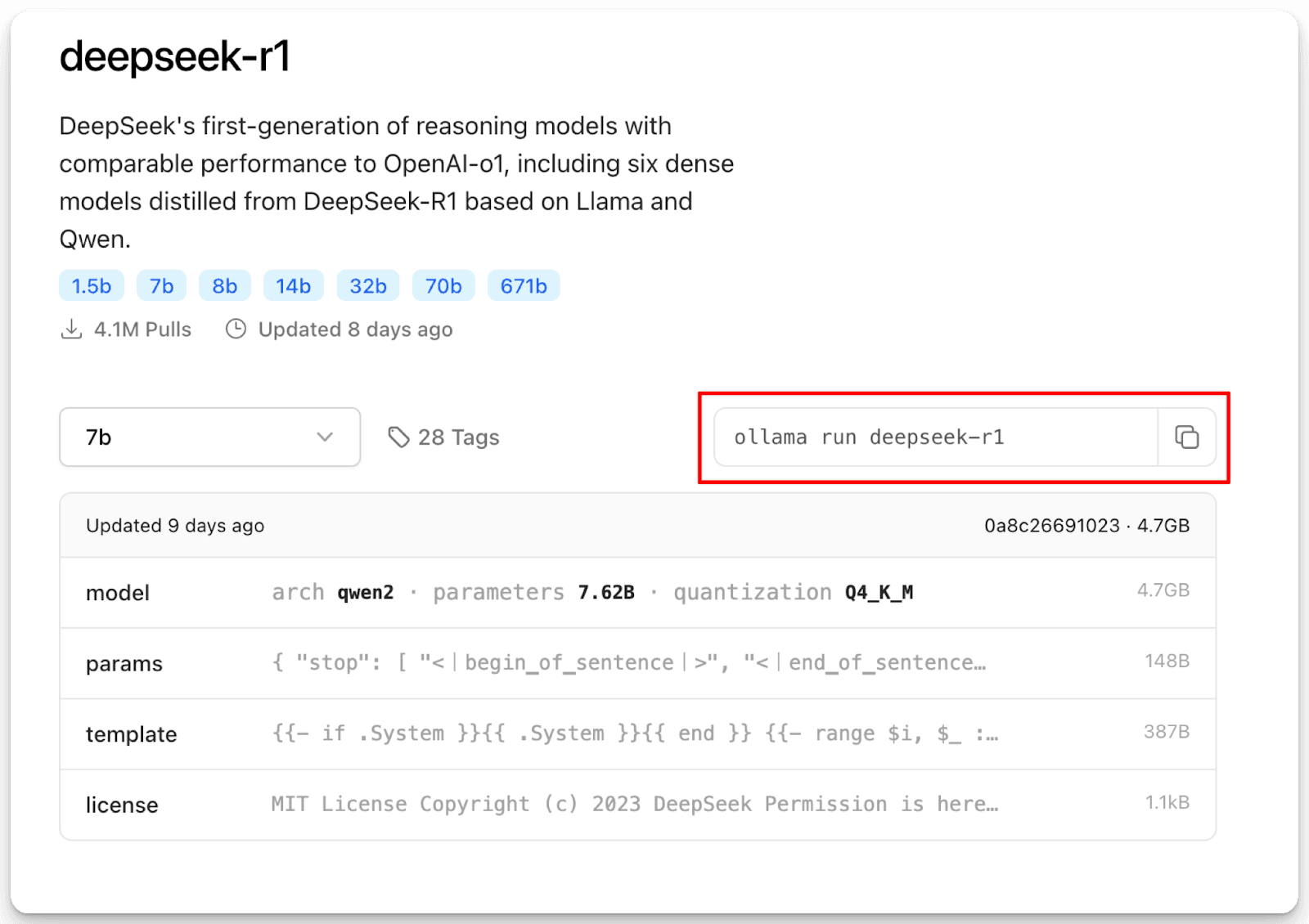

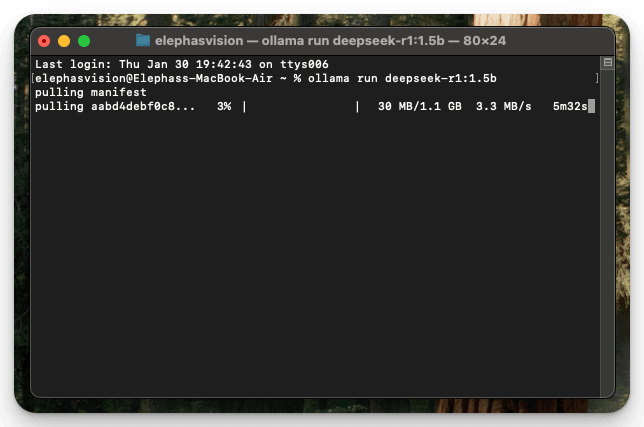

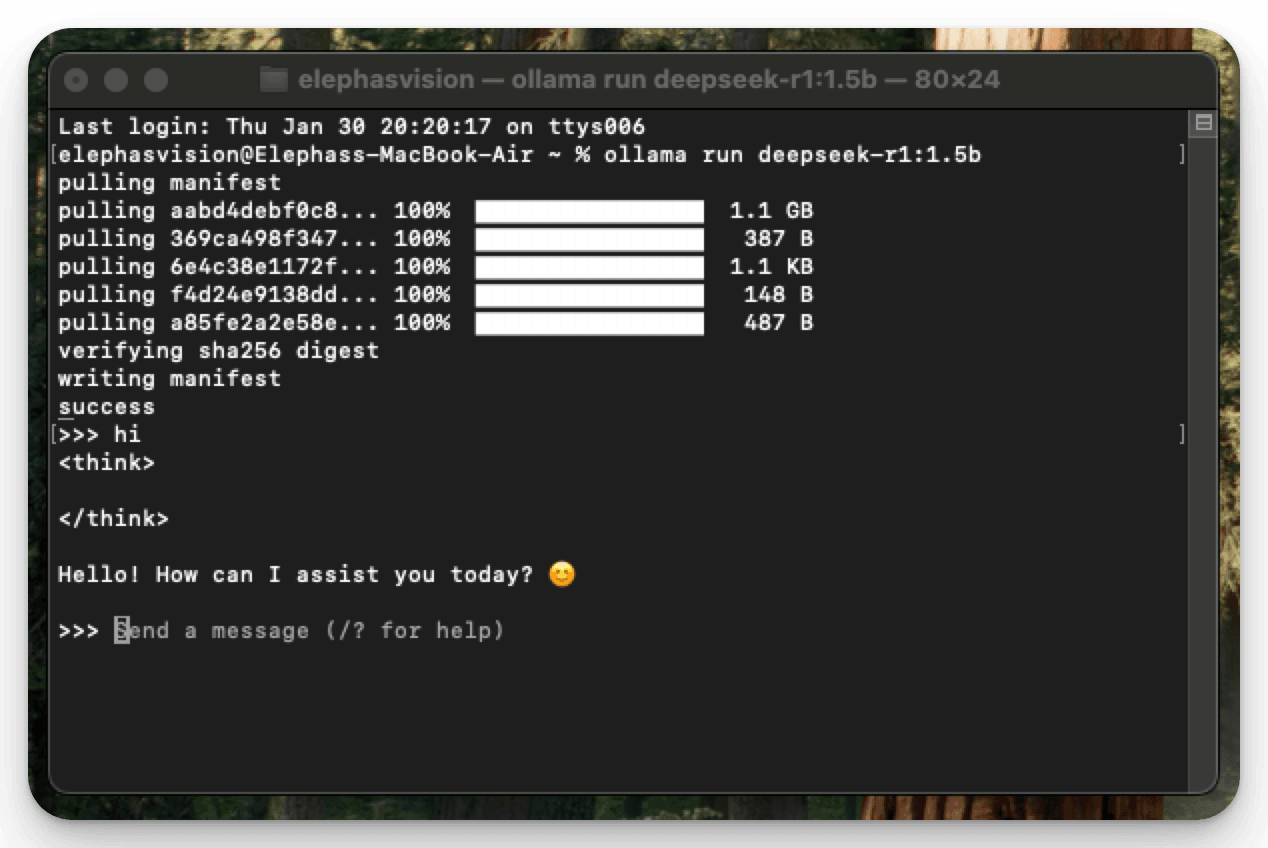

Go back to ollama.com, click Models, and select the DeepSeek model. Then copy the command shown on the page and run it in your Mac terminal.

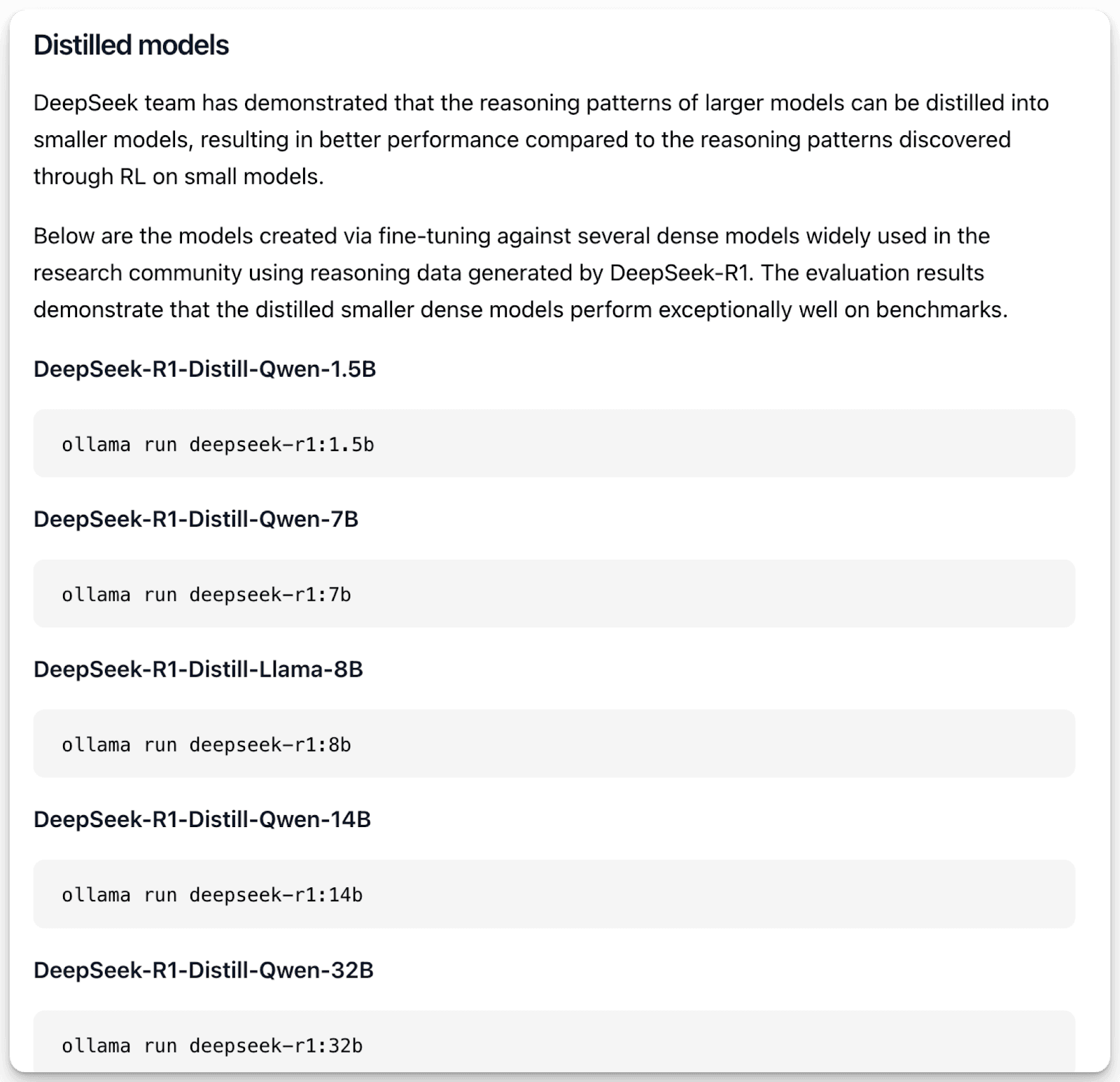

Make sure to check your system's capabilities before installing the model, as most personal computers cannot run the largest models. If you want, you can run one of the distilled DeepSeek models instead: scroll down on the same page, copy its command, and paste it into the terminal. These distilled models are much smaller and keep much of the reasoning ability of the full model, though they are not as powerful.
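To make the "check your system" step more concrete, here is a rough sizing sketch. It assumes the weights are stored at about 4 bits each and adds a guessed 20% allowance for runtime overhead; both numbers, the function names, and the 4 GB reserve are illustrative assumptions, not figures from Ollama or DeepSeek. Treat the results as ballpark estimates only.

```python
# Rough sizing sketch. Assumptions: about 4 bits per weight, plus a guessed
# 20% allowance for runtime overhead. These are estimates, not measurements.

# Parameter counts (in billions) of the distilled deepseek-r1 models.
DISTILLED_SIZES_B = [1.5, 7, 8, 14, 32, 70]


def estimated_memory_gb(params_billion, bits_per_weight=4, overhead=1.2):
    """Approximate memory needed to hold the model, in gigabytes."""
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def largest_model_that_fits(ram_gb, reserve_gb=4):
    """Return the biggest size (in billions) whose estimate fits in RAM.

    reserve_gb is a hypothetical amount left free for macOS and other apps.
    Returns None when no model fits.
    """
    budget = ram_gb - reserve_gb
    fitting = [s for s in DISTILLED_SIZES_B if estimated_memory_gb(s) <= budget]
    return max(fitting) if fitting else None


for size in DISTILLED_SIZES_B:
    print(f"deepseek-r1:{size:g}b  ~{estimated_memory_gb(size):.1f} GB")
```

Under these assumptions, calling largest_model_that_fits(16) suggests the 14B model for a Mac with 16 GB of memory, while an 8 GB machine is better served by the 1.5B model.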

That's it! Once the model is downloaded, you are good to go. You can chat with the DeepSeek model from the terminal itself.

Whenever you want to chat with the model, just open up your terminal and type in

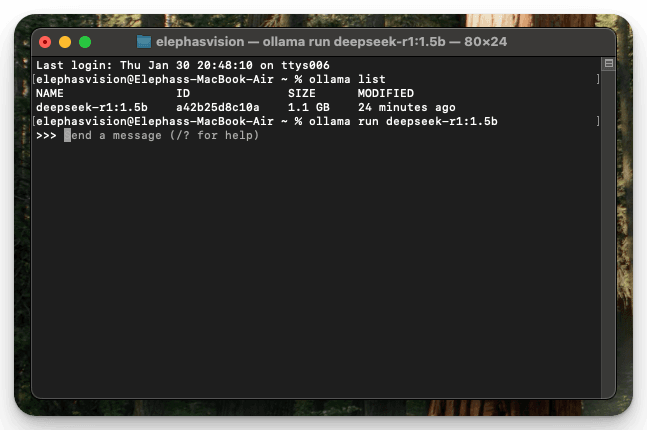

“Ollama run <model_name>” which is in our case “Ollama run deepseek-r1:1.5b“

Tip: Running the “ollama list” command in the terminal shows all the models you have downloaded.
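Besides the terminal chat, Ollama also exposes a local HTTP API, so you can talk to the downloaded model from a script. The sketch below assumes Ollama is running on its default address, http://localhost:11434, and that the deepseek-r1:1.5b model has already been downloaded; the helper names are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port, 11434.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt, model="deepseek-r1:1.5b"):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one complete JSON reply instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="deepseek-r1:1.5b"):
    """Send the prompt to the local model and return its reply as text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


def model_names(tags_json):
    """Extract model names from the JSON text returned by GET /api/tags."""
    return [m["name"] for m in json.loads(tags_json).get("models", [])]
```

With Ollama running, print(ask("Explain recursion in one sentence.")) should print the model's answer. The model_names helper is the scripted counterpart of the list command: feed it the body of a GET request to /api/tags.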

How to use the DeepSeek Model on the Web?

DeepSeek is great for running offline and protecting your privacy when used locally. However, like other offline AI models, it has limitations: its knowledge stops at its training data, and it cannot look anything up online. Plus, most regular computers struggle to run the full model because of its size and hardware requirements.

But it's a different story with the web version of DeepSeek. Since everything runs on their powerful servers, you get much better performance and features. The web version can handle longer conversations, access current information, and process complex tasks more efficiently.

So, if you want to skip the complicated process of downloading and setting up DeepSeek locally, or if you want to use features that need internet access, there's a simpler way. You can directly use DeepSeek through their official website.

Just head over to deepseek.com to access their full-featured web version.

Here, you can chat with their most powerful 671B parameter model completely free. The web version gives you access to all of DeepSeek's capabilities, including advanced mathematical problem-solving, coding assistance, and multilingual support. The best part is that you don't need to worry about your computer's specifications or technical setup - everything just works right in your browser.

Using the web version, you get the full power of DeepSeek's technology without any of the technical hassles of local installation, making it perfect for both casual users and professionals who need reliable AI assistance.

But you may need to compromise on privacy and offline running of the model.

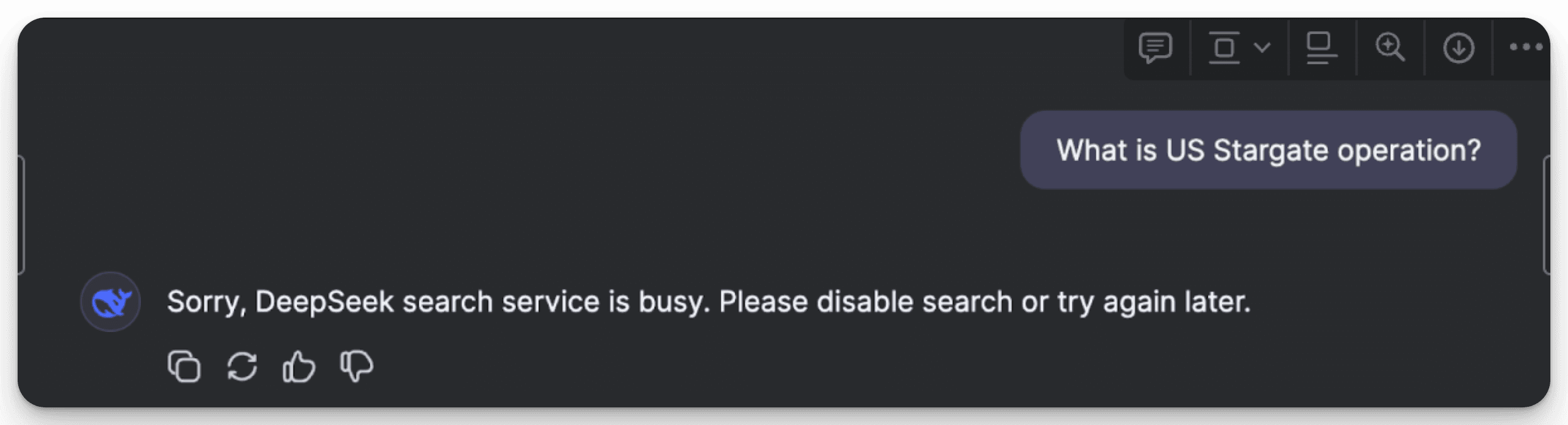

There is also a big catch: DeepSeek is one of the most popular models, so the web version often becomes slow or unavailable under heavy traffic. At the time of writing, the web search feature in DeepSeek has also been disabled.

But there is a workaround: what if you could use a local DeepSeek model with a file upload feature and a ChatGPT-style web interface? To get this, we are going to use Open WebUI.

Transform DeepSeek Local: Your Complete WebUI Setup Guide

Open WebUI gives any local LLM a ChatGPT-style web interface, along with file upload and voice input. Setting it up is simple, and we will walk you through the entire process.

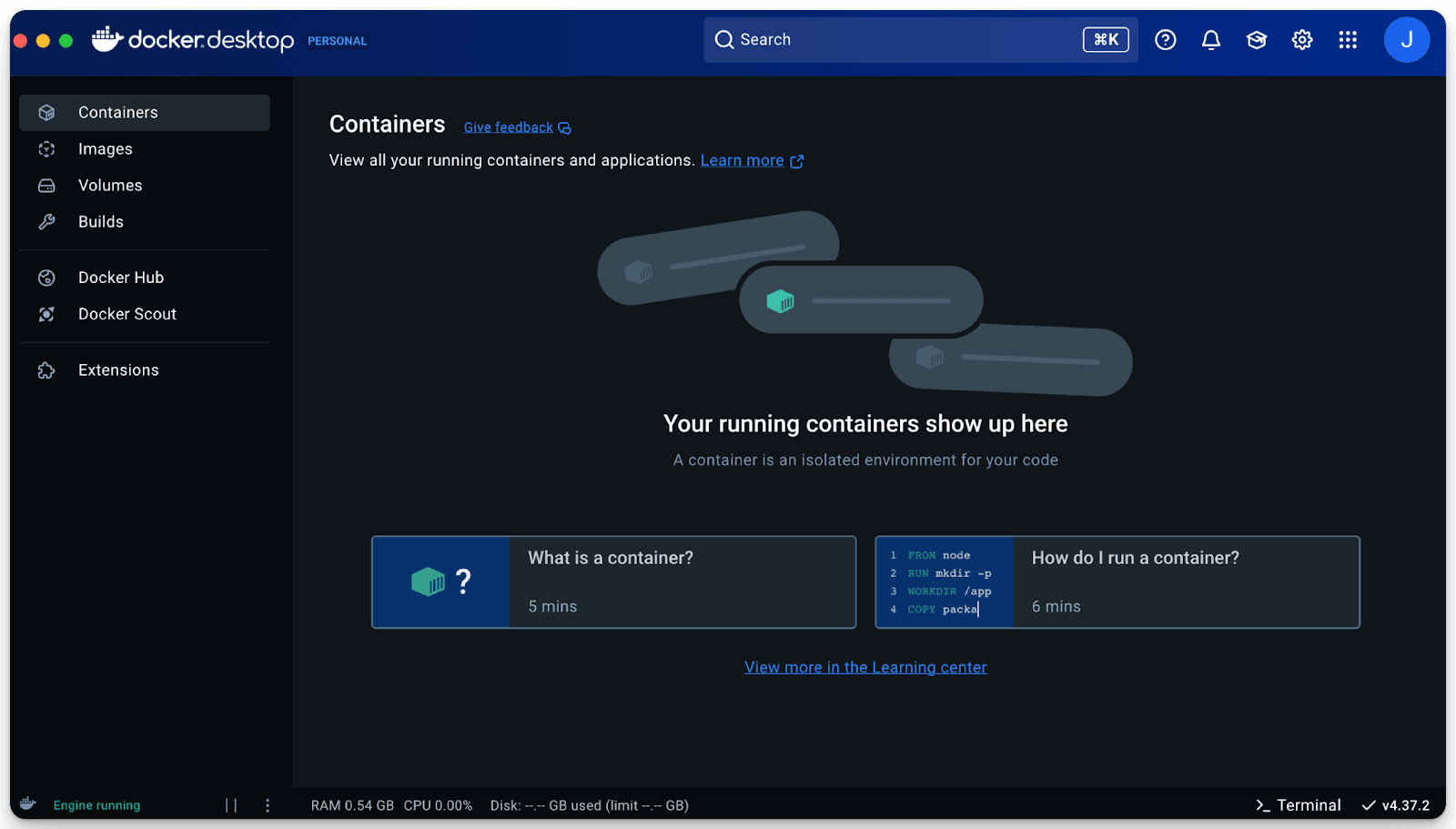

Open WebUI runs in Docker, so install Docker first. Then set it up by granting the permissions it asks for and signing in. After that, you will see the following dashboard.

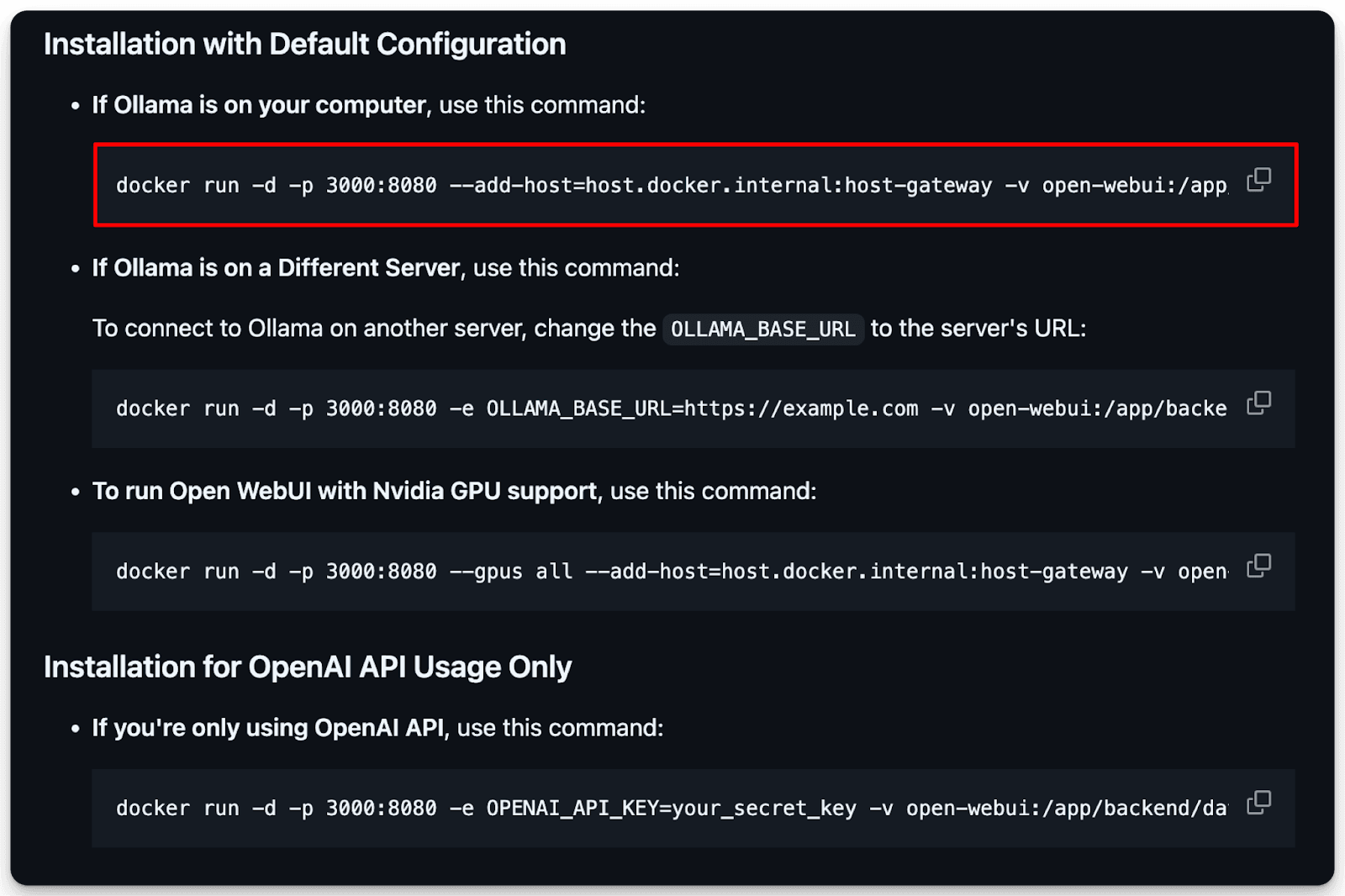

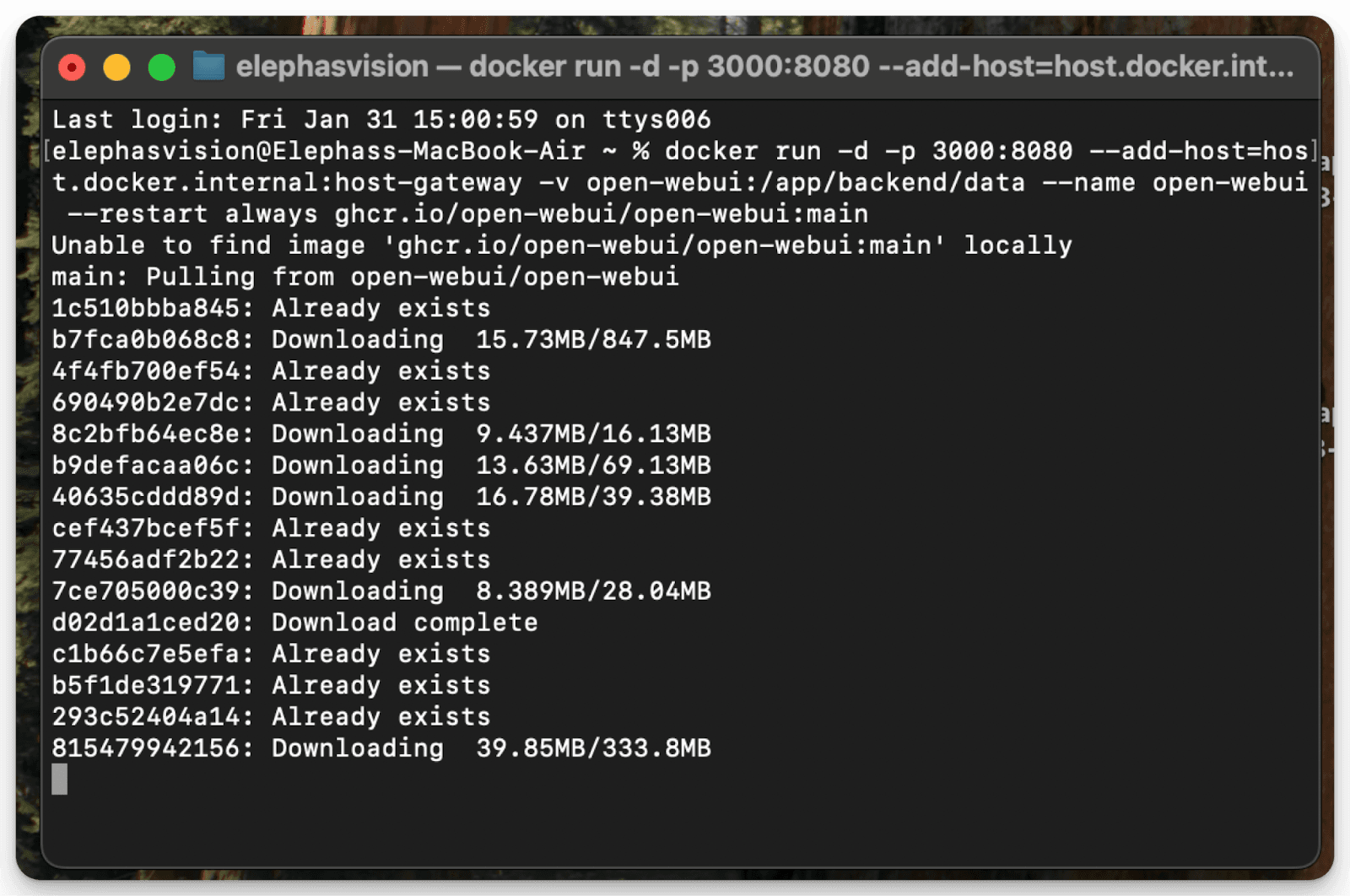

Now we have to set up Open WebUI. Go to the Open WebUI GitHub page, scroll down, and copy the installation command as shown in the figure.

Paste the command into your terminal and wait for the installation to finish.
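For reference, the installation command is a single docker run invocation. The sketch below rebuilds it as an argument list so each part is visible. The port, volume, and image values mirror the command in the Open WebUI README as we understand it at the time of writing, so treat them as assumptions and prefer whatever the GitHub page currently shows; the function name is hypothetical.

```python
import shlex


def open_webui_docker_command(host_port=3000, name="open-webui"):
    """Return the docker run arguments for starting Open WebUI.

    Assumption: these values match the Open WebUI README at the time of
    writing. Check the repository for the current command before using it.
    """
    return [
        "docker", "run", "-d",                           # run in the background
        "-p", f"{host_port}:8080",                       # browser port -> container port
        "--add-host=host.docker.internal:host-gateway",  # lets the container reach Ollama on the host
        "-v", "open-webui:/app/backend/data",            # keep chats and settings across restarts
        "--name", name,
        "--restart", "always",
        "ghcr.io/open-webui/open-webui:main",
    ]


# Print the command as a single line you could paste into the terminal.
print(shlex.join(open_webui_docker_command()))
```

If you would rather launch it from Python than paste it, pass the list to subprocess.run(..., check=True) once Docker is running.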

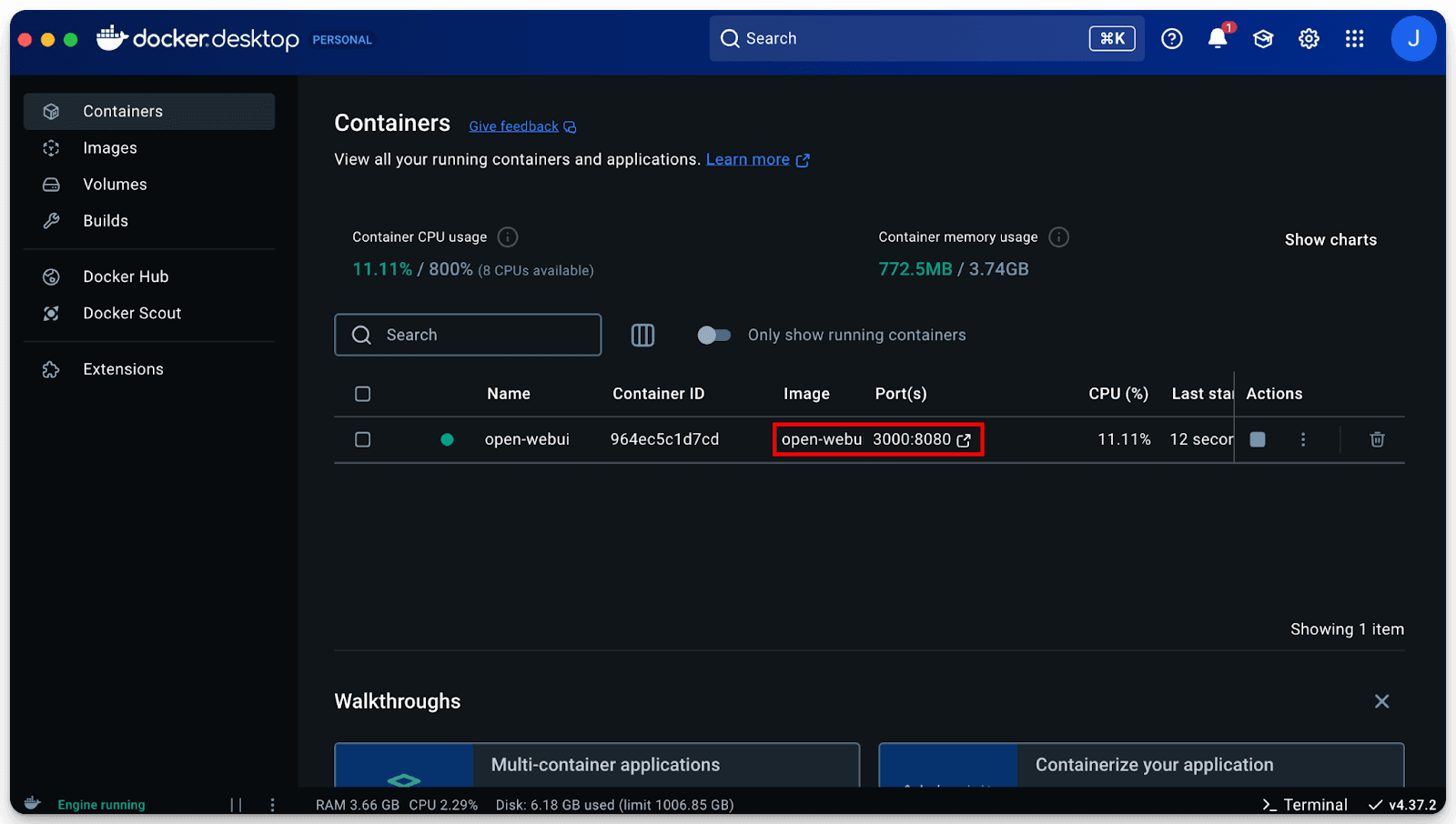

Once the download is complete, return to the Docker dashboard and check whether the Open WebUI container is running. If it is not, restart the container from the three-dot menu. Then click the container's link to open Open WebUI in your browser.

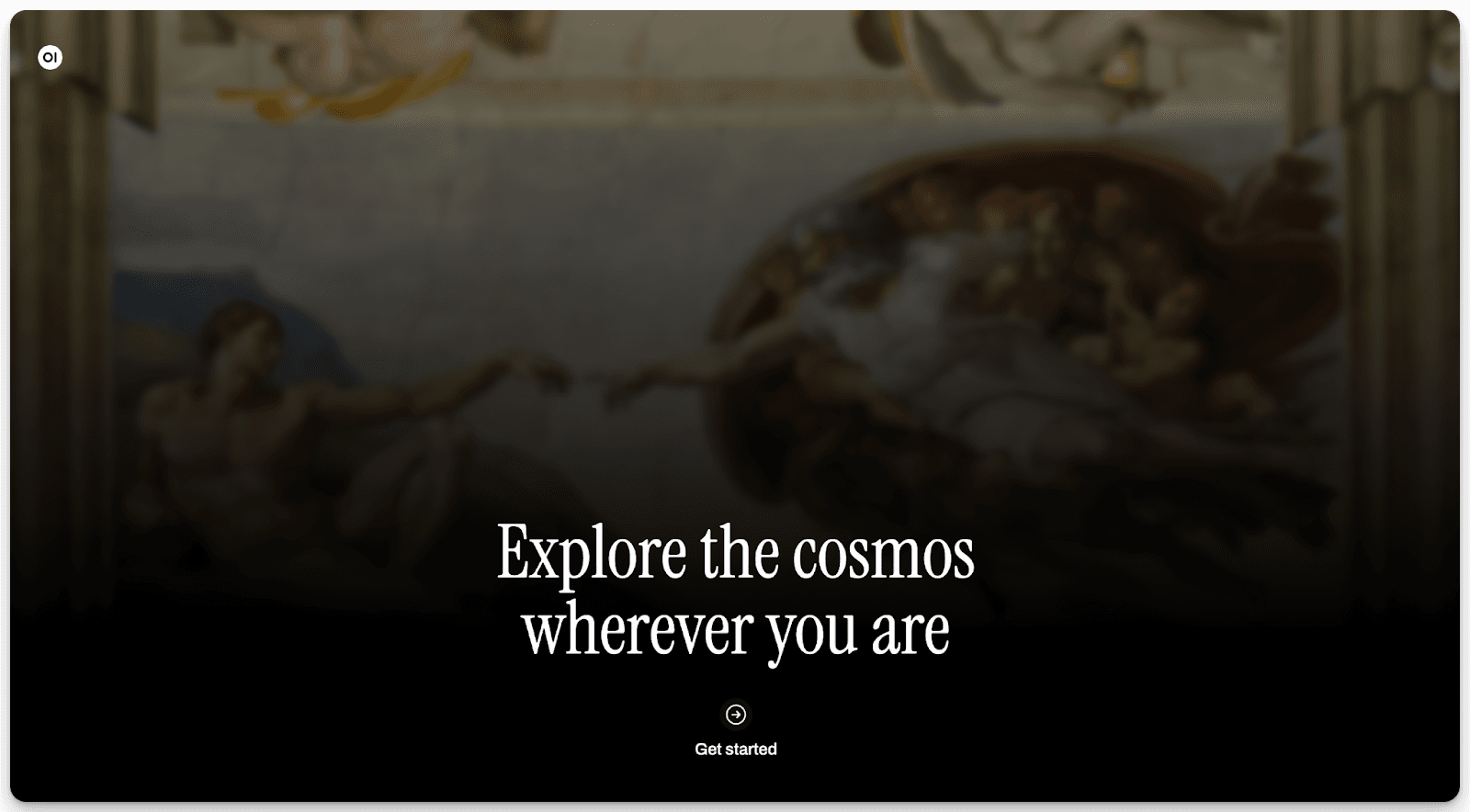

If you get an error page like "Page Not Found," wait a little while and try again. Once you get the following page, click "Get Started" and register in Open WebUI.
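The "wait and try again" step can also be scripted. This is a minimal polling sketch, assuming Open WebUI is published at http://localhost:3000 (the host port used in the README's docker command; adjust it if yours differs). The function names and the retry settings are illustrative choices.

```python
import time
import urllib.error
import urllib.request


def is_up(url):
    """Return True if the URL answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error page: not ready yet.
        return False


def wait_until_ready(url, attempts=30, delay=2.0, probe=is_up, sleep=time.sleep):
    """Poll until probe(url) succeeds.

    Returns the attempt number that succeeded, or None if every attempt failed.
    probe and sleep are parameters so the loop can be tried without a server.
    """
    for attempt in range(1, attempts + 1):
        if probe(url):
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None
```

Calling wait_until_ready("http://localhost:3000") returns once the page responds, at which point you can open it in your browser.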

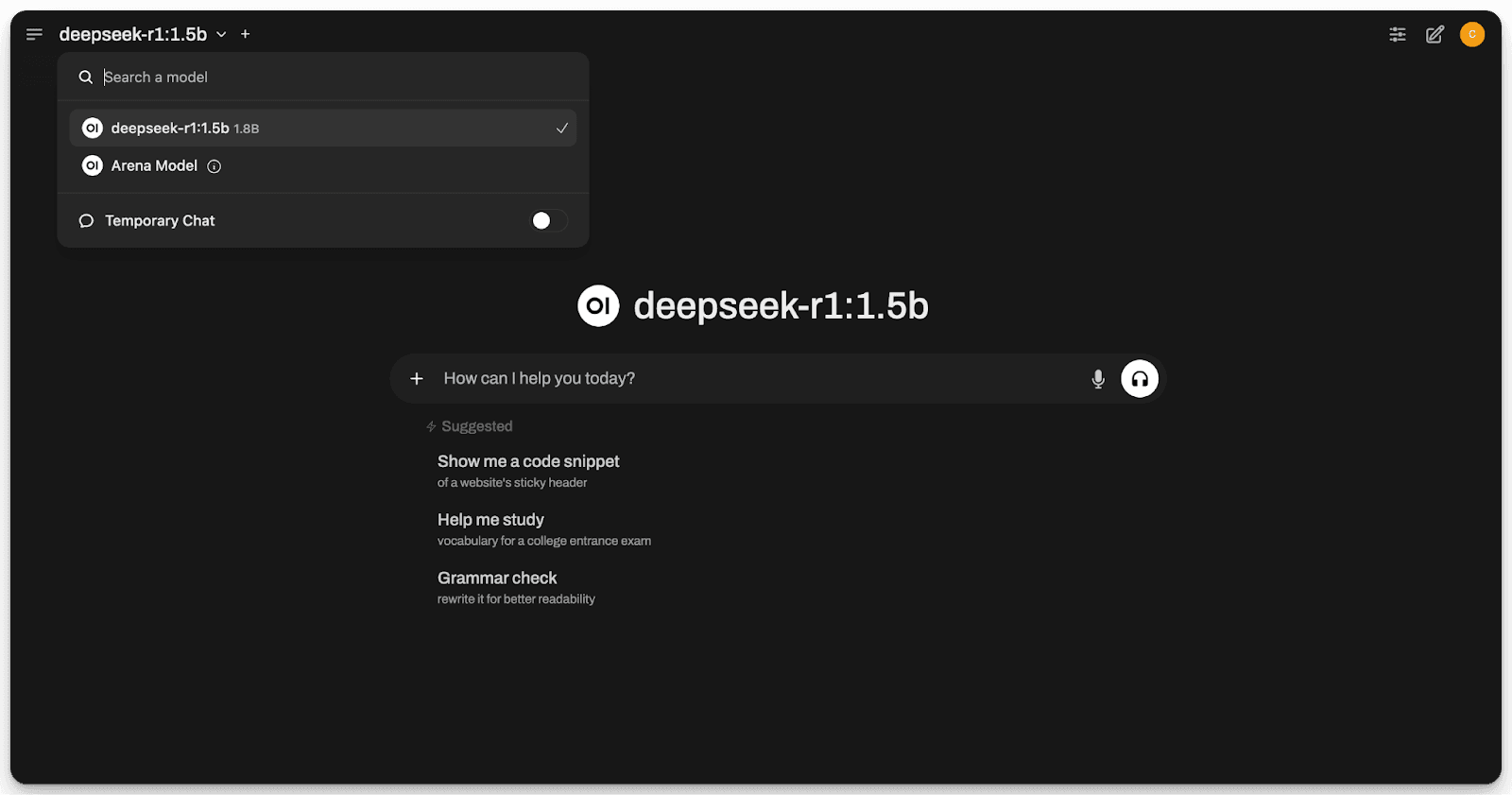

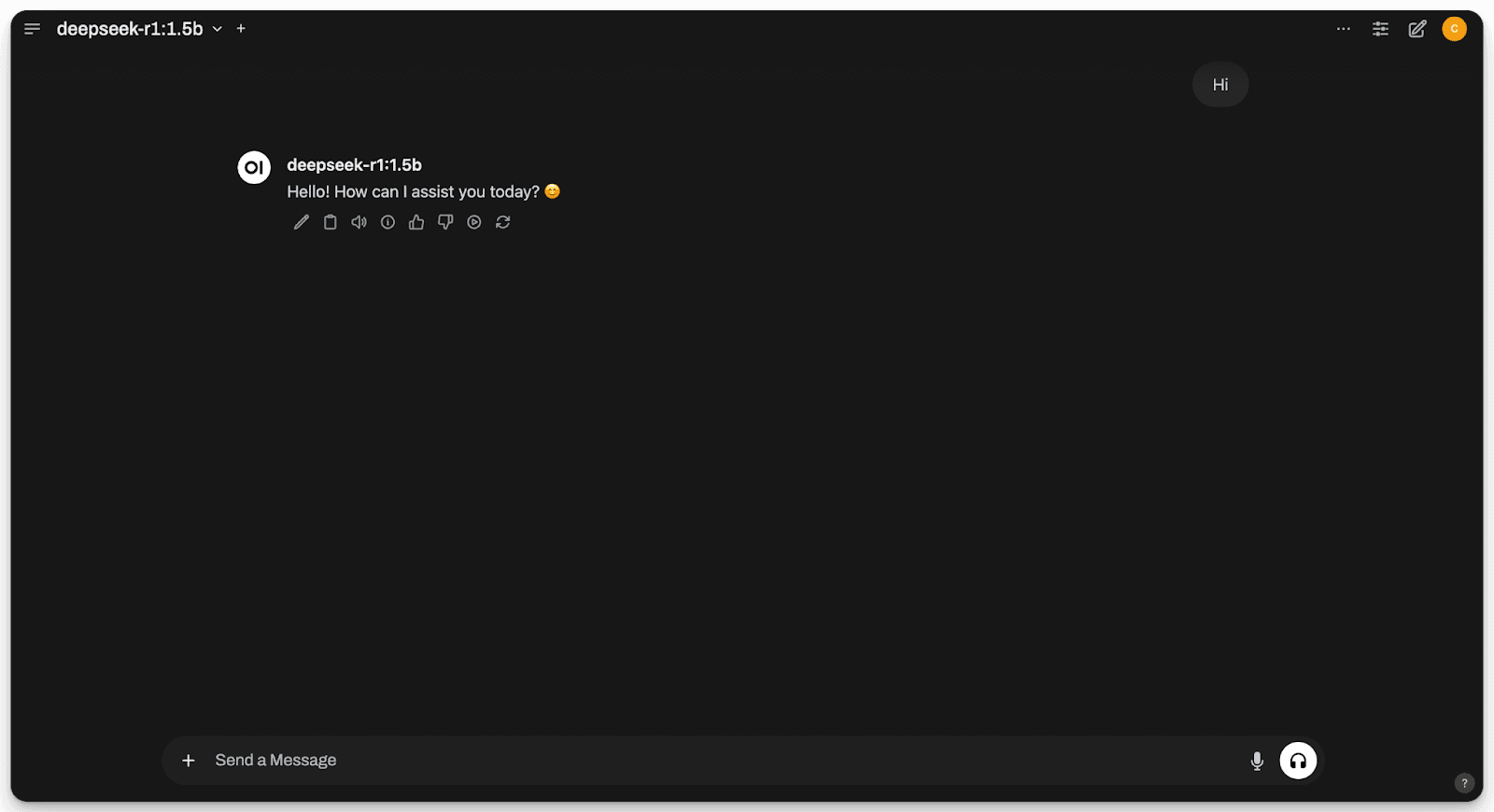

Once registration is complete, you will see the Open WebUI dashboard. From the model selector in the top corner, you can also switch to other LLMs if you download more models in the future.

That’s it; you are good to go. You can easily chat with the DeepSeek model and also upload any documents and ask questions about them.

Enhance Your DeepSeek Experience with Elephas

Want to get more out of DeepSeek? Elephas integrates with DeepSeek through its custom API model integration, giving you the best of both worlds, and the combination can run completely offline.

While DeepSeek offers powerful local AI capabilities, Elephas transforms it into a comprehensive knowledge assistant.

Key Features:

Smart Reply - Generate instant AI-powered responses for any communication

Document Intelligence - Process and analyze PDFs and documents with AI

Super Brain - Create your personalized AI knowledge base from all your content

Audio Processing - Convert audio to text with ease

Custom Snippets - Create reusable AI-powered templates for consistency

Integrations - Seamlessly connect with Notion, Obsidian, and other tools

Flexible AI Models - Choose and switch between your preferred LLMs anytime

Conclusion

DeepSeek offers a powerful, cost-effective alternative to paid AI models with its open-source LLMs. Users can access it through three main methods: local installation via Ollama, the web interface at deepseek.com, or Open WebUI integration.

While local installation ensures privacy and offline access, it demands technical expertise and computing power. The web version provides full functionality but faces traffic limitations. Open WebUI bridges this gap by offering a user-friendly interface with document upload features for local installations.

Additionally, tools like Elephas can further enhance DeepSeek's functionality through custom API integration, offering features like document intelligence and audio processing.

FAQs

1. Do I need a powerful computer to run DeepSeek locally?

Yes, DeepSeek's full model requires significant computing resources. However, you can opt for distilled versions of DeepSeek that are much smaller while keeping much of the capability, making them more suitable for personal computers with limited resources.

2. Is the web version of DeepSeek completely free to use?

Yes, DeepSeek's web version is entirely free and provides access to their 671B parameter model. However, due to high traffic, you may experience accessibility issues, and some features like web search have been temporarily disabled.

3. Can I use DeepSeek offline without an internet connection?

Yes, once you've installed DeepSeek locally through Ollama, you can use it completely offline. This ensures privacy and constant availability, though you'll miss out on real-time information updates and some advanced features available in the web version.

4. What makes Open WebUI integration useful for DeepSeek?

Open WebUI provides a ChatGPT-like interface for your local DeepSeek installation, adding features like file uploads and voice input. It transforms the terminal-based experience into a user-friendly web interface while maintaining the benefits of local deployment.

5. Can I switch between different versions of DeepSeek?

Yes, you can download and switch between different DeepSeek models in Open WebUI. Use the "ollama list" command to view installed models, then pick one in the chat interface itself. Sizes range from 1.5B to 70B parameters, so you can choose based on your needs.
