OpenAI's Sora 2 Review: Revolutionary AI Video With One Major Catch

OpenAI just made a big move in the AI video world. On September 30, 2025, it released Sora 2, and the launch is turning heads for the right reasons and a few wrong ones too.

This is not just another small update. The jump from the first Sora to this version is large. We are talking about an AI model that does a much better job of modeling how the physical world works, videos that come with matching sound and speech, and a feature that lets you put yourself into almost any scene you can imagine.

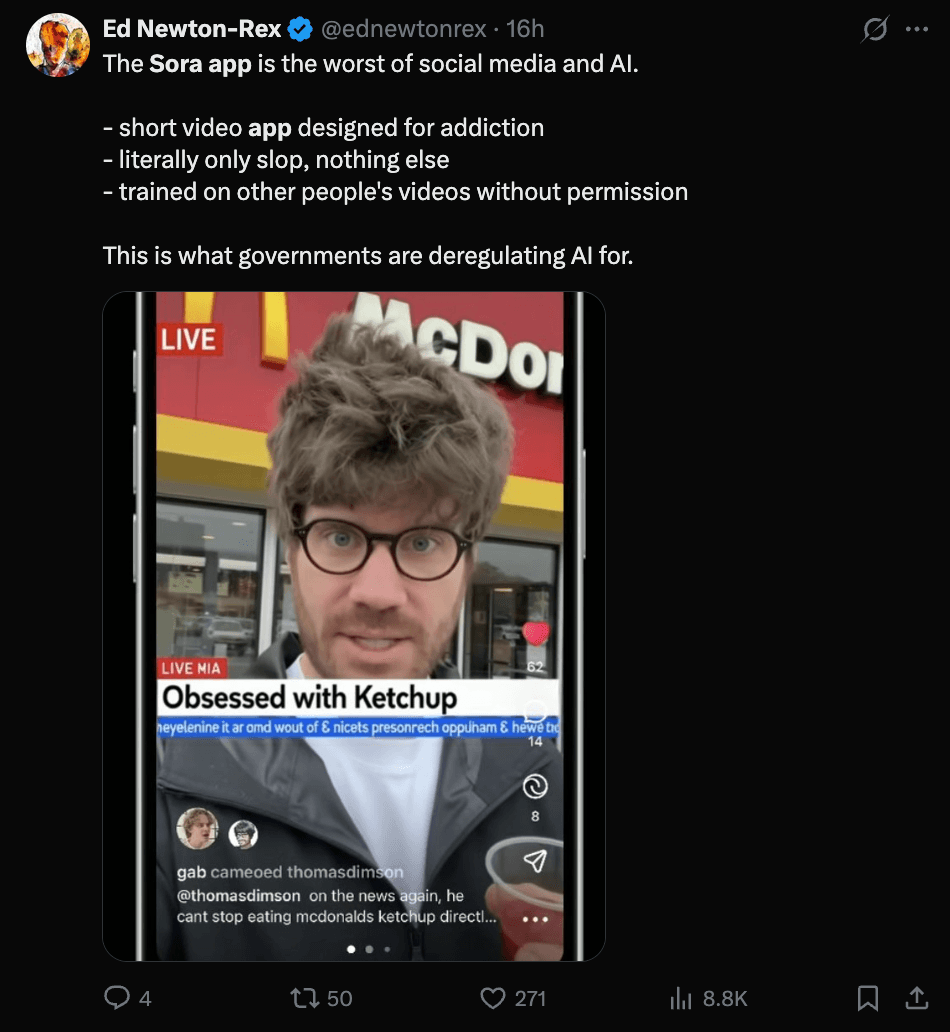

OpenAI also launched something unexpected alongside it: a full social app built around video creation. The move surprised many people, and reactions have been all over the place. Some creators are thrilled with what they can now do. Others are raising serious questions about safety, training data, and whether we really need another social platform.

Below, we break down what Sora 2 actually does, how it compares to the original Sora, what the new app offers, how its privacy controls work, and which limitations OpenAI itself admits remain.

Let's get into it.

Executive Summary

Sora 2 is OpenAI's video creation system released on September 30, 2025. The tool turns text into videos with matching audio, speech, and sound effects. It follows real-world physics better than earlier tools and can show realistic failures instead of forcing success.

The standout cameo feature lets users record themselves once and then appear in generated videos with an accurate likeness and voice. OpenAI also launched a social app for iOS called Sora, similar to TikTok but filled with AI-generated content. The app is designed to favor creating over scrolling and includes dedicated safety controls for younger users.

The service is free to start, with usage limits, and is rolling out first in the US and Canada through an invite system. ChatGPT Pro subscribers get access to Sora 2 Pro, an experimental higher-quality version.

User reactions are mixed. Some creators love how quickly they can now make videos. Others worry that the app copies existing social platforms and may be addictive. Safety concerns and questions about how the training data was sourced remain unresolved. The technology also still has clear limitations and makes mistakes regularly.

What is Sora 2 and Why It Matters

Sora 2, released on September 30, 2025, is OpenAI's newest video generation tool. It turns a written prompt into a complete video with matching sound and voices: you describe what you want to see, and the model generates it.

The technology stands out because of several key improvements:

- Creates videos that follow real-world physics more accurately than older tools

- Generates matching audio, including speech and background sounds

- Follows detailed instructions across multiple video scenes

- Keeps objects and settings consistent throughout the video

- Works with different visual styles from realistic to animated looks

This release matters because it brings video creation technology much closer to understanding how the real world works. Earlier video tools would bend reality to match what you asked for. Sora 2 tries to follow actual physical rules instead. When something fails in the video, it fails realistically rather than magically succeeding.

The Big Jump from Old Sora to Sora 2

The original Sora was unveiled in February 2024. OpenAI describes it as the point where text-to-video generation first started to work in a meaningful way, with basic behaviors such as object permanence (objects persisting consistently throughout a clip) beginning to emerge. The Sora team called it the GPT-1 moment for video: only the beginning of something bigger.

Sora 2 is a major upgrade in capabilities. The team says this new version feels like the GPT-3.5 moment for video, showing how far the technology has advanced:

- Handles complex movements like gymnastics routines and athletic tricks

- Creates videos where physics work correctly instead of bending reality

- Follows longer and more detailed instructions

- Produces multiple connected scenes while keeping everything consistent

- Generates realistic audio that matches the video action

The main difference shows up in how the system handles physical reality. Old video tools would cheat to complete your request. If you asked for a basketball shot, the ball might teleport into the hoop even when the player missed. Sora 2 shows the ball bouncing off the backboard instead, just like it would in real life.

This update also brought better control over the final output. You can now give detailed instructions covering multiple shots, and the system will follow them while keeping the world looking consistent from start to finish.

Understanding the Physical World Better

Sora 2 respects the laws of physics more consistently than earlier video tools. It does a better job of modeling how objects move, bounce, and interact with each other, which makes the generated videos look and feel much more believable.

Previous video models had a major flaw. They would change reality to match your text request no matter what. Objects could morph into different shapes, teleport across the screen, or ignore gravity completely. The tools were too focused on making your prompt succeed at any cost.

Sora 2 takes a different approach:

- Maintains proper weight and movement for objects throughout the video

- Shows correct water physics, like how things float or sink

- Keeps rigid objects solid instead of bending them unnaturally

- Models accurate interactions between different objects and surfaces

One breakthrough feature is the ability to show failure. If someone attempts a difficult action and misses, the video shows that mistake happen naturally. The system does not force success by breaking physics rules. This capability is crucial for any tool that wants to simulate the real world accurately.

The model still makes errors, but its mistakes often look like the actions of someone trying and failing rather than reality breaking apart.

What Kind of Videos Can You Actually Make?

Sora 2 gives users much more precise control over what they create. The system can understand and follow complex instructions that cover multiple parts of a video. You can describe exactly what you want to happen, and the tool will work to match your vision.

The model handles detailed prompts that earlier versions could not follow reliably. You can specify camera angles, lighting conditions, time periods, and action sequences in a single request, and the system tries to keep track of everything you mention as it builds the video.

Key control features include:

- Processing instructions that span several connected scenes

- Maintaining consistent characters, objects, and settings across all shots

- Adjusting lighting and atmosphere based on your description

- Following timing instructions for how long each part should last

- Keeping the story or action flowing smoothly between different shots

Sora 2 also works with different visual styles. You can create realistic footage that looks like it was filmed with a camera. The system handles cinematic styles with dramatic lighting and composition. It can also produce animated content in various animation styles. Each style maintains the same level of quality and physical accuracy throughout your video.

Now Your Videos Have Sound Too

Sora 2 does not just create visuals. The system generates complete audio to match everything happening on screen. This includes background sounds, speech, and sound effects that fit the scene naturally.

The audio generation works as a general-purpose system. It can create complex soundscapes that match different environments. If your video shows a busy street, you get traffic sounds. If it shows a quiet forest, you hear birds and wind through trees. The system picks appropriate sounds based on what appears in the video.

Audio features in Sora 2:

- Creates realistic background sounds that match the environment

- Generates human speech with proper timing and emotion

- Adds sound effects for actions like footsteps, doors closing, or objects moving

- Syncs audio closely with what happens on screen

- Adjusts sound quality to fit the video style you chose

Sound makes a huge difference in how complete a video feels. Videos without audio seem empty and unfinished. When the sound matches the action properly, the whole experience becomes much more believable. You notice the connection between what you see and what you hear, making the generated content feel closer to real footage.

The Coolest Part: Putting Yourself in Any Video

The cameo feature lets you insert yourself into any video that Sora creates. You record a short video of yourself once, and then the system can place you in any scene you generate. Your appearance and voice both get captured accurately.

Behind the scenes, that one-time recording teaches the system what you look like and how you sound. From then on, it can drop you into any environment Sora generates, aiming to match the lighting and setting of the scene so the placement looks natural.

What makes cameos powerful:

- Record yourself just once through a quick video and audio capture

- Reuse your likeness in any Sora video after that

- The system captures your appearance, movements, and voice accurately

- Works for placing friends, family members, or pets in videos too

- Each person controls who can use their recorded cameo

You can bring other people into your videos too. The feature works the same way for anyone who records a cameo, and it is designed to handle animals and objects as well as people. This opens up new ways to create content in which you and your friends appear in completely imagined settings and situations.

There's a New App for This

OpenAI also released a new mobile app, simply called Sora, that goes beyond a basic video creation tool. The company designed it as a social platform where people connect and create together rather than make videos alone. It resembles TikTok, with the key difference that every video is AI-generated and you can create a short clip from nothing more than a prompt.

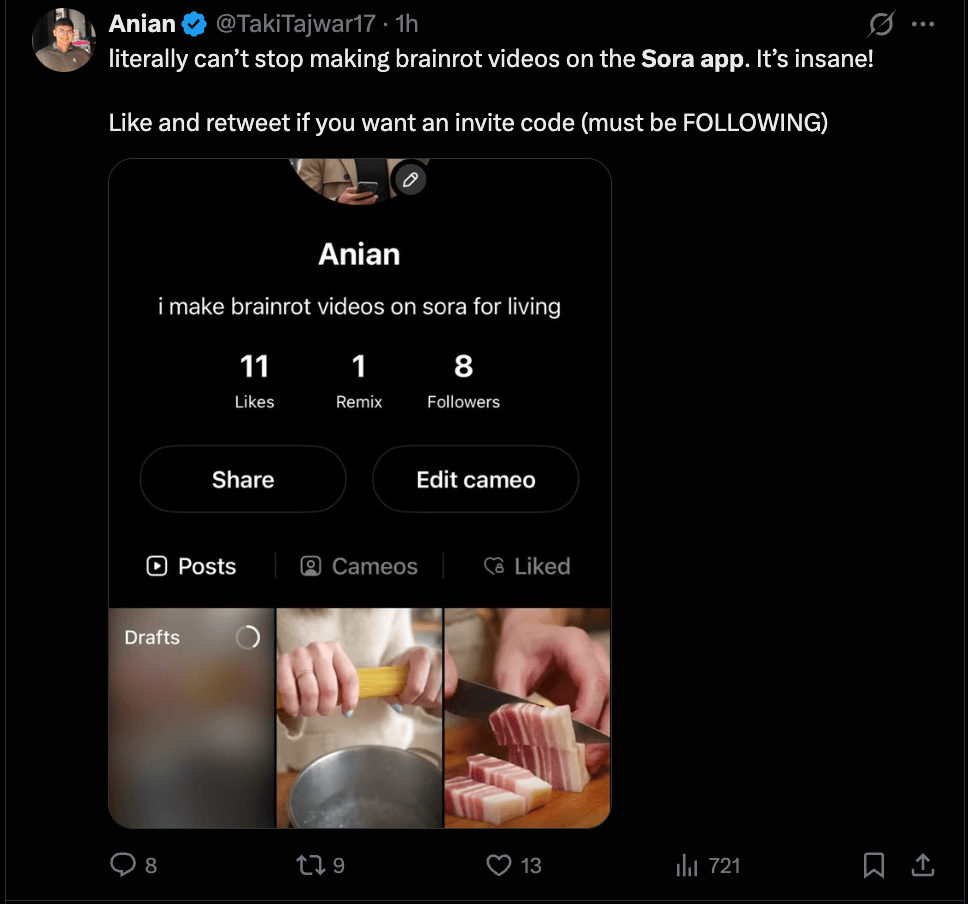

The app turns video creation into a shared experience. You can browse videos other users made, remix their creations, and add your own spin. The cameo feature lets you drop yourself or friends into any scene. OpenAI says it built the app because it sees this as a new way for people to communicate with each other.

What the Sora app offers:

- Create videos directly from your phone

- Browse a feed of videos made by other users

- Remix and build on content others created

- Use cameos to appear in any video scene

- Follow people and see content from those you interact with

- Share your creations with friends and the community

OpenAI says its goal is a healthier social platform, one focused on making things rather than endless scrolling. The feed shows you content from people you follow and videos that might inspire your own creations. According to the company, it is not trying to keep you watching for hours; it wants you creating and connecting with others instead, building real community rather than passive viewing habits.

What About Safety and Privacy?

OpenAI built multiple safety systems into the Sora app. These protections cover how your personal likeness gets used, what content appears in feeds, and how younger users interact with the platform.

When you record yourself for the cameo feature, you have full control over your likeness. Only you decide who can use your cameo in their videos, and you can revoke anyone's access at any time. Every video that includes your cameo is visible to you automatically, including drafts that other people have created but not yet shared. If someone makes a video with your cameo that you do not like, you can remove it.
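To make these cameo permission rules concrete, here is a minimal sketch in Python. It is a hypothetical model, not OpenAI's implementation: the names `CameoRegistry`, `grant`, `revoke`, `create_video`, `visible_to_owner`, and `remove` are invented for illustration. It captures only the behavior described in the paragraph: the owner decides who may use their cameo, can revoke access at any time, sees every video featuring them (drafts included), and can remove any of those videos.

```python
from dataclasses import dataclass, field


@dataclass
class Video:
    video_id: int
    creator: str
    cameo_owners: frozenset[str]  # people whose cameos appear in the video
    published: bool = False       # drafts stay unpublished until shared
    removed: bool = False


@dataclass
class CameoRegistry:
    """Hypothetical model of cameo permissions; not OpenAI's actual system."""

    # owner -> users the owner has allowed to use their cameo
    permissions: dict[str, set[str]] = field(default_factory=dict)
    videos: list[Video] = field(default_factory=list)

    def grant(self, owner: str, user: str) -> None:
        self.permissions.setdefault(owner, set()).add(user)

    def revoke(self, owner: str, user: str) -> None:
        # The owner can withdraw access at any time.
        self.permissions.get(owner, set()).discard(user)

    def can_use(self, owner: str, user: str) -> bool:
        # Owners can always use their own cameo; others need a grant.
        return user == owner or user in self.permissions.get(owner, set())

    def create_video(self, creator: str, cameo_owners: set[str]) -> Video:
        # Every cameo in the video must be permitted for this creator.
        for owner in cameo_owners:
            if not self.can_use(owner, creator):
                raise PermissionError(f"{creator} may not use {owner}'s cameo")
        video = Video(len(self.videos) + 1, creator, frozenset(cameo_owners))
        self.videos.append(video)
        return video

    def visible_to_owner(self, owner: str) -> list[Video]:
        # Owners see every video featuring them, including unshared drafts.
        return [v for v in self.videos if owner in v.cameo_owners and not v.removed]

    def remove(self, owner: str, video: Video) -> None:
        # A cameo owner can take down any video that features them.
        if owner not in video.cameo_owners:
            raise PermissionError(f"{owner} does not appear in video {video.video_id}")
        video.removed = True
```

For example, after `grant("alice", "bob")`, Bob can create a draft featuring Alice, and that draft shows up in `visible_to_owner("alice")` before it is ever shared. Once Alice calls `revoke("alice", "bob")`, any new `create_video` attempt by Bob that uses her cameo raises `PermissionError`.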

Protection measures for different users:

- Teens see a limited number of videos per day in the feed by default, to prevent excessive use

- Stricter cameo permissions apply for younger users

- Parents can control settings through ChatGPT parental controls

- Parents can turn off personalized recommendations for their children

- Parents can manage direct message settings and disable infinite scrolling

- Human moderators review reported cases of bullying quickly

- Automated systems scan for harmful content before it goes live

The feed works differently from typical social media platforms. OpenAI uses its language models to build a recommendation system you can steer with plain-language instructions. The app also checks in with you periodically about your wellbeing and offers options to adjust what you see. OpenAI says it is not optimizing for the amount of time you spend scrolling.

The app launched as invite-only to encourage people to join with friends rather than strangers, with the aim of building actual communities instead of isolated browsing. OpenAI states it has no current plans to sell advertising. Its only announced monetization idea is letting users pay for extra video generations when demand exceeds available computing power.

Can You Use It Right Now?

The Sora app has been available for download on iOS since September 30, 2025. However, downloading it does not give you immediate access: OpenAI is rolling out access gradually through an invite system.

The app launched first in the United States and Canada. OpenAI plans to expand to other countries but has not announced specific dates or locations. After downloading the app, you can sign up inside it to be notified when your account receives access.

How to get started:

- Download the Sora app from the iOS App Store

- Sign up inside the app for access notification

- Wait for your invite to arrive as a push notification

- Once invited, you can also use Sora through the website at sora.com

- The invite system is meant to bring you in alongside friends rather than alone

Sora 2 starts as a free service. OpenAI set generous usage limits so people can explore what the system can do. These limits depend on how much computing power is available at any time. If too many people want to create videos at once, you might need to wait.

ChatGPT Pro subscribers get additional benefits, including access to Sora 2 Pro, an experimental higher-quality version. It works on the website now and is coming to the app soon. OpenAI also plans to make Sora 2 available to developers through its API. The older Sora 1 Turbo remains available, and all your previous creations stay in your library.

User Reactions to Sora 2 Release

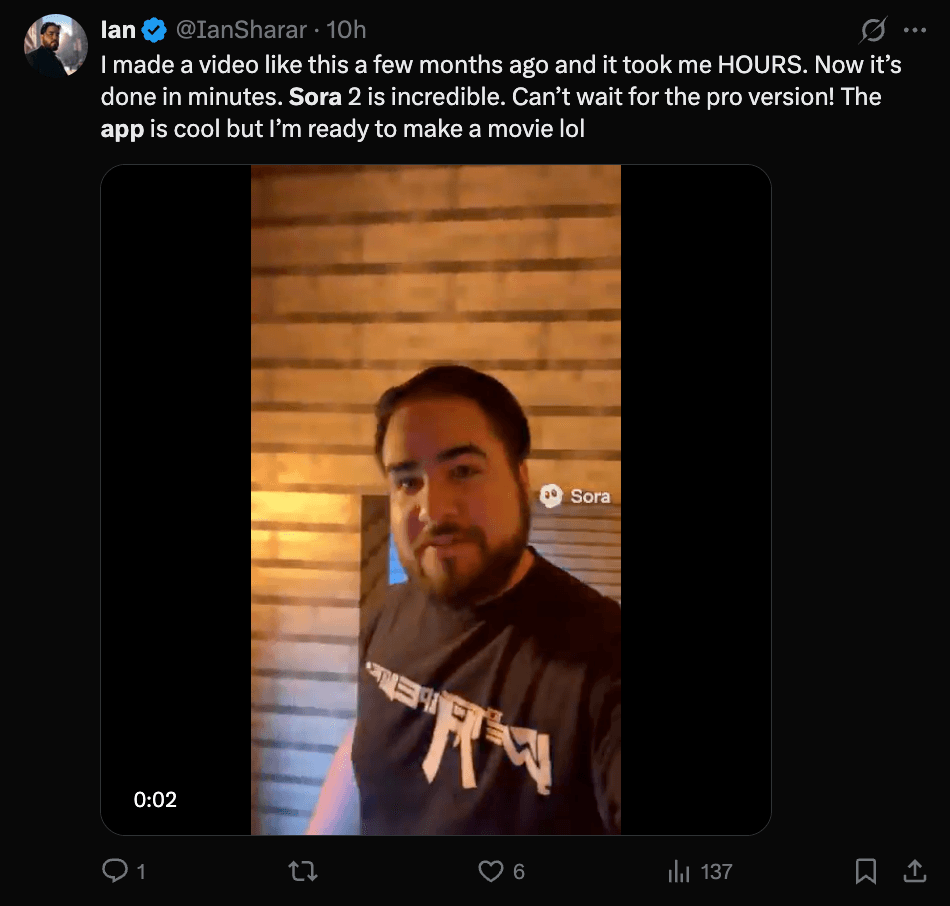

Reactions to Sora 2 are sharply divided. Some users love it and can't stop creating videos. They say work that used to take hours now takes minutes. Many creators are excited about how easily they can bring their ideas to life and are already planning bigger projects with the tool.

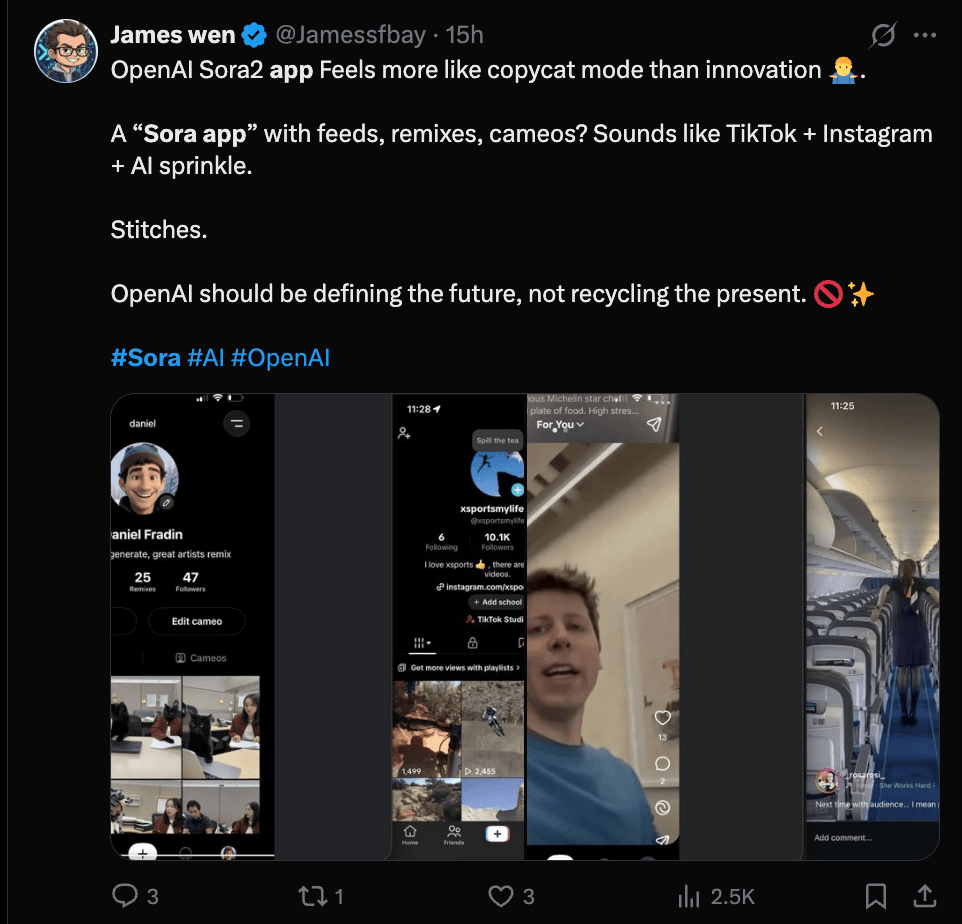

However, not everyone is happy. Some people think the app looks like TikTok and Instagram mixed together and feel OpenAI is copying what already exists instead of building something truly new. Critics argue that an engagement-style social feed is not what an AI company should be building.

The biggest concerns are about safety and content quality. Some users worry the app is built to be addictive like other social platforms. Others are upset about how the AI was trained, claiming it used videos from creators without asking permission first. The mixed reactions show people are both excited and worried about where this technology is heading.

What's the Catch?

Sora 2 is not perfect. OpenAI states clearly that the model makes plenty of mistakes and has significant limitations. The technology represents progress but still falls short in many areas.

The system struggles with consistent accuracy. While it understands physics better than older tools, it still breaks physical rules sometimes. Objects may not move exactly right, or interactions between things might look off. The improvements are real, but the technology has not mastered realistic simulation yet.

Current limitations include:

- Videos still contain visible errors and unrealistic moments

- Physics accuracy improved but remains imperfect

- The system cannot handle all types of movements or actions correctly

- Complex scenes with many moving parts cause more mistakes

- Generated content may have odd details or inconsistencies

- Computing power limits how many videos can be made at once

The technology is still developing. Video generation is at an earlier stage than text-based AI, and the training methods for video are newer and less refined. Users should expect continued rough edges, along with improvements over time, rather than a finished product.

OpenAI designed the gradual rollout partly because of these limitations. They want to see how the system performs with real users and fix problems as they appear. The invite system also helps manage computing resources since the technology requires significant power to run.
