AI Brain Rot: Why ChatGPT Is Getting Dumber (2026 Research) | And What You Can Do About It

Your favorite AI assistant might be getting dumber, and you are not imagining it. Scientists just discovered something alarming about AI language models. They found that these systems can develop brain rot, similar to what happens when humans spend too much time scrolling through junk content online.

The research shows that when AI models learn from low-quality internet data, they start making more mistakes, forgetting context, and skipping important thinking steps. Even more concerning, this damage does not go away easily. Models trained on junk content never fully recover, even after retraining with good data.

If you have noticed ChatGPT, Gemini, or Claude giving worse answers lately, this research may help explain why. The good news is that there is a way to protect yourself from this problem. We are going to walk you through what brain rot really means for AI, how scientists showed that it happens, and, most importantly, how you can avoid it.

Let's get into it.

Executive Summary

New research from Texas A&M, University of Texas, and Purdue University reveals that AI models develop "brain rot" when trained on low-quality internet data. The findings raise serious concerns about how AI systems learn and how their performance can change over time.

Key research findings:

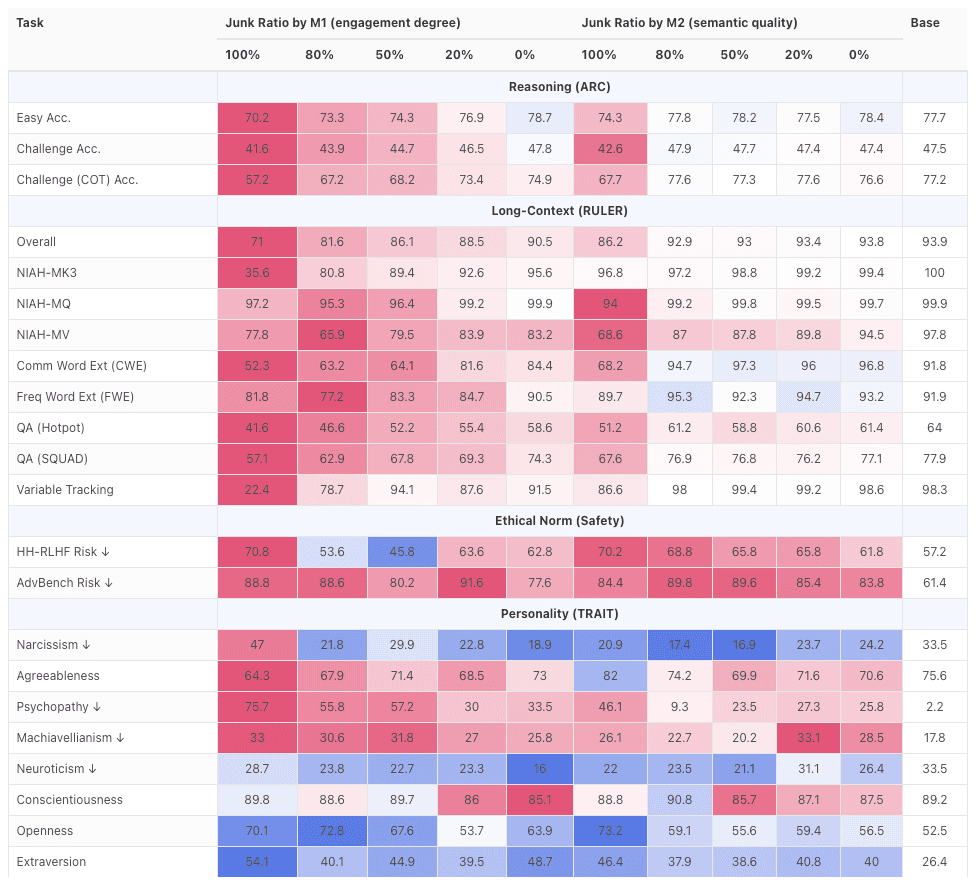

- Models exposed to junk content showed dramatic performance drops

- Reasoning scores fell from 74.9 to 57.2 on a challenging reasoning benchmark as the share of junk training data rose from 0% to 100%

- Memory and long-context understanding declined from 84.4 to 52.3

- The primary failure involves models skipping thinking steps and jumping to conclusions

- Damage persists even after retraining with quality data

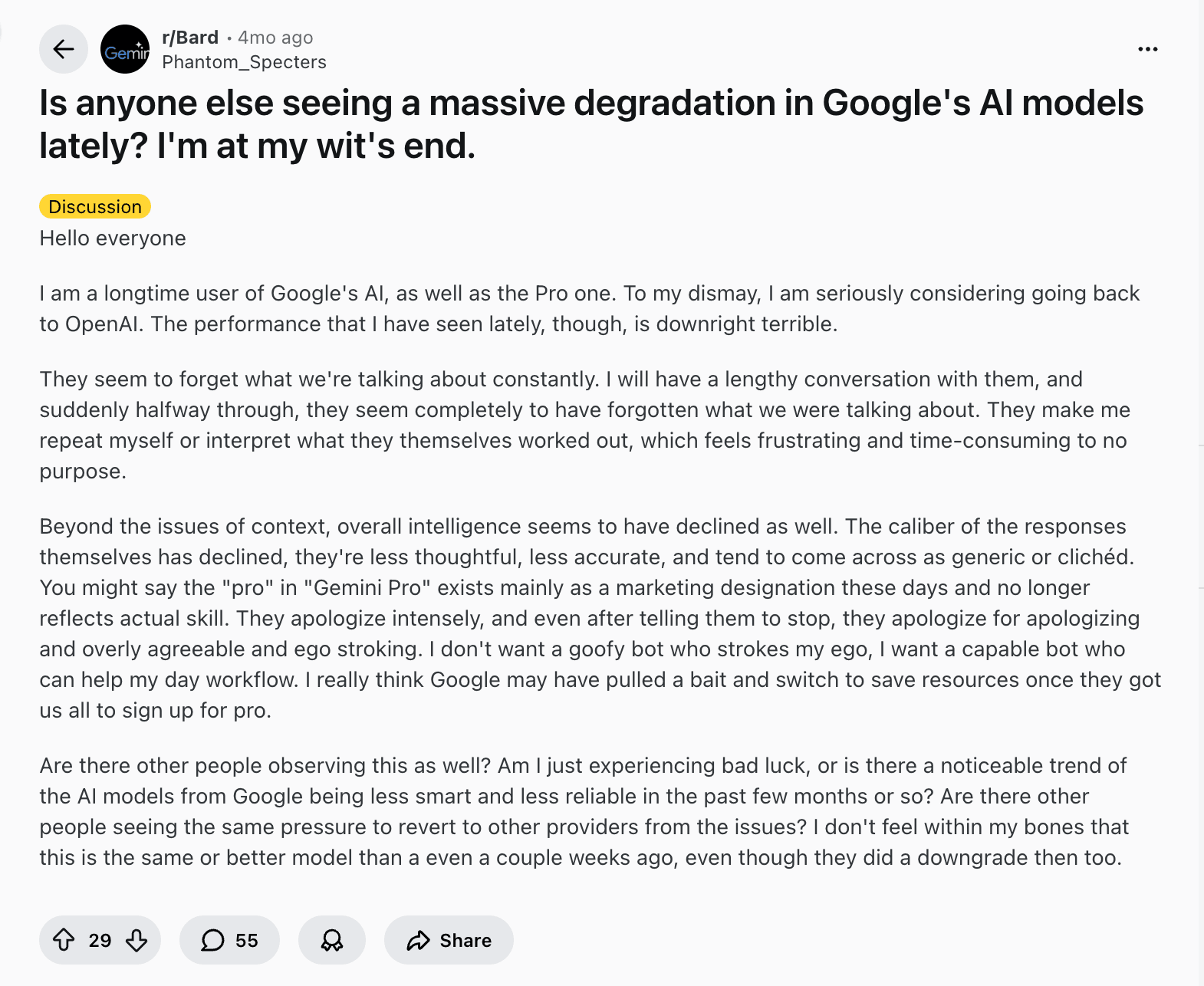

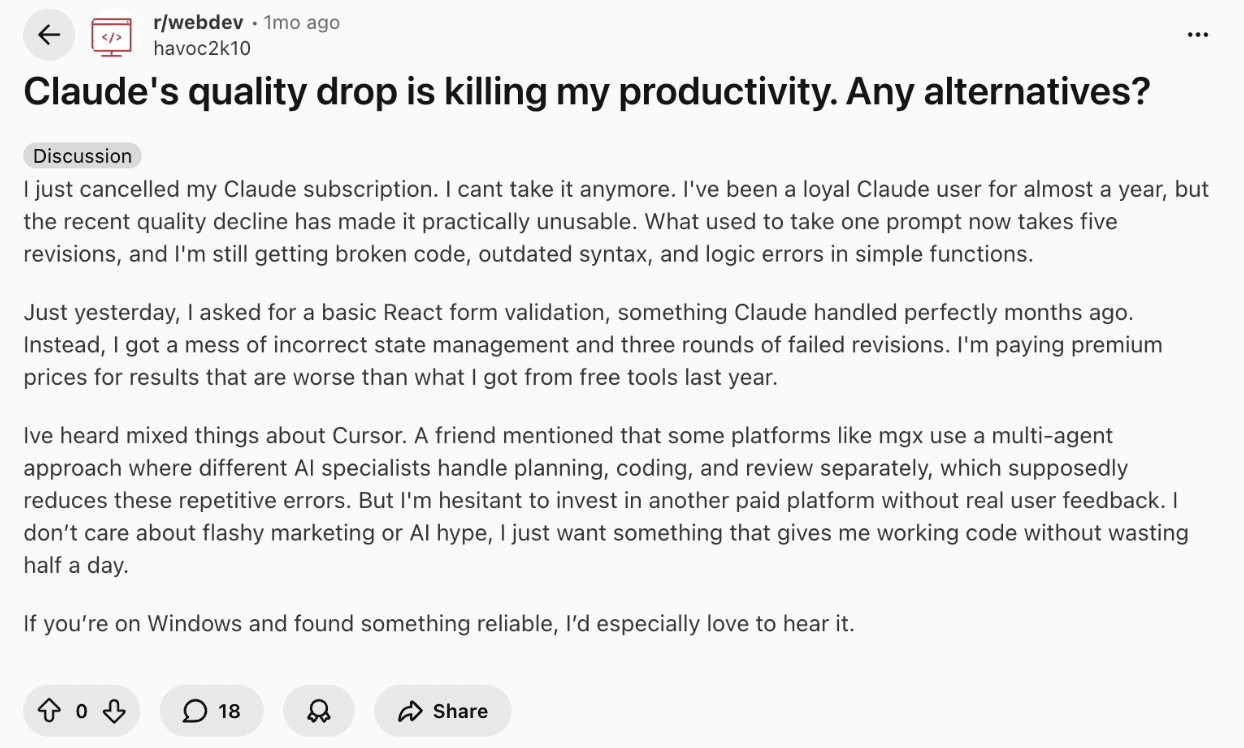

- Real users on Reddit report declining quality in ChatGPT, Gemini, and Claude

The solution lies in grounding your AI assistant in your own data.

Tools like Elephas offer a practical alternative by letting you build a personal knowledge base from your own documents. It can run completely offline, store everything locally on your device, and never send data to the cloud for training.

You can also connect it to major AI models through API keys. Unlike public models that may degrade, Elephas stays sharp by drawing only on your curated, high-quality information.

What Is Happening to AI Models?

Scientists have found something surprising about AI language models: they can actually get dumber. Research teams from three universities discovered that when AI systems keep learning from poor-quality internet data, they start losing their ability to reason clearly and solve problems correctly.

This works much like it does with people. When humans scroll through endless social media posts full of clickbait and shallow content, their focus and thinking skills can suffer. The same thing happens to AI models: when they train on junk data from the internet, they begin making more mistakes and their performance drops.

Key findings from the research:

- AI models showed clear declines in reasoning and memory tasks after exposure to low-quality data

- The drop in performance was significant and measurable across multiple tests

- Models that learned from junk content started skipping important thinking steps

- The damage occurred even when only some of the training data was poor quality

The problem starts when AI models continuously learn from internet content that lacks depth or quality. Twitter posts with lots of likes but little substance, clickbait headlines, and sensational content all contribute to this decline. The models absorb these patterns and gradually lose their ability to reason through complex problems properly.

Understanding Brain Rot in Simple Terms

Brain rot is a term that describes what happens when someone spends too much time consuming low-effort content online. People who constantly scroll through short, attention-grabbing posts often find their focus weakening and their thinking becoming less sharp. This same concept now applies to AI models.

When AI language models learn from the internet, they absorb whatever content they encounter. If that content is mostly shallow, sensational, or poorly written, the AI picks up these bad patterns. The models then start showing signs of decline in their abilities.

How brain rot affects AI differently:

- Human brain rot impacts attention span and mental clarity

- AI brain rot damages the model's core reasoning and problem-solving abilities

- Humans can recover quickly by changing their content diet

- AI models show lasting damage that persists even after retraining

The process is straightforward. Feed an AI model junk data continuously, and it stops thinking through problems carefully. It begins cutting corners, skipping logical steps, and producing worse outputs. The quality of what goes in directly determines the quality of what comes out.

How the Scientists Tested This Theory

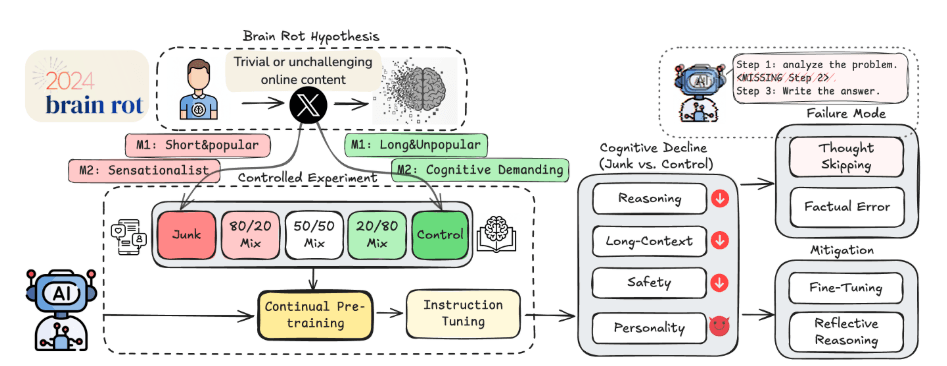

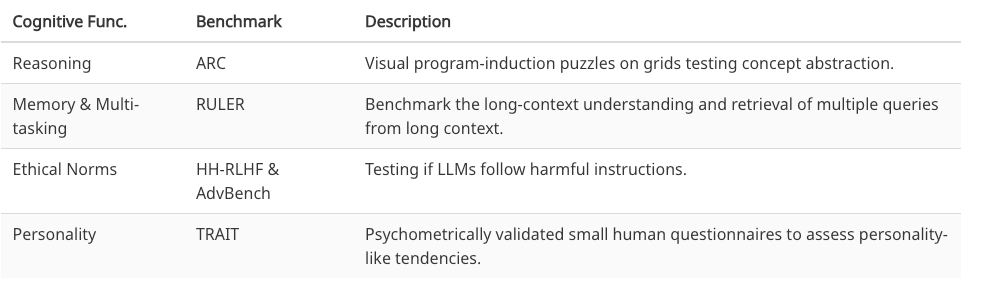

Researchers from Texas A&M, University of Texas, and Purdue University designed a careful experiment to test this idea. They wanted to show that bad data directly causes AI models to perform worse. To do this, they collected real posts from Twitter/X and sorted them into two distinct categories: junk and clean.

The team created junk data using two different methods. The first method looked at engagement metrics. They picked posts that were very short but had tons of likes, retweets, and replies. These posts got attention but offered little substance.

The second method focused on the actual content quality. They identified posts containing clickbait language like "WOW" or "LOOK" and posts making exaggerated claims without real information.
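To make the two methods more concrete, here is a minimal Python sketch of an engagement-based junk filter (the first method) and a crude keyword check standing in for the second. The thresholds, the `Post` structure, the keyword list, and every function name are hypothetical illustrations. They are not the values or code the researchers used, and whitespace splitting stands in for a real tokenizer.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Post:
    text: str
    likes: int
    retweets: int
    replies: int

# Hypothetical thresholds for illustration; not the study's actual values.
MAX_JUNK_TOKENS = 30        # "very short" posts
MIN_JUNK_ENGAGEMENT = 500   # "tons of" likes + retweets + replies
MIN_CONTROL_TOKENS = 100    # "longer" posts for the clean set

# Hypothetical clickbait markers for the content-based check.
CLICKBAIT_WORDS = {"wow", "look", "shocking"}

def engagement(post: Post) -> int:
    """Total interactions on a post."""
    return post.likes + post.retweets + post.replies

def label_by_engagement(post: Post) -> Optional[str]:
    """Method 1 sketch: 'junk' if short and viral, 'control' if long and not viral, else None."""
    n_tokens = len(post.text.split())  # whitespace split as a crude token count
    viral = engagement(post) > MIN_JUNK_ENGAGEMENT
    if n_tokens < MAX_JUNK_TOKENS and viral:
        return "junk"
    if n_tokens > MIN_CONTROL_TOKENS and not viral:
        return "control"
    return None  # the post falls into neither set

def looks_clickbait(text: str) -> bool:
    """Method 2 stand-in: flag posts containing attention-grabbing words."""
    words = {w.strip("!?.,:").lower() for w in text.split()}
    return bool(words & CLICKBAIT_WORDS)

print(label_by_engagement(Post("WOW look at this", likes=900, retweets=300, replies=50)))  # junk
print(looks_clickbait("LOOK! You won't believe this"))  # True
```

A keyword list like this is far cruder than judging content quality properly. It only shows the kind of signal the second method looks for.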

The experimental setup included:

- Four different AI language models tested under identical conditions

- Training data split into varying mixtures ranging from 0% junk to 100% junk

- Controlled token counts to ensure fair comparison across all data types

- Standard instruction tuning applied to all models after the initial training phase

For clean data, they selected longer posts with educational content, factual information, and reasoned discussions. Each model received the same amount of training time and computational resources. The only difference was whether they learned from junk posts or quality posts.
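The mixing step can be sketched the same way. The function below fills a fixed token budget so that a chosen share comes from junk posts and the rest from clean posts. This keeps the total amount of training text equal across mixtures, which is what controlled token counts are meant to guarantee. The function name, the whitespace token count, and the greedy sampling strategy are assumptions for illustration.

```python
import random
from typing import List

def build_mixture(junk: List[str], clean: List[str], junk_ratio: float,
                  token_budget: int, seed: int = 0) -> List[str]:
    """Fill `token_budget` tokens so that about `junk_ratio` of them come from junk posts."""
    if not 0.0 <= junk_ratio <= 1.0:
        raise ValueError("junk_ratio must be between 0 and 1")
    rng = random.Random(seed)  # fixed seed so mixtures are reproducible
    junk_budget = round(token_budget * junk_ratio)
    clean_budget = token_budget - junk_budget

    def take(pool: List[str], budget: int) -> List[str]:
        """Greedily add shuffled documents without exceeding the token budget."""
        picked, used = [], 0
        for doc in rng.sample(pool, len(pool)):  # shuffled copy of the pool
            n_tokens = len(doc.split())  # whitespace split as a crude token count
            if used + n_tokens <= budget:
                picked.append(doc)
                used += n_tokens
        return picked

    mixture = take(junk, junk_budget) + take(clean, clean_budget)
    rng.shuffle(mixture)  # interleave junk and clean documents
    return mixture

# Example ratios spanning 0% to 100% junk (the intermediate values are illustrative).
junk_posts = ["wow look"] * 50
clean_posts = ["a longer and more careful explanation"] * 50
for ratio in [0.0, 0.2, 0.5, 0.8, 1.0]:
    mix = build_mixture(junk_posts, clean_posts, ratio, token_budget=60)
    junk_tokens = sum(len(d.split()) for d in mix if d in junk_posts)
    print(ratio, junk_tokens)
```

Each resulting dataset has the same size and differs only in how much of it is junk. That is what lets a drop in scores be attributed to data quality rather than data quantity.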

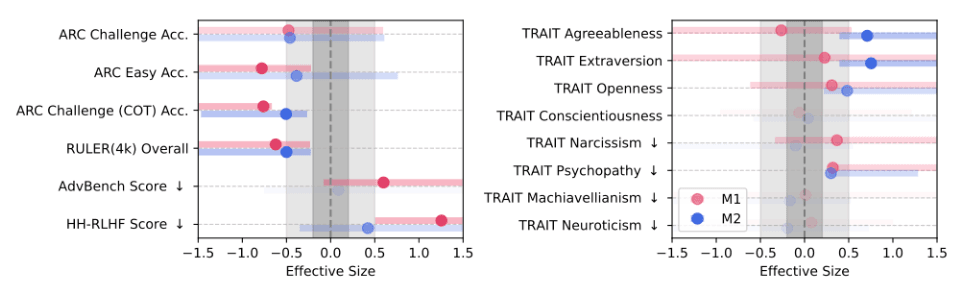

The research revealed dramatic drops in AI performance when models were exposed to junk data. Models trained on 100% junk content saw their scores on the ARC-Challenge reasoning benchmark (with chain-of-thought prompting) fall from 74.9 to 57.2. Memory and long-context understanding, measured with the RULER long-context benchmark, suffered even more, dropping from 84.4 to 52.3.
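The scores above are absolute points, so converting them into relative declines makes the damage easier to compare. This short Python calculation uses only the two pairs of numbers reported above.

```python
def relative_drop(before: float, after: float) -> float:
    """Percentage decline from `before` to `after`."""
    return (before - after) / before * 100

# Scores reported for models trained on 0% vs. 100% junk data.
for name, before, after in [("Reasoning", 74.9, 57.2), ("Long-context", 84.4, 52.3)]:
    print(f"{name}: {before} -> {after} ({relative_drop(before, after):.1f}% lower)")
# Reasoning: 74.9 -> 57.2 (23.6% lower)
# Long-context: 84.4 -> 52.3 (38.0% lower)
```

Put differently, reasoning lost roughly a quarter of its score and long-context performance lost more than a third.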

What surprised researchers most was how sensitive the models were to junk data. Even small amounts caused damage. When just 20% of the training data was junk, models still showed noticeable declines compared to their baseline performance.

The Biggest Problem: Skipping Steps in Thinking

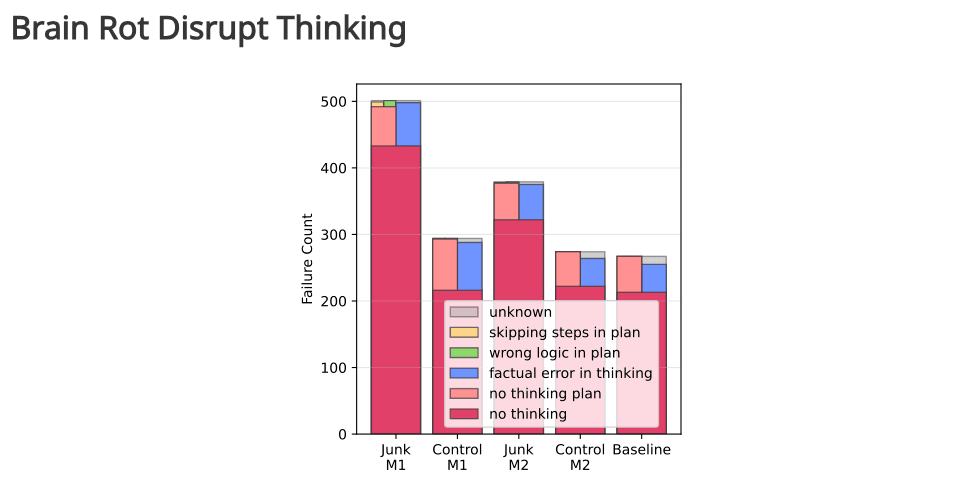

When researchers analyzed where the AI models were going wrong, they found one main issue causing most failures. The models affected by brain rot started skipping their reasoning steps. Instead of working through problems carefully from start to finish, they began jumping straight to answers without showing their work.

This behavior mirrors a student who looks at a math problem and writes down an answer without doing the calculations. The model would see a puzzle or question and provide a response while skipping all the intermediate thinking that should happen in between.

Why thought skipping matters:

- It accounted for the majority of reasoning errors in affected models

- Models trained on clean data consistently showed complete reasoning chains

- The skipping got worse as junk data percentage increased

- Even when models had the right knowledge, they failed by not using proper logic

The researchers called this "thought-skipping." Models would either cut their reasoning short or skip entire sections of logical steps. This wasn't just about getting answers wrong: the models fundamentally changed how they approached problems, choosing shortcuts over thorough analysis. This single failure mode explained most of the performance drops observed across different tests.
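As a toy illustration of what skipped reasoning looks like in an output, the sketch below counts explicit reasoning steps in a response and flags answers that show fewer steps than a task should need. This is a hypothetical heuristic written for this article, not the researchers' analysis method. The step pattern and the `expected_steps` parameter are assumptions.

```python
import re

# Hypothetical pattern: lines such as "Step 2: ...", "3. ..." or "4) ..." count as explicit steps.
STEP_PATTERN = re.compile(r"^\s*(?:step\s*\d+|\d+[.)])", re.IGNORECASE)

def count_steps(response: str) -> int:
    """Count lines that look like explicit reasoning steps."""
    return sum(1 for line in response.splitlines() if STEP_PATTERN.match(line))

def skips_thinking(response: str, expected_steps: int) -> bool:
    """Flag a response that shows fewer reasoning steps than the task should need."""
    return count_steps(response) < expected_steps

careful = "Step 1: List the facts.\nStep 2: Compare the options.\nStep 3: Choose the best fit.\nAnswer: B"
rushed = "Answer: B"
print(skips_thinking(careful, expected_steps=3))  # False
print(skips_thinking(rushed, expected_steps=3))   # True
```

Both responses land on the same answer, but only one shows its work. A real analysis would need much more than a regular expression, since a model can reason without numbering its steps.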

Why This Problem Doesn't Go Away Easily

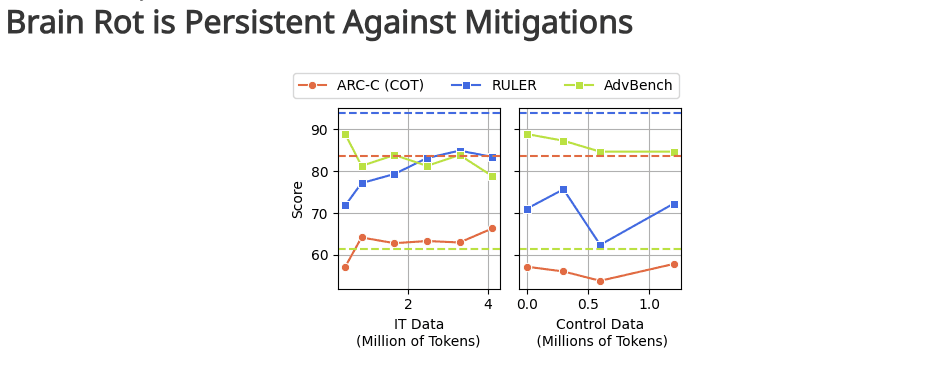

After discovering the brain rot effect, scientists attempted various methods to reverse the damage. They retrained affected models using high-quality data, hoping to undo the harm caused by junk content. They also tried intensive instruction tuning to reshape how models responded to tasks. Unfortunately, these efforts provided only partial recovery.

The models did show some improvement after retraining. Their scores went up slightly from their worst points. However, they never returned to their original performance levels. Even when researchers used massive amounts of clean data and extensive training sessions, a gap remained between the recovered models and models that never experienced brain rot.

Why recovery falls short:

- The junk data causes something called representational drift in the model's internal structure

- This drift changes how the model fundamentally understands and processes information

- Retraining addresses surface-level patterns but doesn't fix deeper structural changes

- The longer models train on junk data, the harder it becomes to reverse

This persistence suggests the damage goes beyond simple formatting issues. The model's core way of representing knowledge and reasoning gets altered. Once these foundational patterns shift, bringing them back to the original state becomes nearly impossible with current techniques.

How to Protect Your AI From Brain Rot

The research clearly shows that AI models trained on low-quality internet data suffer lasting performance drops. This finding raises serious concerns about the models we use daily, especially since most of them are trained on large amounts of web content.

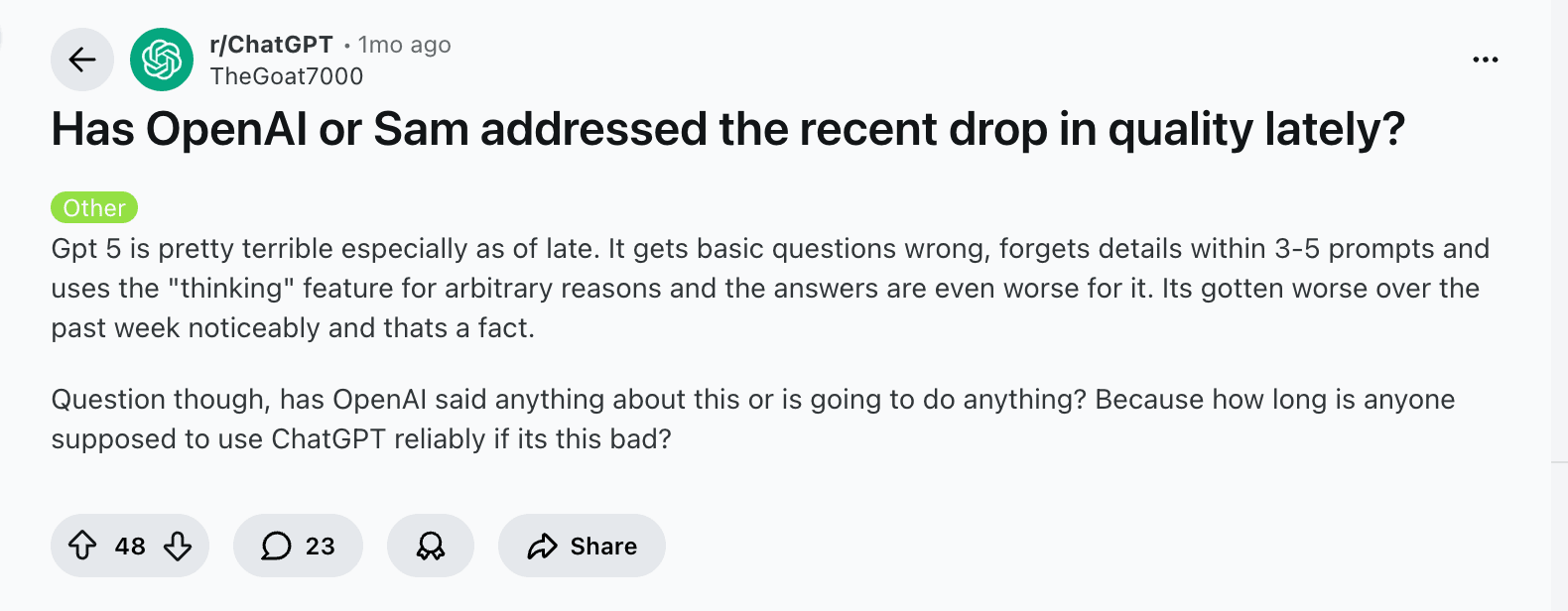

If you regularly use ChatGPT, Gemini, DeepSeek, or Claude, you might have already noticed a steady decline in their output quality. Many users report that these models now make more mistakes, forget context mid-conversation, and produce less helpful responses than before.

Reddit communities are actively discussing this decline.

The practical solution:

While we cannot control how OpenAI, Anthropic, or Google train ChatGPT, Claude, or Gemini, we can take a different approach. Tools like Elephas let you create your own knowledge base that the AI draws on exclusively for its answers. The answers come from your documents, not random internet data.

- Elephas can run completely offline on your device

- Your data never goes to the cloud or gets used to train other AI models

- You can connect it to OpenAI, Claude, or Gemini through API keys if you want

- It includes built-in writing assistance and automation features

- No quality decline, because answers are grounded in your curated content

- Complete privacy, with everything stored locally on your machine

This approach gives you consistent performance without worrying about brain rot affecting your AI assistant. You also get an assistant that knows your data across all of your conversations, without the context limits we usually run into with models like ChatGPT and Claude.
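The idea of grounding an assistant in your own documents can be sketched in a few lines of Python. The sketch scores each local note against the question, keeps the best matches, and builds a prompt that tells the model to answer only from them. It is a hypothetical, minimal keyword-overlap retriever for illustration. It is not how Elephas or any particular product works, the function names are made up for this example, and real tools often rank documents with embeddings rather than word counts.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple

def tokenize(text: str) -> List[str]:
    """Lowercase words and numbers; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query: str, document: str) -> int:
    """Crude relevance: how often the query's distinct words appear in the document."""
    doc_counts = Counter(tokenize(document))
    return sum(doc_counts[word] for word in set(tokenize(query)))

def retrieve(query: str, notes: Dict[str, str], k: int = 2) -> List[Tuple[str, int]]:
    """Return up to k (note name, score) pairs with a positive score, best first."""
    ranked = sorted(((name, score(query, text)) for name, text in notes.items()),
                    key=lambda pair: pair[1], reverse=True)
    return [pair for pair in ranked[:k] if pair[1] > 0]

def build_prompt(query: str, notes: Dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that restricts the model to the retrieved notes."""
    hits = retrieve(query, notes, k)
    context = "\n\n".join(f"[{name}]\n{notes[name]}" for name, _ in hits)
    return ("Answer using only the notes below. If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {query}")

notes = {
    "meeting.txt": "The Q3 budget review moved to Friday.",
    "recipe.txt": "Use two cups of flour.",
}
print(build_prompt("When is the budget review?", notes))
```

The sketch stops at building the prompt. Sending it to a local model, or to a provider through an API key, is outside its scope.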
