12 Best Offline AI Models in 2026 | Run AI Models Offline: Complete Guide and Benchmarks to Offline AI Model Use

In July 2025, OpenAI CEO Sam Altman warned users not to share personal or sensitive information with ChatGPT because the conversations lack legal privacy protections. He said this during an appearance on episode 599 of the podcast "This Past Weekend with Theo Von".

This warning highlights why more people are turning to offline AI models that keep everything on their own computers. In this guide to the 12 best offline AI models of 2026, we'll explore the most powerful offline AI models you can run directly on your Mac, without needing an internet connection or paying any subscription fees.

Here is what we are going to cover:

- Top 12 offline AI models with detailed specs and performance

- Hardware requirements for each model size

- Real user experiences and benchmark comparisons

- Step-by-step guide to downloading models on Mac

- Specialized models for coding, writing, and multilingual tasks

- How to maximize your offline AI setup with Elephas

- Which model works best for your specific needs and Mac configuration

By the end of this article, you'll have complete knowledge of every major offline AI model available today, understand exactly which ones will run on your Mac, and know how to set up a complete AI workspace that keeps your data private while delivering professional-grade results.

Let's get into it.

Best Offline AI Models of 2026 at a Glance

- DeepSeek R1 - Best for complex problem-solving and step-by-step reasoning tasks

- Llama 3.3 70B - Best for replacing expensive cloud AI services with GPT-4 level performance

- Llama 3.2 - Best for entry-level Macs and everyday writing tasks

- Qwen 2.5 - Best for multilingual support and international users

- Mistral 7B - Best for reliable performance on older Macs with limited RAM

- Qwen 2.5 Coder - Best for developers wanting an offline GitHub Copilot replacement

- DeepSeek Coder - Best for Python developers and web development projects

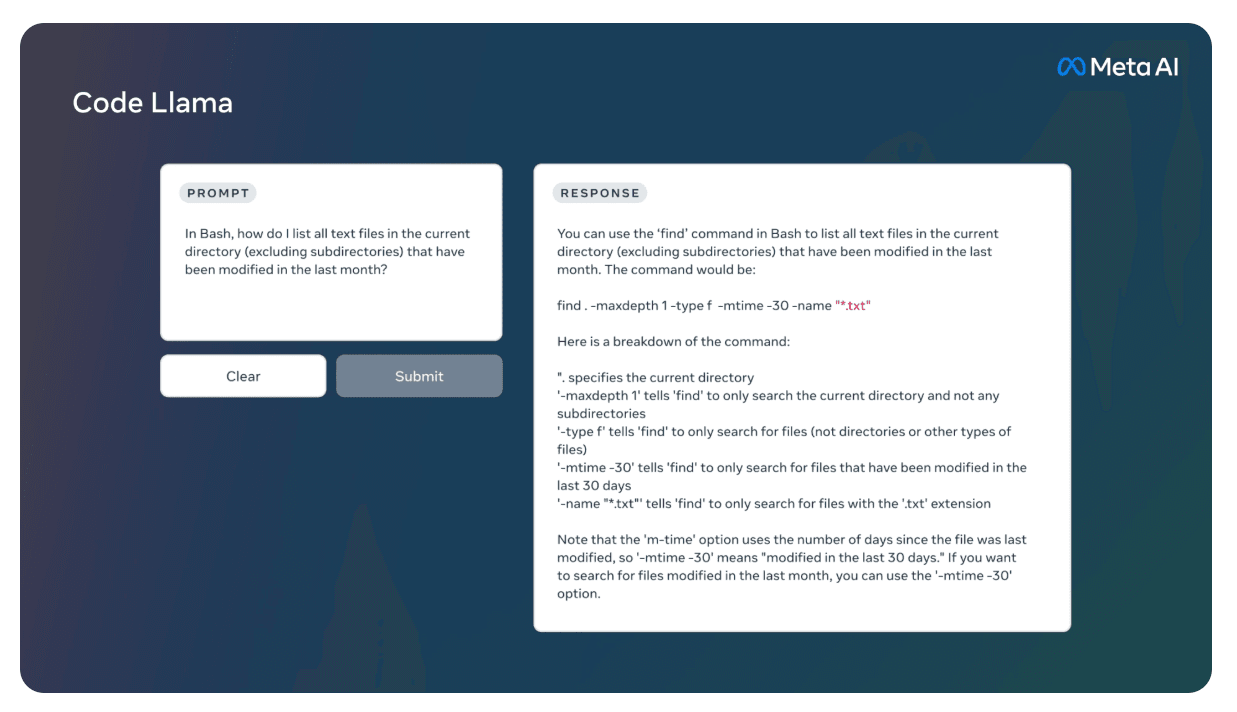

- Code Llama - Best for full-stack developers needing code completion features

- Phi-4 14B - Best for users wanting large model capabilities without high RAM requirements

- Nous-Hermes - Best for natural conversations and chatbot development

- WizardCoder - Best for developers needing strong instruction-following for coding tasks

- Stable Diffusion - Best for creating images from text descriptions and visual content

1. DeepSeek R1 (7B/14B/32B/70B) - Revolutionary reasoning breakthrough

DeepSeek, a Chinese AI company, released DeepSeek R1 on January 20, 2025. This open-source model comes with an MIT license and offers reasoning abilities similar to OpenAI's o1 models. Users can download it through Ollama, from LM Studio, or directly from Hugging Face. It comes in sizes from 1.5B to 671B parameters to fit different Mac configurations: the full model has 671B parameters, while the 1.5B to 70B versions are smaller models distilled from it on top of Qwen and Llama base models.

Why users love it:

DeepSeek R1 brings advanced reasoning to Mac users without needing an internet connection. The model shows its thinking process step by step, helping users understand how it reaches answers. It can solve complex problems, write code, and handle math tasks that other local models struggle with. The MIT license means anyone can use it for free, even for business purposes.
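DeepSeek R1 writes its reasoning before the final answer, typically wrapped in <think> and </think> tags. If you call the model from your own scripts, you may want to show or hide that reasoning separately. Below is a minimal Python sketch, assuming the raw output contains both tags (recent Ollama versions may also return reasoning in a separate field, in which case this step is unnecessary). The helper name split_reasoning and the sample text are hypothetical.

```python
import re

# Non-greedy match of the first <think>...</think> block; DOTALL lets "."
# span the newlines inside the reasoning.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Split raw DeepSeek R1 output into (reasoning, answer).

    Assumes the reasoning is wrapped in <think>...</think>. If no such block
    is found, the whole text is returned as the answer.
    """
    match = THINK_PATTERN.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the tags is treated as the final answer.
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    # Hypothetical model output, used only to show the split.
    raw = "<think>\nThe user wants 2 + 2, which is 4.\n</think>\n\nThe answer is 4."
    thinking, answer = split_reasoning(raw)
    print("Reasoning:", thinking)
    print("Answer:", answer)
```

Keeping the split in one small function makes it easy to log the reasoning for debugging while showing users only the answer.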

Hardware requirements:

- Best performance: M4 Max with 64GB+ RAM for 32B variant

- Minimum requirements: M1 with 16GB RAM for 7B variant

Model Benchmarks

General Knowledge & Reasoning

- MMLU: 90.8%

- MMLU-Pro: 84.0%

- GPQA Diamond: 71.5%

Math & Coding

- MATH-500: 97.3%

- AIME 2024: 79.8%

- HumanEval: 85.0%+

- Codeforces: 2,029 Elo (outperforms 96.3% of human participants)

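Source: DeepSeek R1 Technical Report (these scores are for the full 671B model; the smaller distilled versions you can run on most Macs score lower)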

A Dev.to benchmark showed 55 tokens/sec for the 7B model on an M2 MacBook Pro with 16GB RAM.
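To check a figure like this on your own Mac, you can run a model with "ollama run deepseek-r1:7b --verbose", which prints an eval rate after each reply. Alternatively, compute it from the eval_count (generated tokens) and eval_duration (nanoseconds) fields returned by Ollama's local API. Here is a minimal sketch of that calculation; the function name and the sample numbers are hypothetical, not a real measurement.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama /api/generate response.

    eval_count is the number of generated tokens; eval_duration is the time
    spent generating them, in nanoseconds.
    """
    count = response["eval_count"]
    duration_ns = response["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Multiply first so whole-number inputs stay exact before dividing.
    return count * 1e9 / duration_ns


if __name__ == "__main__":
    # Hypothetical values: 110 tokens generated in 2 seconds.
    sample = {"eval_count": 110, "eval_duration": 2_000_000_000}
    print(f"{tokens_per_second(sample):.1f} tokens/sec")  # 55.0 tokens/sec
```

This measures generation speed only; prompt processing time is reported separately, so short prompts and long prompts can feel different even at the same eval rate.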

Who it is Best for:

Developers, students, and professionals who need strong problem-solving abilities offline. Perfect for debugging code, solving math problems, writing technical documents, and any task that needs step-by-step reasoning. Great for education since it shows how it thinks through problems.

Real user experience:

"DeepSeek R1 solved complex logic problems I couldn't get other local models to handle" - MacRumors forum user.

2. Llama 3.3 70B - The GPT-4 killer for Macs

Meta AI (Meta was formerly Facebook) released Llama 3.3 on December 6, 2024, as its most powerful open model. This 70-billion-parameter model was trained using 39.3 million GPU hours and supports a 128,000-token context length. Users can download it through Ollama or Hugging Face, or use it in LM Studio with built-in optimizations. The model is released under the Llama 3.3 Community License, which allows free use, including commercial use, subject to its terms.

Why users love it:

Llama 3.3 70B delivers performance that matches GPT-4 while running completely offline on Mac hardware. Tech experts call it "genuinely GPT-4 class" after testing it on their Macs. The model scores higher than Claude 3 Opus on benchmarks while using far less memory than Llama 3.1 405B. Heavy users can save hundreds of dollars a month compared to cloud APIs.

Hardware requirements:

- Best performance: Mac Studio with 128GB+ RAM

- Minimum requirements: 64GB RAM (45GB needed for Q4 quantized version)
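Where do numbers like 45GB come from? A common rule of thumb, and an approximation rather than a vendor formula, is that model weights take about parameters × bits per weight ÷ 8 bytes, plus extra room for the context (KV cache) and runtime buffers. The sketch below assumes a 1.2× overhead factor purely for illustration. Real 4-bit formats such as Q4_K_M use slightly more than 4 bits per weight, so treat the results as ballpark figures.

```python
def estimate_memory_gb(params_billion: float, bits_per_weight: float,
                       overhead: float = 1.2) -> float:
    """Rough RAM needed to run a quantized model, in GB.

    Weights take params x bits / 8 bytes. `overhead` is an assumed
    multiplier (not a vendor figure) for the context/KV cache and runtime
    buffers; real usage depends on the app, quantization format and context.
    """
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * overhead


if __name__ == "__main__":
    models = [
        ("Llama 3.2 3B", 3, 4),
        ("Mistral 7B", 7.3, 4),
        ("Phi-4 14B", 14, 8),
        ("Llama 3.3 70B", 70, 4),
    ]
    for name, params, bits in models:
        print(f"{name} at {bits}-bit: ~{estimate_memory_gb(params, bits):.0f} GB")
```

Under these assumptions, the 70B model at 4-bit comes out at about 42 GB, in the same range as the 45GB figure above. macOS also reserves part of unified memory for the system and other apps, which is one reason a 64GB Mac is the practical minimum here.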

Model Benchmarks

General Knowledge

- MMLU Chat (0-shot): 86.0%

- MMLU-Pro (5-shot): 68.9%

- GPQA Diamond: 50.5%

Coding & Instruction Following

- HumanEval: 88.4%

- IFEval (instruction following): 92.1%

Source: Meta Llama 3.3 70B

Who it is Best for:

Professionals replacing cloud AI services, researchers needing GPT-4 level capabilities offline, writers creating long-form content, and developers building AI applications. Excellent for complex tasks like data analysis, creative writing, and technical documentation that need high-quality outputs.

Real user experience:

"I tried a lot of models and always turn back to llama3.3 70b. It follows prompts very well and can perform any general task I throw at it. For working with multilingual texts as well as web search, title generation etc." - Reddit user.

3. Llama 3.2 (1B/3B) - Perfect for everyday Macs

Meta AI launched Llama 3.2 on September 25, 2024, at its Connect conference. These smaller models are designed for edge devices and personal computers and support 128,000-token context windows. Download options include Ollama and Hugging Face. All models use the Llama 3.2 Community License.

Why users love it:

Llama 3.2 makes AI accessible on regular MacBooks without expensive upgrades. The 3B model runs smoothly on a base M1 MacBook Air with just 8GB RAM. Users report that it handles daily tasks like email writing, coding help, and document summaries well. The small size means quick downloads and fast responses.

Hardware requirements:

- Best performance: 16GB+ RAM for smooth operation

- Minimum requirements: 8GB RAM for 3B model, 4GB for 1B model

Model Benchmarks

- MMLU: 49.3% (1B), 63.4% (3B)

- IFEval: 59.5% (1B), 77.4% (3B)

- GSM8K: 44.4% (1B), 77.7% (3B)

- MGSM: 24.5% (1B), 58.2% (3B)

Source: Ollama

Performance benchmarks on GitHub show a consistent 60+ tokens/sec on M1 Max hardware.

Who it is Best for:

Students, casual users, and anyone with entry-level Macs who want AI assistance. Great for everyday writing tasks, quick coding help, language translation, and general questions. Perfect starting point for people new to local AI models.

Real user experience:

"This is the first small model that has worked so well for me and it's usable. It has a context window that does indeed remember things that were previously said without errors." - Reddit user.

4. Qwen 2.5 (7B/14B/32B/72B) - Multilingual powerhouse

Alibaba Cloud released Qwen 2.5 on September 19, 2024, trained on 18 trillion tokens. This model family has excellent multilingual support and comes in sizes from 0.5B to 72B parameters; this guide covers the 7B, 14B, 32B, and 72B versions. Users can download it through Ollama or Hugging Face, or get optimized versions from the MLX Community. Most sizes use the Apache 2.0 license, while the 3B and 72B models use Qwen's own licenses.

Why users love it:

Qwen 2.5 stands out for amazing multilingual support, handling over 29 languages smoothly. Users praise its ability to follow complex instructions and maintain context over long conversations. The model performs especially well on reasoning tasks and technical content. Many users switched from Llama models after seeing Qwen's superior non-English performance.

Hardware requirements:

- Best performance: 128GB+ RAM for 72B model

- Minimum requirements: 16GB RAM for 7B, 32GB for 14B, 64GB for 32B

Model Benchmarks:

General Performance

- MMLU (72B): 86.1%

- MMLU (7B): 74.2%

- GPQA: 59.1% (72B)

Coding & Math

- HumanEval (72B): 88.2%

- HumanEval (7B): 84.8%

- MATH (72B): 83.1%

- MATH (7B): 75.5%

- MBPP: 88.2% (72B)

Source: Qwen 2.5 Official Blog

Who it is Best for:

International users needing multilingual support, developers working with global teams, content creators targeting multiple languages, and researchers analyzing multilingual data. Excellent for translation tasks and cross-language communication.

Real user experience:

"I use Qwen, DeepSeek, paid ChatGPT, and paid Claude. I must say, I find myself using Qwen the most often. It's great, especially for a free model!" - Reddit user.

"Qwen 2.5 VL (72b and 32b) are by far the most impressive. Both landed right around 75% accuracy (equivalent to GPT-4o’s performance). Qwen 72b was only 0.4% above 32b. Within the margin of error." - Reddit user.

5. Mistral 7B - Community favorite for reliability

Mistral AI, a French company, released Mistral 7B on September 27, 2023, under the Apache 2.0 license. This 7.3-billion-parameter model became popular for its efficiency and reliability. Download options include Ollama, Hugging Face, and LM Studio's model gallery. The model uses sliding window attention, in which each token attends only to a fixed window of recent tokens, to handle long inputs with less memory and compute.
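To make "sliding window attention" concrete, here is a minimal sketch of the attention mask it implies: a token at position i may look at position j only if j is not in the future and is within the last W positions. This is an illustration of the general technique, not Mistral's actual implementation. The function name is hypothetical, and the tiny window is chosen for display (the Mistral 7B paper uses a window of 4,096 tokens).

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and limited to the most recent `window` positions,
    i.e. i - window < j <= i.
    """
    return [[(j <= i) and (i - j < window) for j in range(seq_len)]
            for i in range(seq_len)]


if __name__ == "__main__":
    # 6 tokens, window of 3: each row shows which earlier tokens one token sees.
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))
```

Because each row has at most W allowed positions, the attention cost per token stays fixed as the input grows. Stacking layers still lets information travel further back than W tokens, since each layer passes along what the previous one saw.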

Why users love it:

Mistral 7B offers the best balance between size and performance, running fast even on older Macs. Users consistently praise its reliable outputs and efficient memory usage. The model rarely produces errors or nonsense, making it dependable for production use. Community tests show it delivers more tokens per second per GB of RAM than any competitor.

Hardware requirements:

- Best performance: 16GB+ RAM

- Minimum requirements: 8GB RAM (with careful memory management)

Model Benchmarks:

- MMLU: 60.1%

- HellaSwag: 81.3%

- PIQA: 83.0%

- Winogrande: 78.4%

- HumanEval: 29.8%

- MBPP: 47.5%

Source: Mistral 7B

Who it is Best for:

Users with limited RAM who need reliable AI assistance, developers building lightweight AI applications, and anyone wanting fast responses. Perfect for chatbots, content generation, and code assistance where speed matters more than absolute quality.

Real user experience:

"7B is convenient because it can be fit in 4Gb/6Gb/8Gb VRAM with proper quantization, while still remain reasonable and keep track on much more complex logic than 3b models can. I'm honestly yet to find a good 3b model." - Reddit user.

6. Qwen 2.5 Coder (7B/14B/32B) - Developer's coding companion

Alibaba Cloud released Qwen 2.5 Coder in November 2024, trained on 5.5 trillion code tokens. This specialized version supports 92 programming languages with a 128K context window. Download it through Ollama or from Hugging Face. It uses the Apache 2.0 license, which allows free commercial use.

Why users love it:

Qwen 2.5 Coder matches or beats GitHub Copilot for code generation while running offline. Developers report switching from paid services after testing this model. It understands context better than general models and produces cleaner, more efficient code. The model handles code refactoring, bug fixes, and new feature development smoothly.

Hardware requirements:

- Best performance: 64GB+ RAM for 32B model

- Minimum requirements: 16GB RAM for 7B, 32GB for 14B

Model Benchmarks:

- HumanEval (32B): 92.7%

- HumanEval (7B): 88.4%

- MBPP (32B): 86.8%

- MultiPL-E: 85.0%+ average

- DS-1000: Strong performance across Python libraries

Source: Qwen 2.5 Coder GitHub

Who it is Best for:

Software developers, data scientists, DevOps engineers, and anyone writing code regularly. Excellent for code completion, debugging, refactoring, and learning new programming languages. Works well with VS Code through the Continue extension.

Real user experience:

"It is the best coder at that size and my main local coding model. Rarely, I need to go to Claude for complex stuff. I would like a 72b qwen coder, I think it would meet my coding tasks also for the most complex ones." - Reddit user.

7. DeepSeek Coder (6.7B/33B) - Strong coding benchmarks

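DeepSeek released DeepSeek Coder V1 in November 2023 and V2 in June 2024. The original release ranges from 1.3B to 33B parameters (including the 6.7B and 33B models covered here) and supports 87 programming languages; V2 uses a mixture-of-experts design in 16B (Lite) and 236B sizes. Download via Ollama or from Hugging Face. The code is MIT-licensed, and the model weights use DeepSeek's model license, which permits commercial use.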

Why developers use it:

DeepSeek Coder offers lower memory requirements than DeepSeek R1 while maintaining excellent coding performance. The model excels at Python development and handles complex code completion tasks smoothly. Developers appreciate its ability to understand project context and suggest relevant code improvements.

Hardware requirements:

- Best performance: 48GB+ RAM for 33B model

- Minimum requirements: 16GB RAM for 6.7B model

Model Benchmarks:

- HumanEval (33B): 79.3%

- HumanEval (6.7B): 78.6%

- MBPP (33B): 70.0%

- MultiPL-E: Strong multilingual coding

- DS-1000: 40.4% (33B)

Source: DeepSeek Coder GitHub repository

Who it is Best for:

Python developers, web developers, and programmers working on complex projects. Great for code review, optimization suggestions, and learning programming best practices. Particularly strong for backend development and algorithm implementation.

Real user experience:

"Just got this model last night, for a 7B it is soooo good at web coding!!! I have made a working calculator, pong, and flappy bird. I'm using the lite model by lmstudio. best of all I'm getting 16 tps on my ryzen!!!" - Reddit user.

8. Code Llama (7B/13B/34B/70B) - Meta's coding specialist

Meta AI released Code Llama on August 24, 2023, with the 70B version arriving January 29, 2024. It is built on Llama 2 and further trained on 500 billion tokens of code. Download it through Ollama (variants: 7b, 13b, 34b, 70b) or Hugging Face. It uses a custom license that allows commercial use.

Why developers use it:

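Code Llama supports fill-in-the-middle (FIM) completion in some variants, including the 7B and 13B base models. FIM lets the model complete code at the cursor using the text both before and after it, which makes it excellent for code completion within existing files. The Python-specialized versions deliver even better performance for Python developers. Meta's extensive testing ensures high-quality outputs across 40+ programming languages.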
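A FIM request is just a specially formatted prompt. Here is a minimal sketch that builds one, assuming the "<PRE> {prefix} <SUF>{suffix} <MID>" format shown in Ollama's Code Llama prompting guide. It is meant for a base code variant such as "codellama:7b-code", not the instruct models. The function name is hypothetical. The model's reply is the code that belongs between the prefix and the suffix.

```python
def codellama_fim_prompt(prefix: str, suffix: str) -> str:
    """Build a Code Llama fill-in-the-middle prompt.

    Format assumed from Ollama's Code Llama prompting guide:
    "<PRE> {prefix} <SUF>{suffix} <MID>". The model generates the code that
    belongs between `prefix` (before the cursor) and `suffix` (after it).
    """
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


if __name__ == "__main__":
    before_cursor = "def compute_gcd(x, y):\n    "
    after_cursor = "\n    return result\n"
    # Pass this string as the prompt to a code variant, e.g. codellama:7b-code.
    print(codellama_fim_prompt(before_cursor, after_cursor))
```

Editor plugins do the same thing behind the scenes: they split the file at your cursor, wrap both halves in these markers, and insert whatever the model returns.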

Hardware requirements:

- Best performance: Mac Studio with an Ultra chip and 128GB+ RAM for 70B

- Minimum requirements: 16GB RAM for 7B, 32GB for 13B

Model Benchmarks:

- HumanEval (34B): 53.7%

- HumanEval (70B): 67.0%

- HumanEval (7B): 33.5%

- MBPP (34B): 56.2%

- MBPP (70B): 65.0%

- MultiPL-E: Competitive across languages

Source: Meta Code Llama Blog

Who it is Best for:

Full-stack developers, teams needing code review assistance, and developers learning new languages. The 70B model rivals commercial services for complex programming tasks. Excellent integration with VS Code and other development environments.

Real user experience:

"It's good for large scale text processing where dumb but fast, cheap at scale, and reliable structured output and function calling matters more." - Reddit user.

9. Phi-4 14B - Microsoft's efficient champion

Microsoft Research released Phi-4 on December 12, 2024, under the MIT license. This 14-billion-parameter model was trained on 9.8 trillion tokens with a focus on reasoning and efficiency. Download it from Hugging Face or through Ollama. Despite its smaller size, it competes with much larger models.

Why users choose it:

Phi-4 delivers exceptional performance for its size, making it perfect for Macs with moderate RAM. Microsoft's training approach emphasized quality over quantity, resulting in outputs that match 70B+ models in many tasks. The model excels at mathematical reasoning and complex problem solving.

Hardware requirements:

- Best performance: 32GB RAM

- Minimum requirements: 16GB RAM

Model Benchmarks:

- MMLU: 84.6%

- GPQA: 53.6%

- GSM8K: 91.5%

- HumanEval: 82.6%

- HellaSwag: 89.1%

Source: Microsoft Phi-4 Documentation

Who it is Best for:

Users wanting larger model capabilities without extreme hardware requirements. Perfect for students, researchers, and professionals needing strong reasoning abilities. Excellent for mathematical problems, logical puzzles, and technical analysis.

Real user experience:

"As a GPU poor pleb with but a humble M4 Mac mini (24 GB RAM), my local LLM options are limited. As such, I've found Phi 4 (Q8, Unsloth variant) to be an extremely capable model for my hardware. My use cases are general knowledge questions and coding prompts. It's at least as good as GPT 3.5 in my experience and sets me on the right direction more often then not." - Reddit user.

10. Nous-Hermes (7B/13B/70B) - Conversation specialist

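Nous Research released its latest model, Hermes 3, in August 2024. It is built on Llama 3.1 and available in 8B, 70B, and 405B sizes; earlier Nous-Hermes releases built on Llama 2 came in 7B, 13B, and 70B sizes. The models are optimized for multi-turn dialogue and natural conversations. Download via Ollama, Hugging Face, or LM Studio. They use the Llama license.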

Community focus:

Nous-Hermes prioritizes natural conversation flow and context retention across long chats. The model remembers previous messages better than most alternatives and maintains personality consistency. Users praise its ability to engage in detailed discussions without losing track.

Hardware requirements:

- Best performance: 64GB+ RAM for 70B models

- Minimum requirements: 16GB RAM for 7B models

Model Benchmarks:

- MMLU (70B): 83.6%

- MMLU (13B): 59.2%

- MMLU (7B): 50.7%

- HellaSwag (70B): 87.3%

- TruthfulQA (70B): 62.1%

- HumanEval (13B): 59.0%

Source: Nous Research Models

Who it is Best for:

Customer service applications, chatbot development, roleplay scenarios, and extended conversations. Perfect for applications needing consistent personality and memory across interactions. Great for creative writing collaborations.

Real user experience:

"I just tested the new Theta version and found it pretty great, one of the best L3 versions I've tested. Their previous L3 also scored great on most of my tests." - Reddit user.

11. WizardCoder (15B/34B) - Specialized coding performance

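The WizardLM team released WizardCoder on June 16, 2023, with the latest 33B version (V1.1) arriving in January 2024. Rather than training from scratch, it fine-tunes existing code models (StarCoder for the 15B, Code Llama for the 34B, and DeepSeek Coder for the 33B V1.1) using the Evol-Instruct method for better instruction following. Download through Ollama (132K+ downloads) or Hugging Face. License terms follow each base model.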

Technical edge:

WizardCoder improves base models through special training that makes it understand coding instructions better. The Evol-Instruct method helps it generate more accurate and complete code solutions. Benchmark tests show it approaching GPT-3.5 performance levels.

Hardware requirements:

- Best performance: 48GB+ RAM for 34B

- Minimum requirements: 32GB RAM for 15B

Model Benchmarks:

- HumanEval (33B): 79.9%

- HumanEval (15B): 59.8%

- MBPP (33B): 78.9%

- HumanEval+ (33B): 73.2%

- MBPP+ (33B): 66.9%

Source: WizardCoder HuggingFace

Who it is Best for:

Developers needing strong instruction-following for coding tasks, teams working on complex software projects, and anyone wanting near-commercial performance offline. Particularly good for step-by-step code explanations.

12. Stable Diffusion - Visual creativity on Mac

Stable Diffusion launched in August 2022, and Apple's Core ML optimizations arrived on December 6, 2022, in macOS 13.1. Unlike text models, it creates images from text descriptions. You can download Draw Things for free from the App Store, use Apple's ml-stable-diffusion, or get Hugging Face's Diffusers app from the Mac App Store.

Mac implementation:

Apple optimized Stable Diffusion specifically for Mac hardware, making it much faster than the original versions. Draw Things provides one-click setup with no technical knowledge needed. It supports SD 1.5, SD 2.x, and SDXL, plus ControlNet and LoRA for advanced features.

Hardware requirements:

- Best performance: 32GB+ RAM

- Minimum requirements: M1 with 16GB RAM

Mac performance:

- M1 Max (64GB): 9 seconds for SD 1.5 512x512 image

- M2 (24GB): 21.9 seconds on the GPU, 13.1 seconds on the Apple Neural Engine (ANE)

- M4 Max: 22-24 seconds for SDXL 1024x1024

- 30-60 seconds per image typical on M1 Mac

Who it is Best for:

Artists, designers, content creators, and anyone wanting to create images from text. Great for concept art, illustrations, marketing materials, and creative exploration. More limited than the text models in this list, but the best option for local image generation on Mac.

How to Download Offline AI Models on a Mac

You can download offline AI models through LM Studio, Ollama, or Hugging Face. Out of all these options, Ollama is the easiest and most convenient way to get started.

Installing Models with Ollama

- Visit ollama.com and download the installer for Mac

- Open the downloaded file and drag Ollama to your Applications folder

- Launch Ollama and give it permission to install command line tools

- Browse the model library on ollama.com, pick a model, and copy its run command.

- Open Terminal and type "ollama run [model-name]" to download any model

- Use "ollama list" to see all your downloaded models in terminal.

- Start chatting with any model by typing "ollama run [model-name]"
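Once a model is downloaded, Ollama also runs a local HTTP server (by default on port 11434), so your own scripts can use the model without the Terminal chat. Here is a minimal Python sketch using only the standard library and Ollama's /api/generate endpoint with streaming turned off. It assumes Ollama is running and that "llama3.2" has already been pulled; swap in any model name from "ollama list". The helper names build_request and generate are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local port


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Create a non-streaming /api/generate request for a local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the model's full reply text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        # With "stream": False, Ollama returns one JSON object whose
        # "response" field holds the generated text.
        return json.loads(resp.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    # Requires a running Ollama instance and a pulled model ("ollama pull llama3.2").
    print(generate("llama3.2", "Explain in one sentence why local models help privacy."))
```

Everything stays on localhost, so the privacy benefit of running offline is preserved. The generous timeout allows for large models that take a while to load on first use.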

If you want a step-by-step guide, then check out our detailed article on how to download DeepSeek R1 on Mac for a complete walkthrough.

Managing AI models through the command line can be tricky, though. If you want an easier way, try AI Deck - our free Mac app that gives you a beautiful interface for all your Ollama models. You can discover new models, track downloads, and manage everything with simple clicks instead of typing commands.

Get More From Your Offline AI Models With Elephas

Running offline AI models on your Mac is great, but there's a way to make them even better. Elephas is a Mac app that works with all the models we just covered. It takes your offline setup and makes it way more useful.

Think about it - you have these powerful models running on your Mac, but you're probably just chatting with them. Elephas lets you do so much more. You can feed your own documents into these models, automate boring tasks, and build workflows that actually save you time.

The best part? Everything stays on your Mac. No data goes anywhere else. You get the same privacy benefits as running the models alone, but with tons more features. Your offline models become part of a complete workspace instead of just chat tools.

Also, local LLMs, and even top cloud models like Claude and OpenAI's models, can't process hundreds of documents at once, and they can hallucinate and give wrong information. Elephas can process thousands of files and is much less likely to hallucinate because its answers are grounded in your knowledge base.

What Makes Elephas Worth Using

- Super Brain - Turn your files into a smart database your AI can search through

- Workflows - Set up tasks that run automatically to handle routine work

- Writing Help - Fix grammar, rewrite text, and continue your writing

- Multiple Models - Switch between different AI providers as needed

- Note Apps - Connect with Obsidian, DEVONthink and other tools you already use

- Web Search - Find fresh information when your offline models need it

- File Support - Works with PDFs, documents, spreadsheets and more

Conclusion

Running AI models offline on your Mac gives you complete control over your data while saving money on cloud services. These twelve models cover everything from basic writing help to advanced coding and multilingual support. Whether you have an entry-level MacBook or a powerful Mac Studio, there's a model that fits your needs perfectly.

The best part about offline models is the privacy and freedom they provide. Your conversations and documents never leave your computer. You can work anywhere without internet and never worry about data breaches or usage limits. On top of that, the models themselves are free.

However, just running these models through terminal commands only scratches the surface of what's possible. Most people end up using maybe 10% of their model's true potential because they're limited to simple chat interactions.

This is where Elephas changes everything. Instead of just chatting with your offline models, you can build complete workflows, process hundreds of documents, and create a smart knowledge system that actually remembers your work history and that you can chat with.

Elephas turns your collection of offline models into a powerful workspace that grows smarter with every document you add, making your AI setup truly work for you.
