DeepSeek vs Llama (2026 Comparison): Which Local AI Model is Best?

In this article on DeepSeek vs. Llama, we'll compare these two AI models in detail.

Here is what we'll cover:

What is DeepSeek and its capabilities

What makes DeepSeek different

What is Llama and its capabilities

What makes Llama different

Cost comparison between both platforms

Reddit users' opinions on both models

Everyday use case tests on both models:

Coding performance

Topic explanation

Elephas - a Mac AI assistant that works with both models

Which AI is the best choice for different needs

By the end of this article, you'll have a clear understanding of the strengths and weaknesses of both AI models to make an informed decision about which one better suits your requirements.

Let's dive in.

What is DeepSeek?

DeepSeek is a Chinese AI company and chatbot that has quickly risen to prominence in the tech world. The company was founded in 2023 by Liang Wenfeng, a specialist in AI and quantitative finance, and its app has become the most downloaded free app on Apple's App Store in several countries. DeepSeek uses advanced AI models that can understand and generate human-like text while showing their reasoning process.

DeepSeek Capabilities

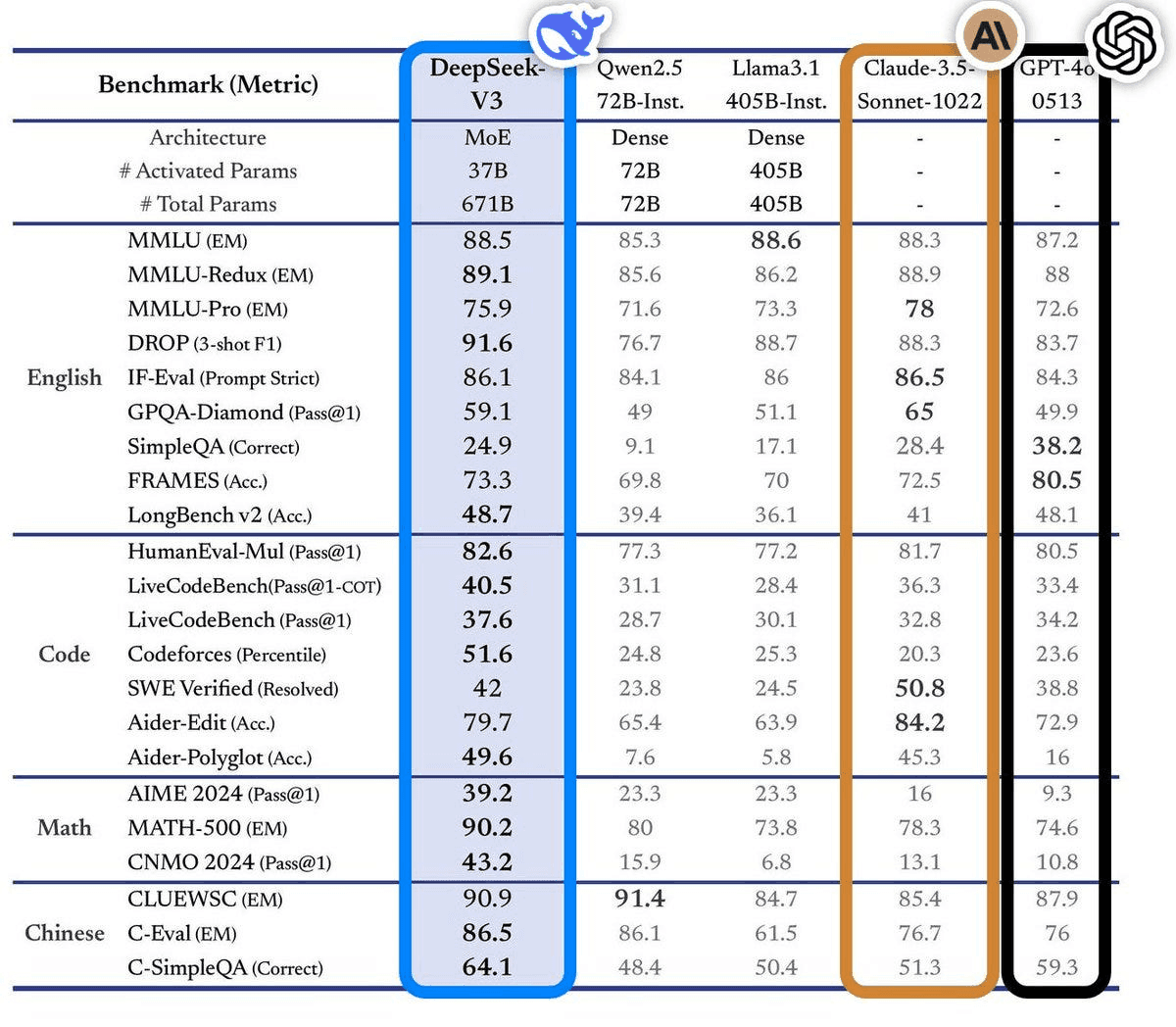

DeepSeek uses a Mixture of Experts (MoE) architecture with 671B total parameters, of which only about 37B are activated for any given token. Because a router sends each token to a small subset of expert sub-networks, the model achieves high performance while using fewer computational resources during operation.
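To make the idea of "activating only some experts" more concrete, here is a minimal sketch of top-k expert routing in Python. It is a toy illustration and not DeepSeek's actual router: the eight experts, the random router scores, and the function names (`route_token`, `moe_layer`) are all assumptions made for this example.

```python
import math
import random

def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    weight_sum = sum(probs[i] for i in chosen)
    return {i: probs[i] / weight_sum for i in chosen}

def moe_layer(token_value, experts, router_scores, top_k=2):
    """Run only the selected experts and blend their outputs by weight."""
    weights = route_token(router_scores, top_k)
    return sum(w * experts[i](token_value) for i, w in weights.items())

# Toy setup: 8 hypothetical experts, each a simple scaling function.
experts = [lambda x, scale=s: x * scale for s in range(1, 9)]
rng = random.Random(0)
router_scores = [rng.uniform(-1, 1) for _ in experts]

selected = route_token(router_scores, top_k=2)
output = moe_layer(3.0, experts, router_scores, top_k=2)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 37 / 671
print(sorted(selected), round(output, 3), f"{active_fraction:.1%}")
```

Only two of the eight toy experts do any work for this token, which is the source of the savings. With the figures quoted above, roughly 5.5% of DeepSeek's parameters are active per token.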

English Language Processing

DeepSeek excels in English language tasks with standout scores:

Achieves 91.6% on DROP (reading comprehension) - the highest among the models compared

Scores 89.1% on MMLU-Redux for general knowledge

Shows solid performance on IF-Eval (86.1%) for following instructions

Coding Capabilities

DeepSeek demonstrates exceptional coding abilities:

Leads the compared models in LiveCodeBench (40.5%)

Dominates in Codeforces with 51.6% - more than double some competitors

Shows strong code editing skills (79.7% in Aider-Edit)

Handles multiple programming languages effectively (49.6% in Aider-Polyglot)

Mathematical Reasoning

Mathematics is where DeepSeek truly shines:

Achieves an outstanding 90.2% on MATH-500

Scores 39.2% on AIME 2024 - significantly higher than Claude (16%) and GPT-4o (9.3%)

Performs exceptionally well on CNMO 2024 (43.2%)

Chinese Language Proficiency

As a Chinese-developed model, DeepSeek naturally excels in Chinese:

Strong performance on C-Eval (86.5%)

Leads in C-SimpleQA with 64.1%

Demonstrates true bilingual capabilities

This benchmark data confirms DeepSeek's position as a top-tier AI model with particular strengths in mathematics, coding, and multilingual processing.

Different DeepSeek Models

DeepSeek offers several models with different strengths:

DeepSeek R1 - The flagship model with 671 billion parameters (though only 37 billion are active at once)

DeepSeek-V3 - Their foundational language model

Distill versions - Smaller, more accessible versions that can run on less powerful hardware

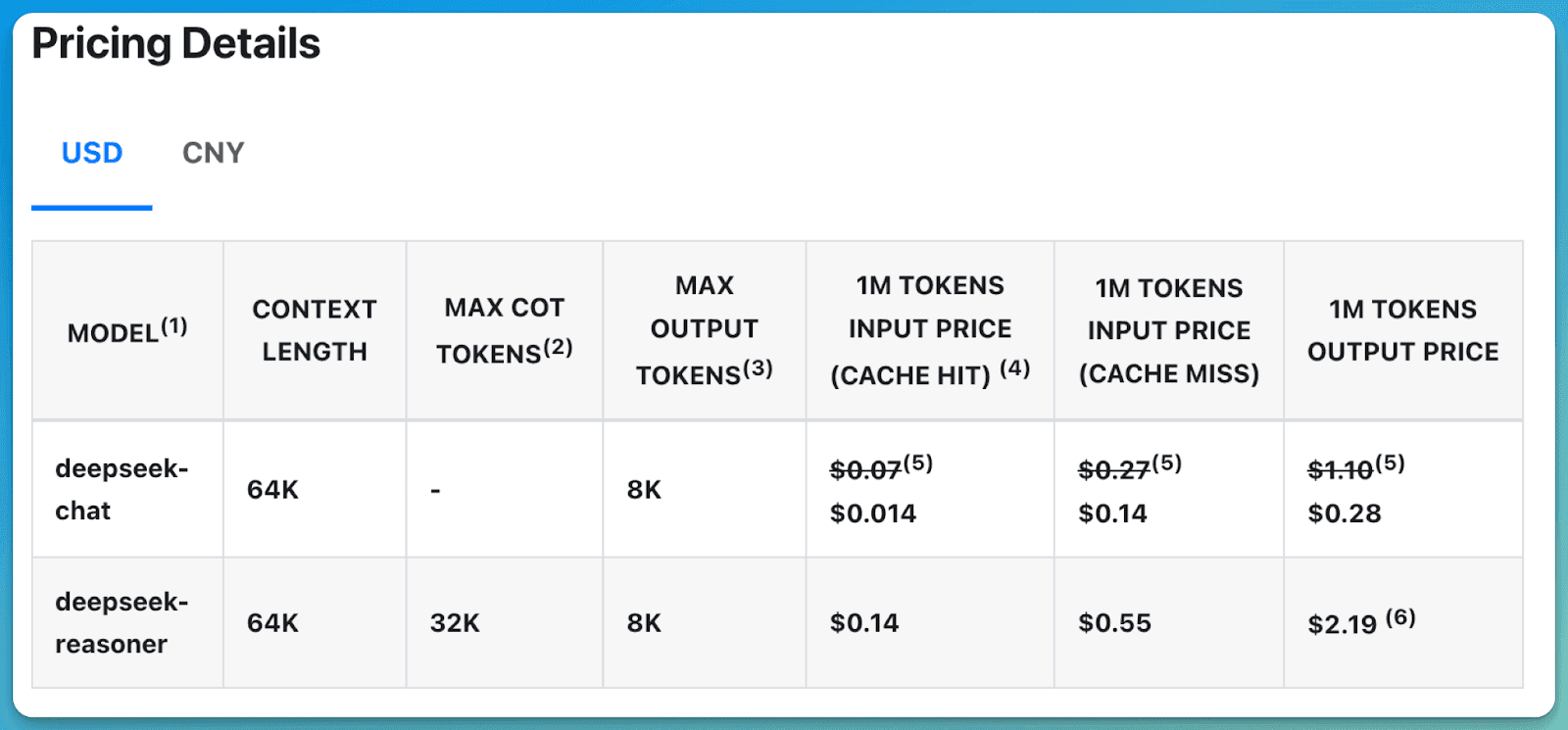

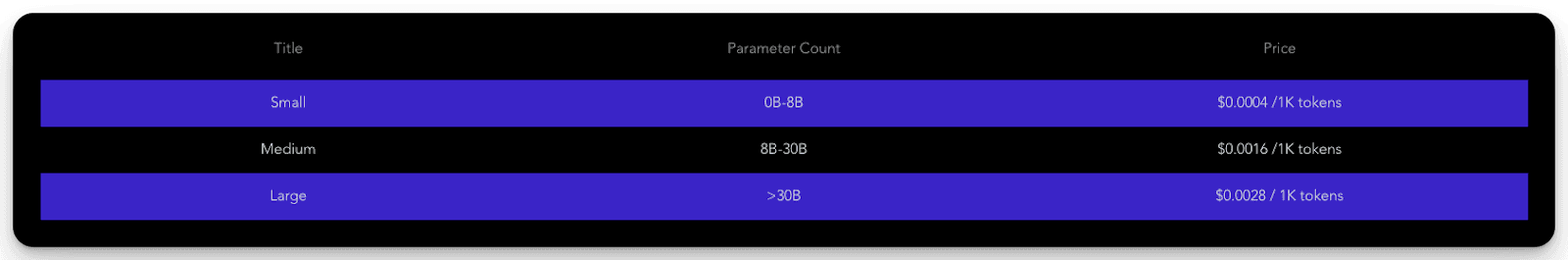

DeepSeek Pricing

What makes DeepSeek stand out is its cost structure. You can use it completely free, and you can even download a model to your own system and run it without an internet connection.

Although you probably cannot run the full 671B-parameter version on consumer hardware, you can download the smaller 7B-parameter model or another model that suits your system.
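A rough way to judge which model size suits your hardware is to estimate the memory needed for the weights alone: parameters multiplied by bits per weight, divided by 8. The sketch below assumes this simple formula plus a hypothetical 20% overhead margin for runtime buffers. Real usage depends on the runtime, context length, and quantization format, so treat the numbers as ballpark figures.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9

def fits(params_billions, bits_per_weight, available_gb, overhead=1.2):
    """True if weights plus a rough overhead margin fit in available memory.

    The 1.2 overhead factor is an assumption for illustration only.
    """
    needed = weight_memory_gb(params_billions, bits_per_weight) * overhead
    return needed <= available_gb

# Compare the small and full-size models at 16-bit and 4-bit precision.
for name, size in [("7B model", 7), ("671B model", 671)]:
    for bits in (16, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(size, bits):.1f} GB")

print("7B at 4-bit fits in 16 GB:", fits(7, 4, available_gb=16))
print("671B at 4-bit fits in 64 GB:", fits(671, 4, available_gb=64))
```

By this estimate, a 7B model quantized to 4 bits needs only a few gigabytes, while the 671B model needs hundreds of gigabytes even at 4 bits, which is why the smaller versions are the practical choice for most machines.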

If you are a developer and want to use the DeepSeek API, then the costs are as follows

What makes DeepSeek Different?

DeepSeek stands apart from other AI platforms through:

Open-source availability allowing developers to modify and build upon it

Step-by-step reasoning that mimics human thinking patterns

Lower memory requirements making it more accessible

Cost efficiency in both development and operation

Strong performance despite having fewer resources than Western competitors

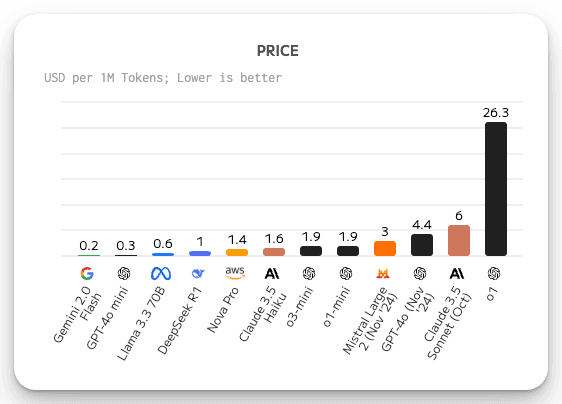

DeepSeek vs Llama Cost Comparison

When choosing between AI models, cost plays a major role in decision-making. The chart clearly shows that Llama offers better pricing compared to DeepSeek when looking at cost per million tokens processed.

Price comparison highlights:

Llama 3.3 70B costs approximately $0.60 per million tokens

DeepSeek Pro costs around $1 per million tokens

Llama is about 40% cheaper than DeepSeek for the same amount of processing

This price difference becomes significant when scaling AI applications or running frequent large queries. For businesses processing billions of tokens monthly, choosing Llama could save thousands of dollars in operational costs.
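To see how the price gap scales, here is a small Python sketch that uses the two per-million-token prices quoted above. The monthly token volumes are hypothetical examples, and real bills depend on the provider and on the split between input and output tokens.

```python
def monthly_cost(tokens_per_month, price_per_million):
    """Cost in dollars for a monthly token volume at a per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million

LLAMA_PRICE = 0.60     # $ per million tokens, as quoted above
DEEPSEEK_PRICE = 1.00  # $ per million tokens, as quoted above

# Relative discount of Llama compared with DeepSeek.
discount = (DEEPSEEK_PRICE - LLAMA_PRICE) / DEEPSEEK_PRICE

# Hypothetical monthly volumes: 1, 5, and 10 billion tokens.
for tokens in (1_000_000_000, 5_000_000_000, 10_000_000_000):
    saving = monthly_cost(tokens, DEEPSEEK_PRICE) - monthly_cost(tokens, LLAMA_PRICE)
    print(f"{tokens / 1e9:.0f}B tokens/month: saves ${saving:,.0f}")

print(f"relative discount: {discount:.0%}")
```

At these prices the saving is about $400 per month for every billion tokens, so it reaches the thousands of dollars once usage is in the range of several billion tokens a month.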

Both models sit in the affordable mid-range of AI pricing. They're much cheaper than premium options like Claude 3.5 Sonnet ($6) or the extremely expensive o1 model ($26.3), while offering better capabilities than the ultra-cheap options like Gemini 1.5 Flash ($0.2).

The cost advantage makes Llama particularly attractive for startups and developers who need capable AI without breaking their budget.

What is Llama?

Llama is a family of AI language models created by Meta (formerly Facebook). These models can understand text and generate human-like responses. Unlike similar AI models from companies like OpenAI and Google, Llama models are freely available for almost anyone to use for both research and business purposes.

Key features of Llama:

Available in different sizes (from 1 billion to 405 billion parameters)

Some versions can understand images as well as text

Works by predicting what text should come next based on patterns it learned

Trained on trillions of tokens of text from websites, books, and other sources

Llama models have become very popular with AI developers because they offer powerful capabilities without the restrictions of closed-source alternatives.
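The "predicting what text should come next" idea from the list above can be illustrated with a deliberately tiny example. This is not how Llama is implemented: Llama is a large neural network working on tokens, while the sketch below simply counts which word follows which in a made-up sentence. The function names and the corpus are assumptions for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# A made-up training sentence standing in for trillions of tokens.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)

print(predict_next(model, "the"))
print(predict_next(model, "on"))
```

In this toy corpus, "the" is most often followed by "cat", so that is the prediction. A real language model learns far richer patterns, but the basic task of choosing a likely continuation is the same.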

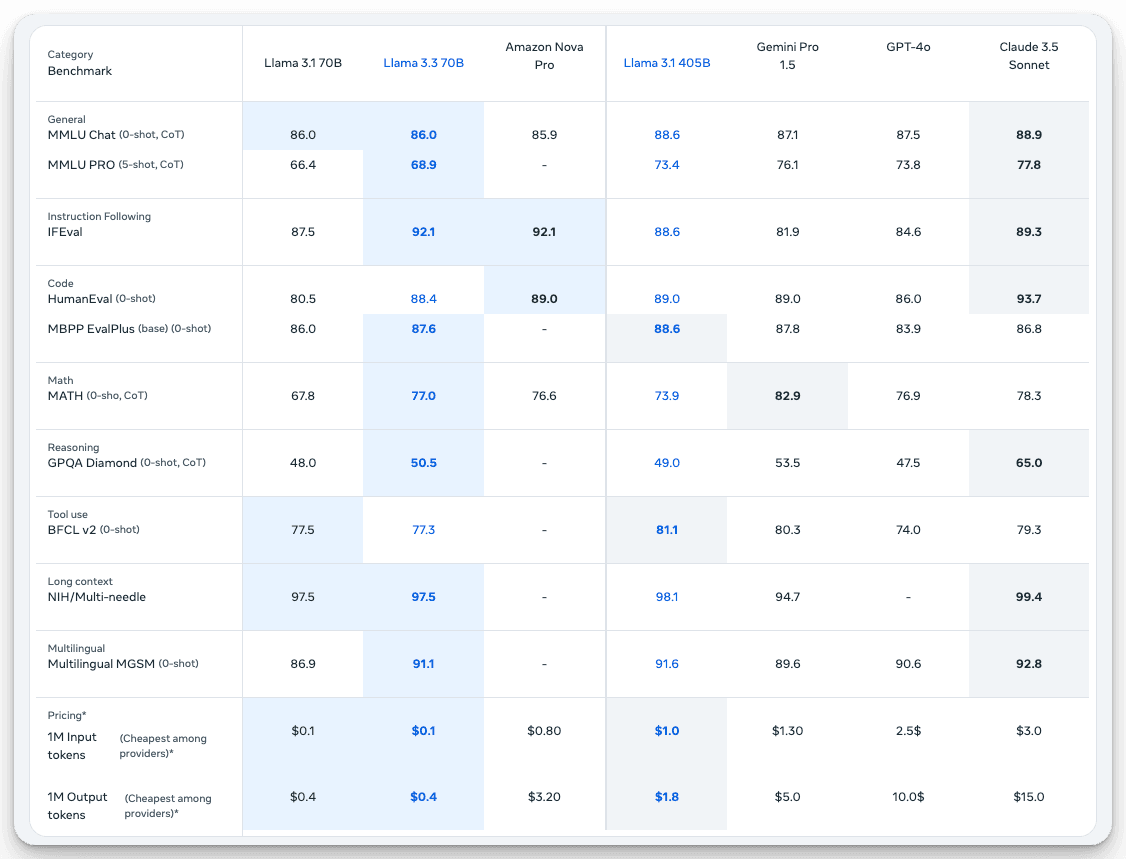

Llama Capabilities

Llama models show impressive performance across a wide range of AI benchmarks, making them versatile tools for many applications. Based on the benchmark data, Llama models deliver competitive results compared to other leading AI systems.

Standout capabilities:

Strong instruction following - Llama 3.3 70B scores 92.1 on IFEval, matching Amazon Nova Pro

Excellent long-context handling - Both Llama models achieve over 97.5 on the NIH/Multi-needle test

Improving code generation - The 3.3 70B version scores 88.4 on HumanEval, showing significant improvement over the 3.1 version

Multilingual proficiency - Scores over 90 on multilingual benchmarks, making it suitable for global applications

While Llama models still lag behind Claude in reasoning tasks (GPQA Diamond), they offer a remarkable balance of performance and affordability. At just $0.1 per million input tokens for the 70B models, Llama delivers enterprise-grade AI capabilities at a fraction of the cost of GPT-4o or Claude 3.5.

The larger 405B model provides even better performance across most metrics for users needing maximum capability.

Different Llama Models

Meta has released many versions of Llama models that vary in size and abilities. The number in each model name (like 8B or 405B) shows how many billions of parameters, or learned weights, the model has.

Key differences:

Smaller models (1B, 3B) can run directly on phones and laptops

"Instruct" versions are specially trained to follow human directions

"Vision" models can understand both text and images

Larger models (70B, 90B, 405B) are more powerful but need more computing resources

Recent releases include Llama 3.1 (July 2024), Llama 3.2 (September 2024), which added visual capabilities, and the text-only Llama 3.3 70B (December 2024).

Llama Pricing

Llama is completely free to use at Meta.ai, where you can use their most powerful model at no cost, or you can download one of their models that is compatible with your device.

For the Llama API, the costs are as follows; they are generally lower than the API pricing of other AI models.

What makes Llama Different?

Llama stands out as one of the largest openly available AI model families, with its flagship 405B version rivalling top proprietary systems. Unlike DeepSeek, Meta's infrastructure ensures reliable performance without frustrating "server busy" errors, even for lengthy chats at Meta.ai.

What makes Llama truly unique:

Completely free for research and most commercial uses

Full transparency with published research papers explaining how it works

Downloadable models that can run on your own hardware

Consistent reliability on Meta's platform without timeout issues

Strong performance that competes with closed-source alternatives

This open approach creates a level playing field for developers and businesses who don't want to be locked into closed AI ecosystems. As Mark Zuckerberg stated, this openness helps ensure "AI benefits aren't concentrated in just a few companies' hands."

Redditors' Opinions on DeepSeek vs Llama

Let's also look at what Redditors think. We found this post on Reddit:

The discussion revealed interesting perspectives from various users:

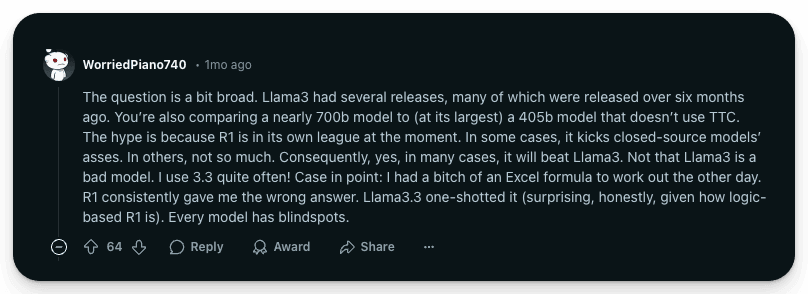

WorriedPiano740 pointed out the huge size difference: "You're comparing a nearly 700B model to a 405B model." They noted that R1 sometimes "kicks closed-source models' asses" but also shared a personal experience where Llama 3.3 solved an Excel formula that R1 couldn't.

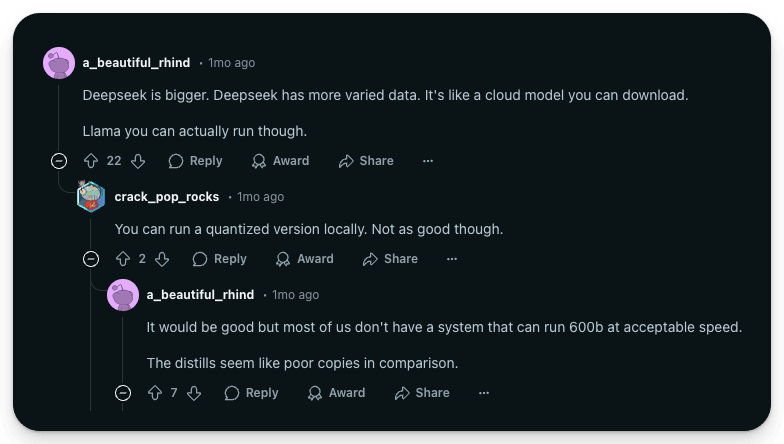

Several users clarified hardware requirements. According to a_beautiful_rhind, "Deepseek is bigger. Deepseek has more varied data. It's like a cloud model you can download. Llama you can actually run though." Running the full R1 model locally would require massive resources - around 400GB of memory.

On model strengths, stddealer explained: "Llama3 is better as a language model than r1. For more complex tasks that require reasoning, Deepseek r1 is better."

powerflower_khi highlighted a fundamental difference: "Deepseek has a loop back, compare and think, while the other process query based on certain subsets."

For creative applications, Expensive-Paint-9490 found R1 more impressive: "The result blows Llama, Mistral, and the other champions out of the water. DeepSeek-R1 has a creativity that the other models just don't have."

Most users acknowledged both models have their strengths, with the choice depending on specific use cases and available hardware.

Comparing DeepSeek vs Llama: Which is best?

To find out which is better, we are going to run a few practical, everyday tests on both models.

Note: As of now, most of the DeepSeek capabilities are affected by server issues, and they have even temporarily stopped the web search feature.

Also, we cannot perform all the tests that we usually run in our other LLM comparison articles, because DeepSeek lacks several features that Llama has, such as image generation and web search (temporarily stopped).

At the same time, Llama doesn't have key features that DeepSeek has, such as reasoning capabilities and the file or image upload feature. So we have to limit the tests to two, and those will be on coding and topic explanation.

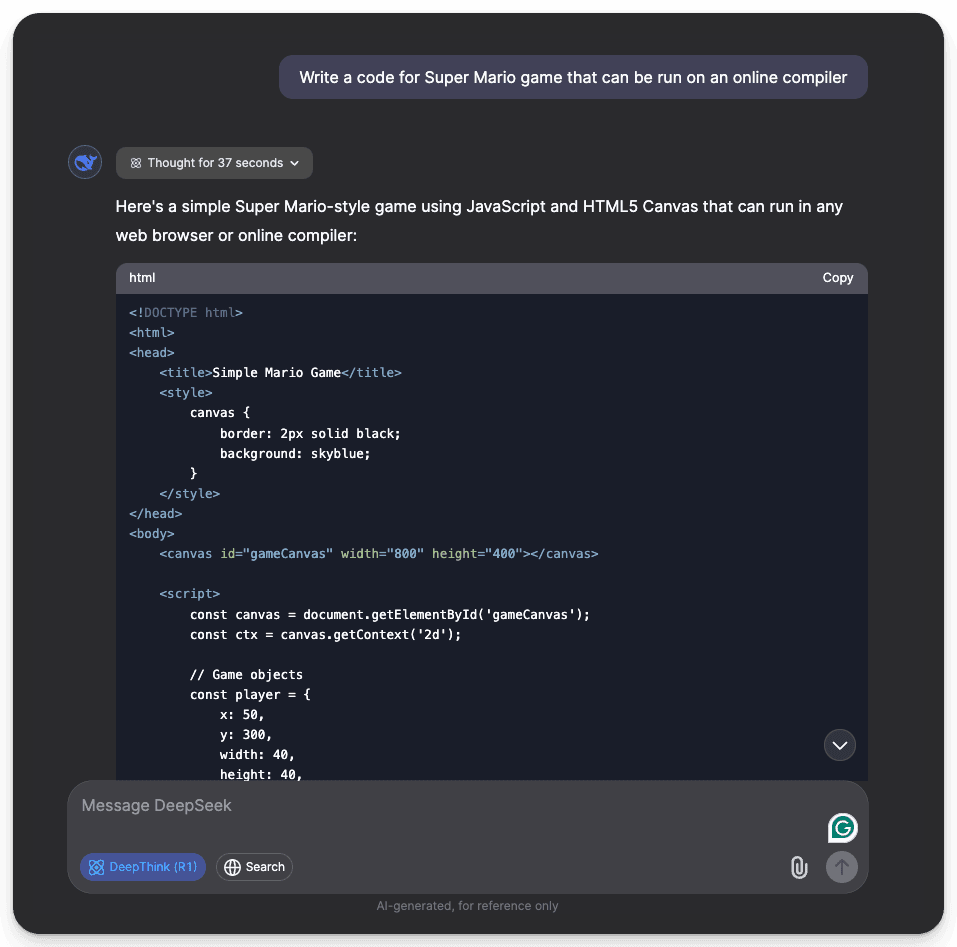

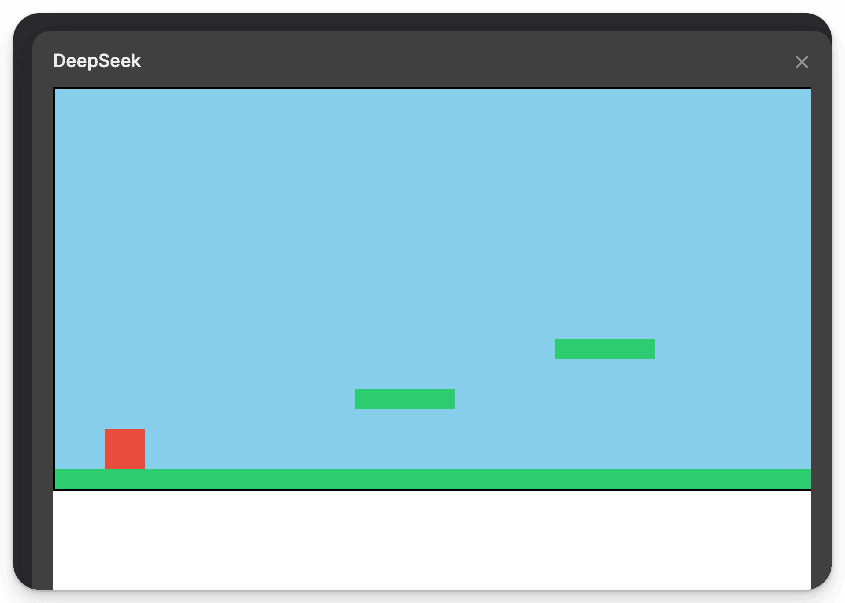

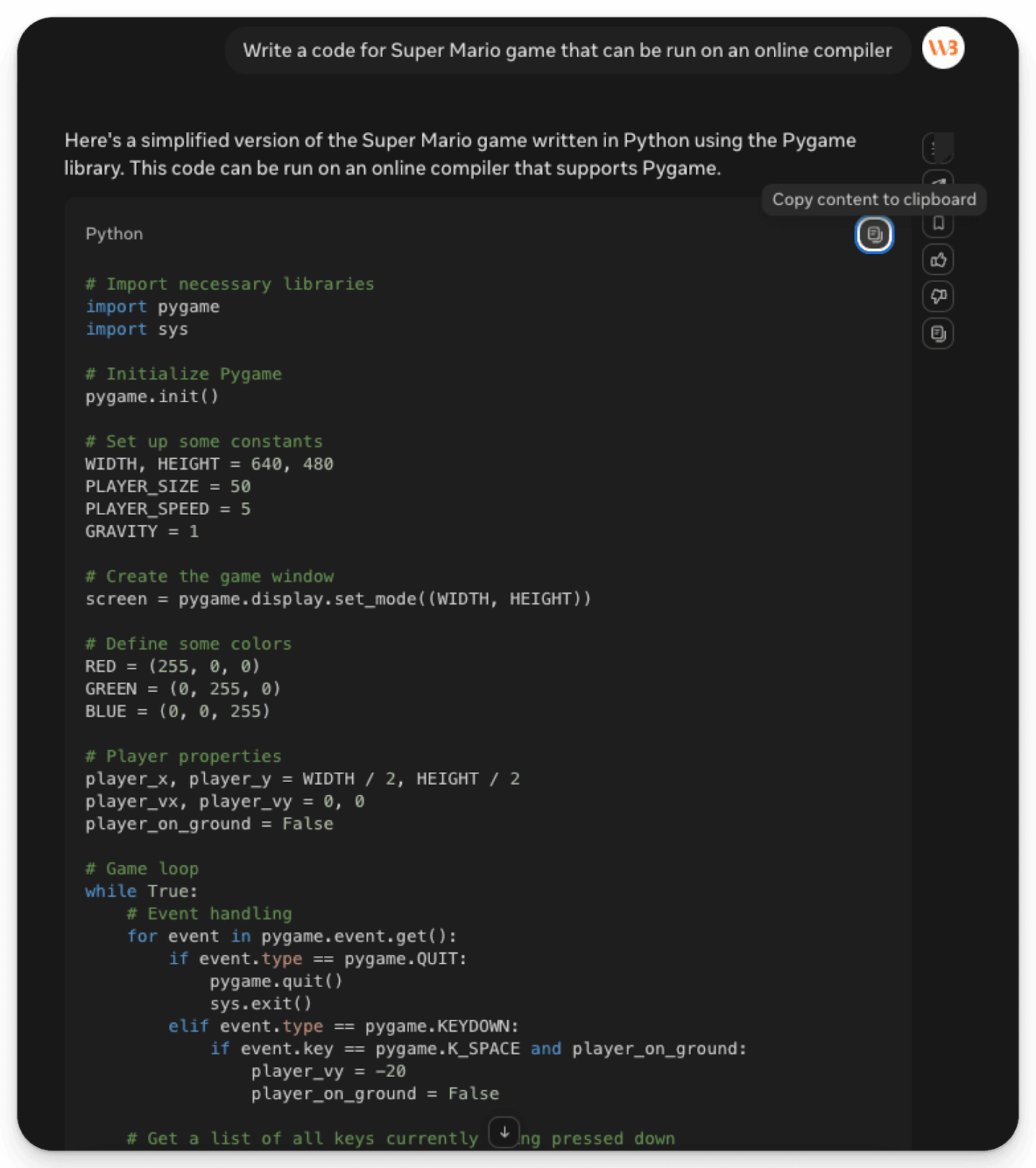

Test 1: Coding

I don't have much technical knowledge of coding, nor can I code. But as LLMs keep developing, building software is becoming easier than ever. So I asked both models to create a Mario game that can be played in an online compiler.

DeepSeek has a built-in code executor, so we could run the code directly, and it produced a good version of the game that is actually playable. Llama also generated code that ran in an online compiler, but that code only drew the surroundings rather than a playable game. In coding, DeepSeek wins.

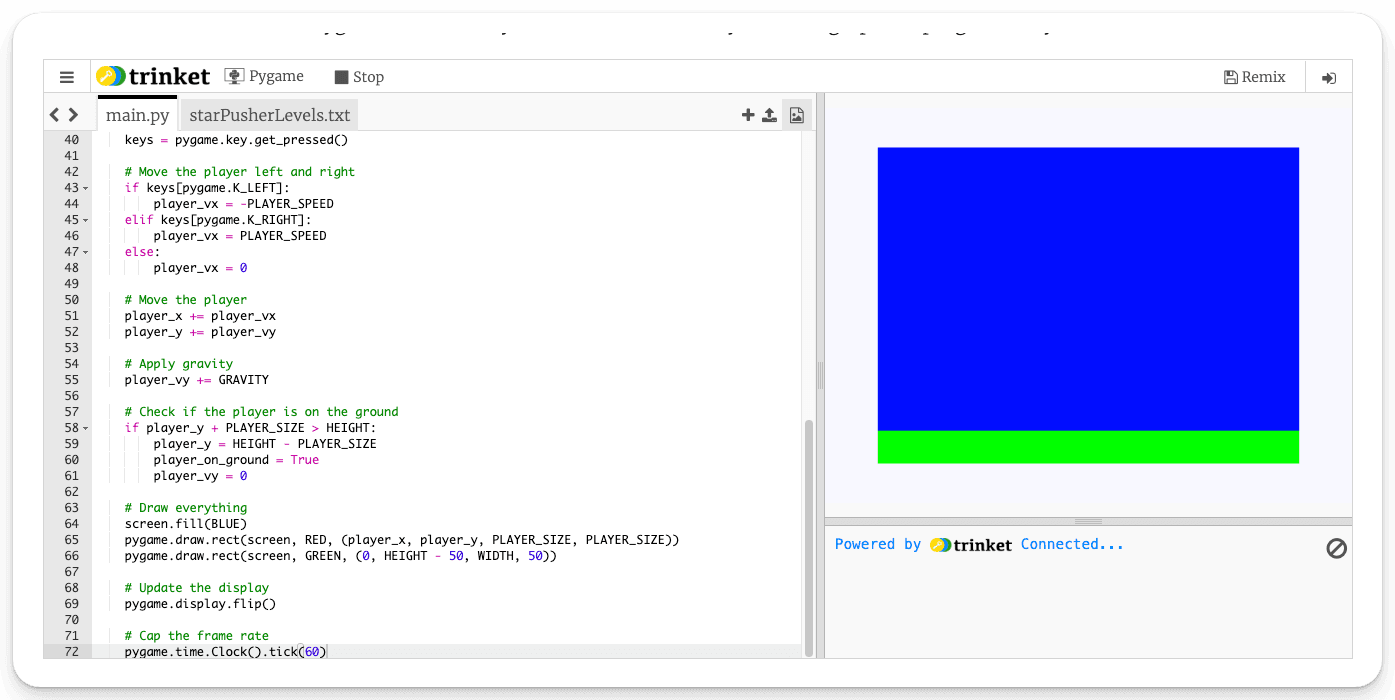

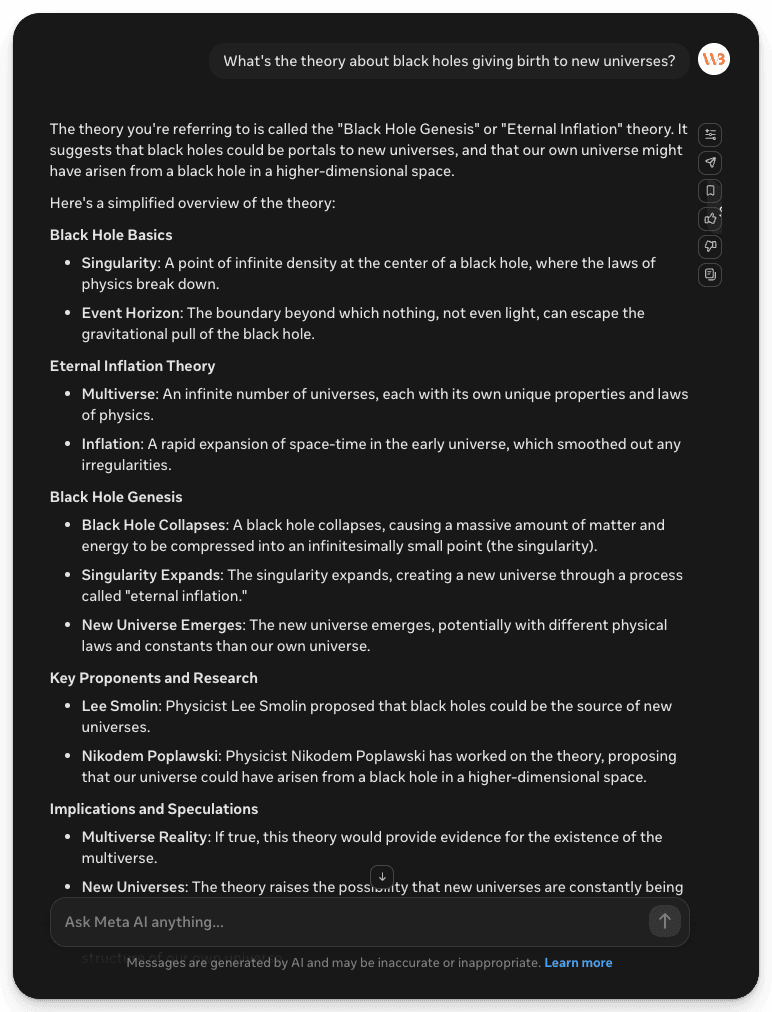

Test 2: Topic Explanation

For the next test, we asked the models to explain the theory of black holes giving birth to a new universe. To compare the models on equal terms, we didn't use the DeepSeek reasoning feature.

In this case, DeepSeek generated a much better explanation of the topic than Llama. It provided a detailed analysis in an easily digestible manner, whereas Llama went deeper but lacked DeepSeek's simplicity and did not introduce the topic clearly from the beginning.

As of now, in the current version of Meta.ai, we cannot upload any images or files. However, if you download certain Llama models onto your device, such as the Llama vision models, you can give images or files to Llama.

However, the output will largely depend on the model you downloaded and your device's capabilities.

Elephas: Best Mac AI Assistant

No matter which LLM model you're using - whether it's Claude, OpenAI, Gemini, Llama, or DeepSeek - Elephas makes it better by creating a personalized knowledge ecosystem that enhances how you interact with AI.

Key Features:

Super Brain: Build your own knowledge base by easily adding documents, web pages, YouTube videos, and notes that your AI can access and learn from.

Works With Any AI: Connect to online models like ChatGPT, Claude, and Gemini, or use privacy-focused offline models like Llama and DeepSeek right on your Mac.

Complete Offline Mode: Work entirely offline with local embeddings for 100% privacy and security of your sensitive information.

Smart Writing Tools: Get help with grammar fixes, content continuation when you're stuck, and smart replies for emails and messages.

Content Repurposing: Easily transform existing content for different platforms and audiences with a single click.

Extensive File Support: Work with CSV, JSON, and 10+ additional formats including PHP, Python, and YAML files.

Cross-Device Sync: Keep your knowledge base updated across all your Apple devices with automatic syncing.

iOS Integration: Access the same powerful features on your iPhone and iPad with the Elephas AI Keyboard.

DeepSeek vs Llama: The best choice?

For everyday users, Llama is clearly better than DeepSeek. You won't face server busy messages or waiting times with Llama. It works smoothly on apps you already use like WhatsApp and Instagram. Llama also lets you create images, which DeepSeek can't do yet.

DeepSeek shines when you need to solve hard problems. It's much better at math tasks and writing code. When you ask DeepSeek to explain something, it gives more complete answers with step-by-step thinking. It can work through complex questions in a way that feels more like human thinking.

Also, Llama costs less to use for businesses. It charges about 40% less than DeepSeek for processing the same amount of text. Llama also works without interruptions, while DeepSeek sometimes has server problems that make it stop working.

Your best choice depends on what you need. Pick Llama for daily use and reliability. Choose DeepSeek when you need help with technical problems or want deeper explanations.

FAQs

1. Can Elephas work with Llama and DeepSeek models?

Yes, Elephas works with both online LLM models (Claude, OpenAI, Gemini) and offline LLM models like Llama and DeepSeek. You can run these models locally on your Mac for complete privacy while still accessing Elephas' knowledge management and writing tools.

2. Which AI is better for everyday use?

Llama is better for everyday use because it works without server issues and integrates with common apps like WhatsApp and Instagram. You can use it consistently without encountering "server busy" messages that often happen with DeepSeek.

3. Which AI performs better for coding tasks?

DeepSeek performs significantly better for coding tasks. It can write more complex and functional code compared to Llama. If you need help with programming or building applications, DeepSeek would be the better choice.

4. Which AI is more affordable for business use?

Llama is more affordable, costing about 40% less than DeepSeek for processing the same amount of text. This makes a big difference for businesses that use AI services frequently or at scale.

5. Which AI provides better explanations for complex topics?

DeepSeek provides more thorough explanations with step-by-step reasoning. It breaks down complex topics in a more detailed way that resembles human thinking patterns, making it better for educational purposes or understanding difficult concepts.
