OpenAI DevDay 2024: Key Announcements and Their Impact on Developers

On October 1st, OpenAI kicked off its much-anticipated DevDay in San Francisco, with follow-up events in London and Singapore. This year’s event took a refreshing turn by focusing on developers rather than flashy new AI models. OpenAI showcased a variety of updates and tools designed specifically to support developers in their work.

This approach shows OpenAI's dedication to building a strong developer community and encouraging innovation in the AI space. But what exactly did they announce, and will these updates really change the AI landscape?

Let’s take a closer look at the key announcements from OpenAI DevDay.

A Shift in Strategy for OpenAI DevDay 2024

OpenAI's DevDay 2024 wasn't about flashy new products. Instead, it revealed a company laser-focused on empowering its developer community. This year's event showcased a series of tools and improvements that, while less headline-grabbing, signal a major strategic pivot.

The star announcements included:

Prompt Caching: Slashing costs and speeding up responses

Realtime API: Bringing natural conversations to voice apps

Model Distillation: Making advanced AI accessible to smaller players

Vision Fine-tuning: Expanding AI's ability to understand images

Each of these updates points to a clear goal: making AI development more efficient, affordable, and versatile. It's a far cry from the consumer-focused excitement of previous years, but it's arguably more impactful.

By focusing on these developer-centric improvements, OpenAI is betting on the power of its ecosystem. They're providing the tools for others to innovate, rather than trying to do it all themselves. It's a mature move that acknowledges the changing AI landscape and the need for sustainable growth.

This shift might not grab headlines, but it could be the key to unlocking AI's potential across industries. By empowering developers, OpenAI is setting the stage for a new wave of AI applications that are faster, cheaper, and more capable than ever before.

Prompt Caching: Reducing Costs and Latency for Developers

OpenAI's latest innovation, prompt caching, is set to change the game for developers working with AI. Imagine being able to slash your API costs and speed up your app's response times - that's exactly what this announcement promises.

At its core, prompt caching works on the input side of a request. When you send a long prompt (1,024 tokens or more), the API automatically caches the beginning of it. If a later request starts with the same prefix, such as the same system instructions or few-shot examples, the API reuses that cached portion instead of processing it again and bills the cached input tokens at a 50% discount.

This clever approach brings some serious perks:

Your wallet will thank you as input costs drop on repeated prompts

Users will love the lightning-fast responses

It works automatically on supported models, with no code changes required

It's worth being clear about what caching doesn't do. Only the prompt prefix is cached, never the output, so the model still generates a fresh response on every call. Asking the same question twice won't guarantee the same answer.

Of course, like any powerful tool, it needs to be used wisely. Cache hits require an exact prefix match, so put static content (instructions, examples) at the start of the prompt and variable content (user input) at the end. Cached prefixes are also short-lived: they are typically evicted after a few minutes of inactivity.

All in all, prompt caching is a big step forward. It's giving developers the tools to build AI apps that are not just smarter, but faster and more cost-effective too. In a world where every millisecond and every cent counts, that's a game-changer.
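To make the savings concrete, here is a minimal sketch of the arithmetic. It assumes the behavior OpenAI described at launch (cached input tokens billed at a 50% discount); the function name, the example price, and the token counts are illustrative assumptions and not part of any SDK.

```python
def input_cost_usd(prompt_tokens: int, cached_tokens: int,
                   price_per_million: float, cached_discount: float = 0.5) -> float:
    """Estimate the input-token cost of one request when part of the prompt is a cache hit."""
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    uncached = prompt_tokens - cached_tokens
    # Cached tokens are billed at a fraction of the normal input price.
    discounted = cached_tokens * (1 - cached_discount)
    return (uncached + discounted) * price_per_million / 1_000_000


# Hypothetical request: a 10,000-token prompt whose first 8,000 tokens
# (system prompt and examples) were cached by an earlier call.
PRICE = 2.50  # assumed USD per 1M input tokens; check current pricing
cold = input_cost_usd(10_000, 0, PRICE)
warm = input_cost_usd(10_000, 8_000, PRICE)
print(f"cold: ${cold:.4f}  warm: ${warm:.4f}")
```

Under these assumptions, the warm request costs 40% less on input than the cold one. Output tokens are billed as usual and are not part of this estimate.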

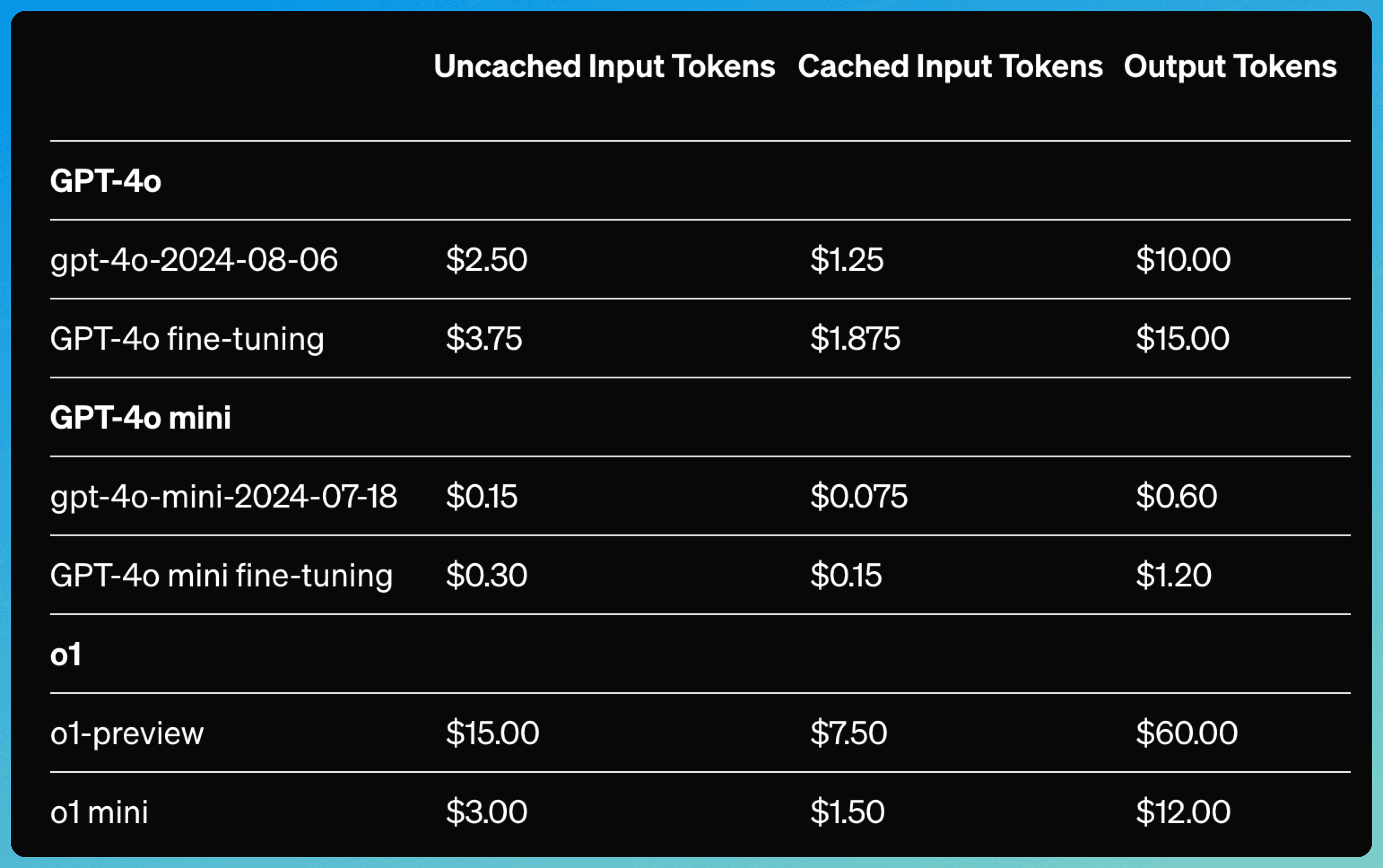

Pricing

Vision Fine-Tuning: Expanding AI’s Visual Capabilities

Example of a desktop bot successfully identifying the center of UI elements via vision fine-tuning with website screenshots.

Another major announcement at OpenAI DevDay is vision fine-tuning for GPT-4o. It's like teaching an already smart AI to see the world through your eyes.

At its core, vision fine-tuning allows developers to customize GPT-4o using both images and text. This isn't just a small step forward; it's a giant leap for AI applications that rely on visual understanding. Think enhanced visual search, smarter autonomous vehicles, and more accurate medical image analysis.

Here's what makes this so exciting:

You can fine-tune the model with as few as 100 images

Performance improves even more with larger datasets

It follows a similar process to text-based fine-tuning

Developers can prepare their image datasets in a specific JSON format, much like they do for chat endpoints.
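As a rough illustration, one training example might be built like this. The sketch assumes the chat-style layout with `image_url` content parts described in OpenAI's fine-tuning guide; the helper name, the prompt text, and the URL are placeholders, so check the current documentation before preparing a real dataset.

```python
import json


def vision_example(system_prompt: str, question: str, image_url: str, answer: str) -> dict:
    """Build one chat-format training example that pairs text with an image."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
            # The assistant turn is the label the model learns to produce.
            {"role": "assistant", "content": answer},
        ]
    }


# Hypothetical example; the URL and the label are placeholders.
example = vision_example(
    "You count traffic lanes in road images.",
    "How many lanes are visible?",
    "https://example.com/road-001.jpg",
    "3",
)
jsonl_line = json.dumps(example)  # one JSON object per line in the training file
print(jsonl_line)
```

Each example becomes a single line in a `.jsonl` file, the same way text-only fine-tuning data is laid out.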

Real-world applications are already showing impressive results. Grab, a food delivery and rideshare company, improved their lane count accuracy by 20% and speed limit sign localization by 13% with just 100 examples.

Automat, an enterprise automation company, saw a whopping 272% uplift in their RPA agent's success rate.

But it's not just about big companies.

This tool is available for all developers on paid usage tiers, and OpenAI is sweetening the deal with a limited-time offer: 1M training tokens per day for free through October 31, 2024.

After that, the pricing structure looks like this:

Training: $25 per 1M tokens

Inference: $3.75 per 1M input tokens and $15 per 1M output tokens

Image inputs are tokenized based on size and priced the same as text inputs
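To see what those rates mean in practice, here is a small cost sketch using the prices listed above. The workload numbers are hypothetical, and the training figure assumes the token count already includes every pass over the data.

```python
TRAIN_PER_M = 25.00   # USD per 1M training tokens
INPUT_PER_M = 3.75    # USD per 1M input tokens at inference
OUTPUT_PER_M = 15.00  # USD per 1M output tokens at inference


def training_cost(tokens: int) -> float:
    """Cost in USD of training on the given number of tokens."""
    return tokens / 1_000_000 * TRAIN_PER_M


def inference_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of running the fine-tuned model on the given token volumes."""
    return (input_tokens * INPUT_PER_M + output_tokens * OUTPUT_PER_M) / 1_000_000


# Hypothetical workload: 2M training tokens, then 1M input and 200K output tokens.
train_usd = training_cost(2_000_000)
infer_usd = inference_cost(1_000_000, 200_000)
print(f"training: ${train_usd:.2f}  inference: ${infer_usd:.2f}")
```

For this made-up workload, training comes to $50.00 and inference to $6.75.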

Vision fine-tuning is more than just a new feature; it's a doorway to a world where AI can truly see and understand visual information in ways we've only dreamed of before. Whether you're building the next big thing in autonomous vehicles or just want to teach AI to recognize your cat, this tool is opening up a world of possibilities.

Realtime API: Revolutionizing Voice-Enabled Applications

One of the biggest announcements at OpenAI DevDay is the Realtime API. With it, developers can build low-latency, speech-to-speech experiences into their apps, making voice interactions smoother and more lifelike than ever before.

Here's what makes the Realtime API so exciting:

It handles speech-to-speech conversations in one swift API call

Supports six preset voices, just like ChatGPT's Advanced Voice Mode

Uses a persistent WebSocket connection for lightning-fast responses

You don't have to use multiple models for voice assistants. The Realtime API does it all - speech recognition, processing, and text-to-speech - in one seamless flow. It even handles interruptions like a pro, making conversations feel more natural.

But it's not just about smooth talking. The API comes with some serious tech features:

Function calling support for triggering actions or fetching context

Multimodal capabilities (with vision and video support on the horizon)

Integration with client libraries for enhanced audio features
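To give a feel for the WebSocket flow, here is a sketch of the JSON events a client might send once connected. The event names and field layout follow the beta documentation as best understood at the time of writing and may have changed; the endpoint, model name, voice, and the `get_weather` tool are all assumptions for illustration. The code only builds the messages and does not open a connection.

```python
import json

# Assumed beta endpoint and model name; confirm both before use.
REALTIME_URL = "wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview"


def session_update(voice: str, instructions: str, tools: list) -> str:
    """Event that configures the session: voice, instructions, callable tools."""
    return json.dumps({
        "type": "session.update",
        "session": {"voice": voice, "instructions": instructions, "tools": tools},
    })


def user_text(text: str) -> str:
    """Event that adds a user message to the conversation."""
    return json.dumps({
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [{"type": "input_text", "text": text}],
        },
    })


def request_response() -> str:
    """Event that asks the model to produce a response."""
    return json.dumps({"type": "response.create"})


# Hypothetical tool the model could call to fetch context.
get_weather = {
    "type": "function",
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

events = [
    session_update("alloy", "You are a friendly voice assistant.", [get_weather]),
    user_text("What's the weather in Singapore?"),
    request_response(),
]
for event in events:
    print(event)
```

In a real client, each string would be sent over the open WebSocket, and audio would be streamed in and out through similar events rather than typed text.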

Early adopters are already seeing the magic. Healthify's AI coach Ria chats about nutrition, while Speak's language learning app helps users practice conversations in new tongues.

Pricing?

It's based on text and audio tokens, with audio input working out to roughly $0.06 per minute and audio output to roughly $0.24 per minute. The API is rolling out in public beta to all paid developers.
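As a quick back-of-the-envelope check, the per-minute figures above can be turned into a per-call estimate. This is a sketch only: actual billing is token-based, and the call durations are hypothetical.

```python
AUDIO_IN_PER_MIN = 0.06   # USD per minute of audio input (approximate)
AUDIO_OUT_PER_MIN = 0.24  # USD per minute of audio output (approximate)


def voice_call_cost(minutes_in: float, minutes_out: float) -> float:
    """Approximate audio cost in USD for one conversation."""
    return minutes_in * AUDIO_IN_PER_MIN + minutes_out * AUDIO_OUT_PER_MIN


# Hypothetical call: the user speaks for 4 minutes, the assistant for 6.
call_usd = voice_call_cost(4, 6)
print(f"${call_usd:.2f}")
```

A ten-minute conversation split this way comes to about $1.68 in audio, before any text tokens.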

Model Distillation: Making AI More Accessible for Smaller Companies

OpenAI also announced Model Distillation at DevDay, a feature that could change everything for smaller companies that want to integrate AI into their workflows or systems.

The idea is simple: it's like having the brains of a supercomputer in a pocket-sized device, and it's set to level the playing field for smaller companies in the AI arena.

Model Distillation lets you use the outputs of big, powerful AI models like GPT-4o to fine-tune smaller, more cost-efficient ones such as GPT-4o mini. It's like teaching a clever student to become nearly as smart as their genius professor on a specific task, but without the hefty price tag.

OpenAI's made the whole process a breeze:

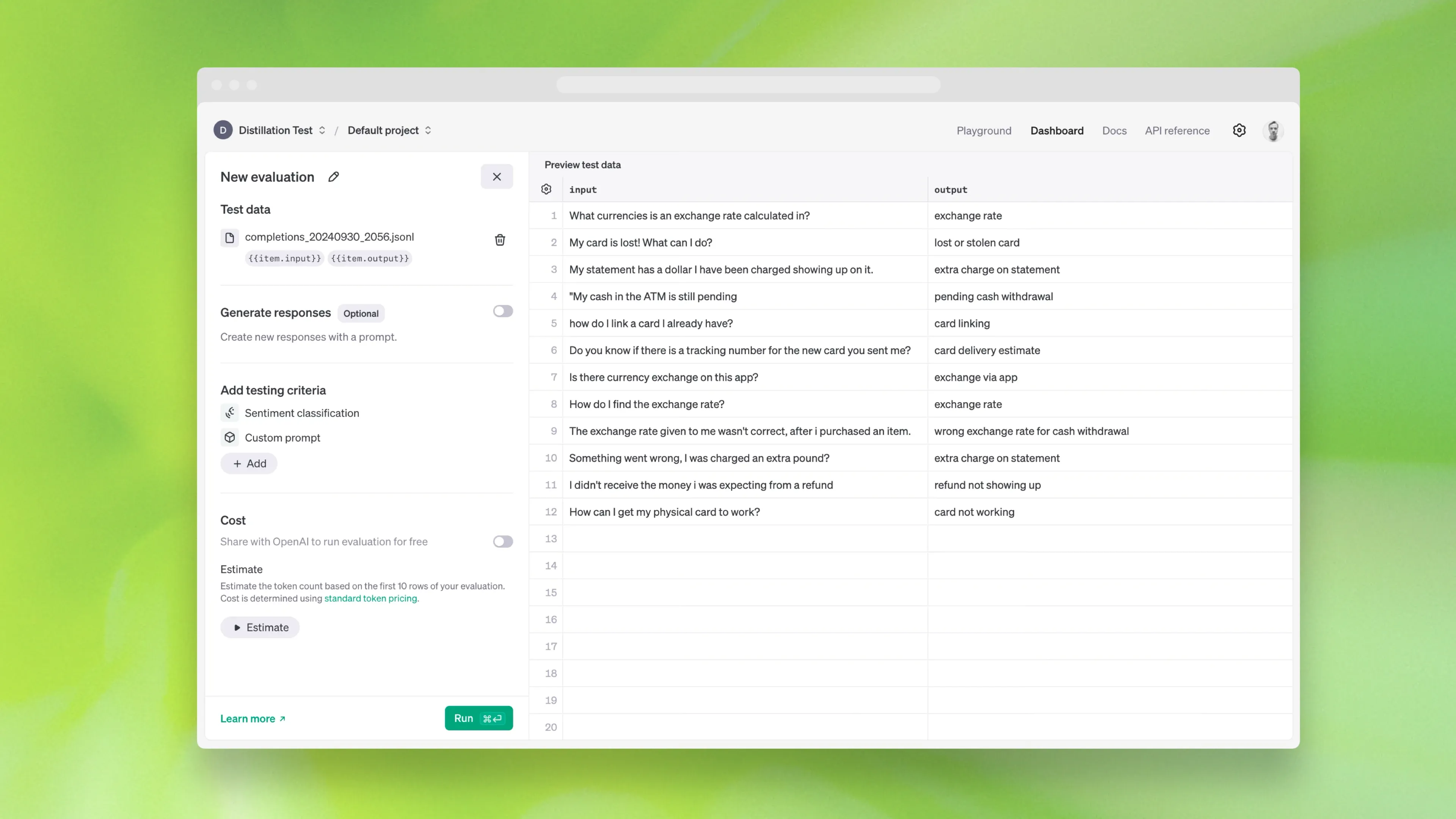

Stored Completions: Capture real-world data effortlessly

Evals: Measure your model's performance on the tasks you care about

Fine-tuning: Polish your model to perfection, all in one place

With Model Distillation, it's as simple as:

Set up an evaluation to keep tabs on your model's progress

Use Stored Completions to gather top-notch training data

Fine-tune your smaller model until it shines
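The data side of those steps can be sketched in a few lines. This assumes the `store` and `metadata` request options that OpenAI described for Stored Completions; the helper names, the tag, and the ticket example are hypothetical, and in OpenAI's hosted workflow the dataset is assembled in the dashboard rather than by hand. No API call is made here.

```python
import json


def stored_request(model: str, messages: list, tags: dict) -> dict:
    """Keyword arguments for a Chat Completions call that asks the API to store the result.

    Intended to be passed as client.chat.completions.create(**kwargs).
    """
    return {"model": model, "messages": messages, "store": True, "metadata": tags}


def to_training_line(messages: list, teacher_output: str) -> str:
    """Turn one prompt plus the large model's answer into a fine-tuning JSONL line."""
    example = {"messages": messages + [{"role": "assistant", "content": teacher_output}]}
    return json.dumps(example)


prompt = [
    {"role": "system", "content": "Classify the support ticket as billing, bug, or other."},
    {"role": "user", "content": "I was charged twice this month."},
]
kwargs = stored_request("gpt-4o", prompt, {"task": "ticket-triage"})
line = to_training_line(prompt, "billing")  # "billing" stands in for the large model's reply
print(line)
```

The smaller model is then fine-tuned on many such lines, and the evaluation from the first step shows whether it has caught up with the larger one.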

Model Distillation is available to all developers, with some sweet freebies to get you started. You get 2M free training tokens daily for GPT-4o mini and 1M for GPT-4o until October 31. After that, standard fine-tuning prices apply.

For smaller companies, this is huge. It means access to powerful AI models without breaking the bank. Whether you're building the next big thing in customer service or revolutionizing data analysis, Model Distillation is your ticket to competing with the big dogs in the AI world.

Elephas: Streamlining OpenAI’s Innovations

Elephas is always at the forefront of AI innovation, continuously integrating the latest advancements to enhance the user experience. With OpenAI’s recent updates from DevDay 2024, we are already exploring how to incorporate these powerful tools into Elephas and make our users' workflow easier and their work time shorter.

Stay tuned to potentially see these new OpenAI features in Elephas, your AI-powered knowledge assistant.

Conclusion

OpenAI is now focusing more on developers than on product launches, as we saw at their DevDay 2024 event. Instead of showing off flashy new products or AI models, OpenAI is giving developers the tools to innovate and build smarter AI solutions.

This shift highlights their commitment to helping developers work faster and more efficiently, making AI more accessible and affordable.

Here are some of the key announcements from DevDay:

Prompt Caching: Helps reduce API costs and speeds up responses.

Realtime API: Makes voice interactions with AI feel more natural and smooth.

Vision Fine-Tuning: Lets AI better understand and process images.

Model Distillation: Allows smaller companies to use powerful AI models without the high costs.

With these updates, OpenAI is giving developers what they need to build the future of AI.

FAQs

1. Is GPT-4o free in ChatGPT?

Yes, GPT-4o is available on ChatGPT's free tier, but with a limit on how many messages you can send. For higher limits, you need a ChatGPT Plus subscription, which costs $20/month.

2. Who runs OpenAI?

OpenAI's leadership comprises a diverse board overseeing the nonprofit arm. Key figures include Chair Bret Taylor, CEO Sam Altman, and notable members like Adam D'Angelo and Larry Summers. This structure ensures a range of perspectives guide the organization's strategic direction and ethical considerations.

3. Does Elon own OpenAI?

No, Elon Musk never owned OpenAI. He co-founded the organization and was one of its early financial backers, but he resigned from OpenAI's board of directors in early 2018 and is no longer involved.
